The DataCore Server (Formerly Known as FAQ 1348)

Explore this Page

- Overview

- Change Summary

- About the Best Practice Guide

- Design Objectives

- The Server Hardware

- Fibre Channel and iSCSI Connections

- General Performance Considerations

- RAID Controllers and Storage Arrays

- Storage Hardware Guidelines to Use with DataCore Servers

- The Operating System

- Upgrading the SANsymphony Software

- TCP/IP Networking

- Major SANsymphony Features

Overview

This guide covers best practices and design guidelines for deploying and managing DataCore software and associated technologies.

Change Summary

Changes since May 2021

| Section(s) | Content Changes |
|---|---|
| All | Updated: Complete overhaul of the guide. |

Changes since August 2020

This document has been reviewed for 10.0 PSP12.

| Section(s) | Content Changes |
|---|---|
| All | Updated: Corrected links to the SANsymphony web help. Removed references to 10.0 PSP6, as it is now End of Life. |

About the Best Practice Guide

Each DataCore implementation will always be unique and giving advice that applies to every installation is therefore difficult. The information in this document should be considered as a set of ‘general guidelines’ and not necessarily strict rules.

DataCore cannot guarantee that following these best practices will result in a perfect solution – there are simply too many separate (and disparate) components that make up a complete SANsymphony installation, most of which are out of DataCore Software’s control – but these guidelines will significantly increase the likelihood of a more secure, stable, and high-performing SANsymphony installation.

This guide assumes that DataCore-specific terms (e.g., Virtual Disk, Disk Pool, CDP, Replication) and their respective functions are understood. It does not replace DataCore technical training or remove the need to use a DataCore Authorized Training Partner, and it assumes fundamental knowledge of Microsoft Windows and the SANsymphony suite.

Compatible SANsymphony Versions

This document applies to SANsymphony 10.0 PSP9 and later versions (earlier versions are now end of life). Any version-specific recommendations are clearly indicated within the text.

Also, refer to the End of life notifications for DataCore Software products for more information.

Design Objectives

The design objectives of any SANsymphony configuration are an ‘end-to-end’ process (from the user to the data), availability, redundancy, and performance. None of these can be considered in isolation; they are integrated parts of an entire infrastructure. The information in this document provides some high-level recommendations that are often overlooked or simply forgotten.

Avoid Complexity

The more complex the design, the more likely unforeseen problems will occur. A complex design can also make a system difficult to maintain and support, especially as it grows. A simple approach is recommended whenever possible.

Avoid Single Points of Failure

Dependencies between major components (e.g., switches, networks, fabrics and storage arrays) can impact the entire environment if they fail.

- Distribute each component over separate IO boards, racks, separate rooms, separate buildings and even separate 'sites'.

- Keep storage components away from public networks.

- Avoid connecting redundant devices to the same power circuit.

- Use redundant UPS-protected power sources and connect every device to them. A UPS backup does not help much if it fails to notify a Host to shut down because that Host’s management LAN switch was not connected to the UPS-backed power circuit.

- Use redundant network infrastructures and protocols where the failure of one component does not make access unavailable to others.

- Do not forget environmental components. A failed air conditioner may collapse all redundant systems located in the same data center. Rooms on the same floor may be affected by a single burst water pipe (even though they are technically separated from each other).

- DataCore strongly recommends that third-party recommendations are followed, and that all components (hardware and software) are in active support with their respective vendors.

Set Up Monitoring and Event Notification

Highly available systems keep services running even if half of the environment has failed, but such failures must always be detected and responded to promptly so that the responsible personnel can fix them as soon as possible and avoid further problems.

Documentation

Document the environment properly, keeping it up-to-date and accessible. Establish 'shared knowledge' between at least two people who have been trained and are familiar with all areas of the infrastructure.

User Access

Make sure that the difference between a 'normal' server and a ‘DataCore Server’ is understood. A DataCore Server should only be operated by a trained technician.

Best Practices are Not Pre-requisites

Some of the Best Practices listed here may not apply to your installation or cannot be applied because of the physical limitations of your installation – for example, it may not be possible to install any more CPUs or perhaps add more PCIe Adaptors, or maybe your network infrastructure limits the number of connections possible between DataCore Servers and so on.

Therefore, each set of recommendations is accompanied by a more detailed explanation so that, when a best practice cannot be followed, it is clear how that may limit your SANsymphony installation.

Also, refer to the following documentation for more information:

The Server Hardware

BIOS

Many server manufacturers have their own 'Low Latency Tuning' recommendations. DataCore always recommends following these recommended settings unless they contradict anything recommended below. Note that server manufacturers may use different names or terminology for the settings.

Collaborative Power Control

This should always be Disabled where possible, as it allows the OS and its power plan to override many of the BIOS settings listed in this section.

Command per Clock (CPC Override/Mask)

This should be enabled as it can increase the overall speed of memory transfer.

Hemisphere Mode

Hemisphere mode can improve memory cache access both to and from the memory controllers, but the server hardware usually must have memory modules of the same specification to take advantage of this setting. This should be set to Automatic.

Intel Turbo Boost

Turbo Boost should be Disabled. This CPU feature allows individual cores to be over-clocked for higher single-thread performance while other cores are put into a power-saving mode so as not to exceed the processor’s energy and heat budget. These clock speed variations contradict SANsymphony’s Poller architecture, which expects equal clock speeds on all cores of a system. The OS reschedules SANsymphony’s Poller threads onto the best available cores multiple times per second, so in extreme cases core clock speed differences lead to massive differences in execution times, eventually causing erratic behavior that impacts SANsymphony's I/O processing and performance.

Non-Uniform Memory Access (NUMA)

Snoop options - Enable the Early Snoop option (if available).

Cluster options - Disable all NUMA Cluster option settings.

If the core count per socket exceeds 64 (with Hyper-Threading on, this applies to the logical core count), NUMA must be enabled.
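The 64-core rule above can be expressed as a small check. This is a minimal sketch, assuming Hyper-Threading provides 2 logical cores per physical core; the function name is hypothetical and not part of any DataCore tool.

```python
def numa_must_be_enabled(physical_cores_per_socket: int, hyper_threading: bool) -> bool:
    """Return True if the 64-core-per-socket rule requires NUMA to be enabled.

    Assumption: with Hyper-Threading on, each physical core exposes 2 logical
    cores, and the rule is applied to that logical core count.
    """
    threads_per_core = 2 if hyper_threading else 1
    logical_cores_per_socket = physical_cores_per_socket * threads_per_core
    # "exceeds 64" means strictly greater than 64
    return logical_cores_per_socket > 64


print(numa_must_be_enabled(32, hyper_threading=False))  # 32 logical cores
print(numa_must_be_enabled(48, hyper_threading=True))   # 96 logical cores
```

Note that a 32-core socket with Hyper-Threading on lands exactly at 64 logical cores, which does not exceed the limit.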

Advanced Encryption Standard Instruction Set

This should be enabled as it is utilized for Virtual Disk encryption.

Secure Boot

Secure Boot is supported with SANsymphony 10.0 PSP13 and newer; it must be disabled on older SANsymphony installations.

Power Management Settings

Power saving/C-States - Disable all C-states (so that CPUs stay in the active 'C0' state) and any other power-saving settings. SANsymphony relies on the CPUs being available at all times rather than having to wait while a CPU is 'woken up' from an idle state. These wake-up times cause the same issues as those described under Intel Turbo Boost.

Static High – If available, set the power management settings to static high to disable any additional CPU power saving.

CPUs

All x64 processors (except for Intel’s Itanium family) are supported for use in a DataCore Server. DataCore recommends using ‘server-class’ CPUs rather than those intended for ‘workstation’ use.

The Speed of CPUs

DataCore Software has not found any significant performance differences between CPU manufacturers for processors that share similar architectures and frequencies.

Even so, faster (i.e., higher-frequency) CPUs are always preferred over slower ones, as they can process more instructions per second. Where possible, DataCore also prefers fewer, faster cores over more, slower cores. Consult your server vendor to find out whether additional CPU sockets must be populated to use all the available PCIe and memory slots on the server’s motherboard.

Hyper-Threading (Intel)

For Intel CPUs manufactured after 2014, DataCore recommends that Hyper-Threading is Enabled as testing has shown this can help increase the number of threads for SANsymphony's I/O Scheduler, allowing more I/O to be processed at once. For earlier Intel CPUs, DataCore recommends that Hyper-Threading be Disabled as testing has shown that older CPUs operate at a significantly lower clock speed per hyperthreaded core and result in a disadvantage to the single thread performance expected by SANsymphony’s Poller threads.

What is the Recommended Number of CPUs in a DataCore Server?

At least 2 logical processors are required for the base SANsymphony Software. This is the absolute minimum; it does not allow significant IO performance and is not recommended for production. Production-grade systems should always have 4 or more logical processors, plus the additional processors listed below, for optimal performance at the lowest possible latency.

- 1 additional logical processor for each fibre channel port.

- 3 additional logical processors for each pair of iSCSI ports. Software iSCSI connections generally have a much larger overhead than Fibre Channel ports, mainly due to the extra work required to encapsulate/de-encapsulate SCSI data to/from IP packets.

- 1 additional logical processor for both the Live and Performance Recording features.

- 2 additional logical processors for the (Asynchronous) Replication feature.

- 1 additional logical processor per IO port to support Inline Deduplication and Compression (ILDC) under full load conditions.
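The sizing rules above add up per feature, so they can be sketched as a simple calculator. This is an illustration under stated assumptions, not an official DataCore sizing tool: the class and function names are hypothetical, an odd number of iSCSI ports is rounded up to a full pair, and "IO port" for ILDC is taken to mean every Fibre Channel and iSCSI port.

```python
import math
from dataclasses import dataclass


@dataclass
class ServerConfig:
    """Hypothetical description of a physical DataCore Server's workload."""
    fc_ports: int = 0
    iscsi_ports: int = 0
    recording: bool = False    # Live and/or Performance Recording in use
    replication: bool = False  # (Asynchronous) Replication in use
    ildc: bool = False         # Inline Deduplication and Compression in use


def recommended_logical_processors(cfg: ServerConfig) -> int:
    """Sum the per-feature logical processor recommendations."""
    total = 4                    # production baseline (2 is the absolute minimum)
    total += cfg.fc_ports        # 1 per Fibre Channel port
    # 3 per pair of iSCSI ports; assumption: an odd port counts as a full pair
    total += 3 * math.ceil(cfg.iscsi_ports / 2)
    if cfg.recording:
        total += 1               # 1 for Live and Performance Recording together
    if cfg.replication:
        total += 2
    if cfg.ildc:
        # assumption: "IO port" means every FC and iSCSI port
        total += cfg.fc_ports + cfg.iscsi_ports
    return total


example = ServerConfig(fc_ports=4, iscsi_ports=2, recording=True, replication=True)
print(recommended_logical_processors(example))  # 4 + 4 + 3 + 1 + 2 = 14
```

Treat the result as a starting point; Hyper-V or Host VMs on the same hardware need additional processors, as described below.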

Hyperconverged Virtual SAN

Running SANsymphony alongside Hyper-V (Windows only)

Only the Hosts run in Virtual Machines; the SANsymphony software is still installed on a 'physical' server. Follow the recommendations in the previous section, but consider that an additional logical processor may be needed to manage the Hyper-V software and Host VMs. Consult Microsoft’s Hyper-V documentation for more information.

Running SANsymphony inside a Virtual Machine

The DataCore Servers and Hosts all run in Virtual Machines. In this case, treat 'logical processor' requirements as 'virtual processor' (vCPU) requirements. Consider, however, how resources are allocated to all other Hosts and how the Hypervisor's 'physical' CPUs are shared between Host VMs and the VMs used for DataCore Servers. Even if the same number of vCPUs is created to match an equivalent physical DataCore Server, there is no guarantee that these vCPUs will deliver the same rate and throughput as physical CPUs. Set up appropriate resource reservations to guarantee CPU and RAM access for the SANsymphony Virtual Machine at all times.

Also, refer to DataCore Hyperconverged Virtual SAN Best Practices Guide for more information.

Power

Use redundant and uninterruptible power supplies (UPS) whenever possible. Refer to UPS Support for more information.

System Memory (RAM)

The Amount of System Memory

The minimum amount of memory for a DataCore Server is 8GB. The recommended amount of memory for a DataCore Server depends entirely on the size and complexity of the SANsymphony configuration.

Use the ‘DataCore Server Memory Considerations’ document available from the Support Website to calculate the memory requirement for the type, size, and complexity of the SANsymphony configuration, always allowing for plans of future growth.

Also, refer to DataCore Server Memory Considerations for more information.

The Type of System Memory

To avoid any data integrity issues while I/O is being handled by the DataCore Server’s own Cache, ECC Memory Modules should be used.

If a Server CPU uses NUMA architecture, then all the physical memory modules should have the same specification. See the NUMA Group Size Optimization and Hemisphere Mode entries from the BIOS section for more information.

A Summary of Server Hardware Recommendations

BIOS

- Collaborative power control should be disabled.

- CPC Override/Mask should be enabled.

- Hemisphere Mode should be set to Automatic.

- Intel Turbo Boost should be disabled.

- NUMA Group Size Optimization/Node Interleaving should be enabled and set to Flat (if the option is available).

- Advanced Encryption Standard instruction set support should be enabled.

- Secure Boot should be disabled for PSP12 and earlier.

- Power saving (C-states) should all be disabled but Static High should be enabled.

CPU

Generally

- Use ‘server class’ processors.

- Use fewer-but-faster cores rather than more-but-slower cores.

- Enable Hyper-Threading (Intel) on CPUs from 2014 or newer.

- Disable Hyper-Threading (Intel) on CPUs older than 2014.

Physical DataCore Servers

- At least 4 logical processors for the base SANsymphony Software. You can install the software using just 2 logical processors, but this is a minimum requirement.

- 1 additional logical processor for each fibre channel port.

- 3 additional logical processors for each pair of iSCSI ports. Software iSCSI connections generally have a much larger overhead than Fibre Channel ports, mainly due to the extra work required to encapsulate/de-encapsulate SCSI data to/from IP packets.

- 1 additional logical processor for both the Live and Performance Recording features.

- 2 additional logical processors for the (Asynchronous) Replication feature.

- 1 additional logical processor per IO port to support ILDC.

Hyperconverged DataCore Servers

Running SANsymphony alongside Hyper-V (Windows only)

- Follow the recommendations as for Physical DataCore Servers, with additional logical processors required for the Hyper-V software.

Running SANsymphony inside a Virtual Machine

- Follow the recommendations as for Physical DataCore Servers (substituting 'virtual' for 'logical' processors), and take all other 'Host' VMs into account so that the DataCore Server VM and Host VMs are not competing for resources.

Power

- Use redundant power supplies.

- Use an uninterruptible power supply (UPS).

System Memory

- Refer to ‘DataCore Server Memory Considerations’ FAQ documentation for more information.

- Use ECC Memory.

- Enable CPC Settings

Fibre Channel and iSCSI Connections

Multi-ported vs Single-ported Adaptors

Fibre Channel host bus adapters (HBAs) and network interface cards (NICs) are available in single-, dual-, and quad-port configurations, but there is often very little difference in performance between two single-port adaptors and one dual-port adaptor, or between two dual-port HBAs and one quad-port HBA.¹

There is however a significant implication for high availability when using a single adaptor - even if it has multiple ports in it - as most types of adaptor failures will usually affect all ports on it rather than just one (or some) of them.

Using many adaptors that have a small number of ports on them will reduce the risk of multiple port failures happening at the same time.

iSCSI Connections

Link Aggregation and SCSI Load Balancing

For better performance, use faster, separate network adaptors and links instead of teaming multiple slower adaptors. For high availability, use more individual network connections and multipath I/O software rather than relying on teaming or spanning tree protocols to manage redundancy. As in Fibre Channel environments, which use independent switches for redundant fabrics, this also prevents ‘network loops’, making spanning tree protocols unnecessary and simplifying the overall iSCSI implementation.

Fundamentally, SCSI load-balancing and failover functions are managed by Multipath I/O protocols²; TCP/IP uses a completely different set of protocols for its own load-balancing and failover functions. When the two are combined (i.e., iSCSI, where SCSI commands managed by Multipath I/O protocols are ‘carried’ by TCP/IP protocols), the interaction between two protocols performing the same function can lead to unexpected disconnections or even complete connection loss.

NIC Teaming

NIC teaming is not recommended for iSCSI connections as it adds more complexity (without any real gain in performance); and although teaming iSCSI Targets - i.e. Front-end or Mirror ports - would increase the available bandwidth to that target, it still only allows a single target I/O queue rather than, for example, two, separate NICs which would allow two, independent target queues with the same overall bandwidth.

Secondary/Multiple IP-Addresses per NIC

SANsymphony’s iSCSI Target implementation binds to the IP Address rather than to the MAC Address of a NIC. This means that multiple IP Addresses per NIC can bind multiple iSCSI Targets to the same NIC hardware, allowing iSCSI IO performance to scale to match the capabilities of the NIC. Each iSCSI target instance can process up to 80k IO/s, while a 10 GbE NIC port supports up to 160k IO/s according to its physical interface specification. It therefore makes sense to apply up to 3 IP Addresses per 10 GbE NIC to fully saturate it.

Additional CPU cores may be required for high iSCSI target counts.
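The number of IP Addresses per NIC follows from dividing the NIC's IO/s capacity by what one iSCSI target instance can process, rounded up. The sketch below is an illustration only; the function name is hypothetical. Using the 160k IO/s figure for a 10 GbE port, the 80k IO/s upper bound gives 2 addresses, while the 60k IO/s lower bound quoted under What to Expect from iSCSI gives 3, which is one way to read the "up to 3" recommendation.

```python
import math


def ip_addresses_per_nic(nic_iops: int, iops_per_target: int) -> int:
    """Target IP Addresses needed so the bound iSCSI Targets can saturate one NIC port."""
    if iops_per_target <= 0:
        raise ValueError("iops_per_target must be positive")
    return math.ceil(nic_iops / iops_per_target)


TEN_GBE_PORT_IOPS = 160_000  # figure quoted above for a 10 GbE NIC port

print(ip_addresses_per_nic(TEN_GBE_PORT_IOPS, 80_000))  # 2 at the 80k upper bound
print(ip_addresses_per_nic(TEN_GBE_PORT_IOPS, 60_000))  # 3 at the 60k lower bound
```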

iSCSI Loopback Connections

Do not connect iSCSI Initiator sessions to iSCSI Targets that use the same IP and MAC Address. Always make sure sessions run between different physical MAC Addresses; otherwise, the IP stack will compete for the same transmission buffers, leading to very high latency and low IO performance.

Unwanted iSCSI Targets

SANsymphony’s iSCSI Target automatically binds to all available IP Addresses found on regular network interfaces. Some users do not want iSCSI bound to certain network ports, or do not want unwanted iSCSI targets to appear in the configuration. To remove unwanted iSCSI Targets permanently, disable the appropriate DataCore Software iSCSI Adapter instance in Device Manager (under DataCore Fibre-Channel Adapters). The instance can be identified by matching the MAC Address of the port in the Management Console with one of the instances in Device Manager. After the instance is disabled in Device Manager, the iSCSI Port in SANsymphony can be safely deleted and will not reappear unless the disabled adapter instance is re-enabled.

Spanning Tree Protocols (STP/RSTP/MSTP)

None of the Spanning Tree Protocols (STP, RSTP, or MSTP) are recommended on networks used for iSCSI, as they may cause unnecessary interruptions to I/O; for example, other, unrelated devices can trigger unexpected network topology changes, causing STP to re-route iSCSI commands inappropriately or even block them completely from reaching their intended target.

Also, refer to the following documentation for more information:

- Qualified Hardware Components - DataCore and Host Servers

- Known Issues - Third-Party Hardware and Software

- SANsymphony - iSCSI Best Practices

A Summary of Recommendations for Fibre Channel and iSCSI Connections

Fibre Channel and iSCSI Connections

Use many adapters with a smaller number of ports each, rather than fewer adapters with larger numbers of ports.

iSCSI Connections

- Use faster, separate network adaptors instead of NIC teaming.

- Do not use NIC teaming or STP protocols with iSCSI connections. Use more individual network connections (with Multipath I/O software) to manage redundancy.

- Use independent network switches for redundant iSCSI networks.

- Add multiple IP-Addresses to NICs to scale iSCSI Targets.

- Do not connect Loopback iSCSI sessions between the same IP and MAC.

- Unwanted iSCSI Targets can be removed by disabling the DataCore Software iSCSI Adapter instance in Device Manager/DataCore Fibre-Channel Adapters.

General Performance Considerations

What to Expect from Fibre-Channel

With 16 Gbit or faster Fibre Channel HBAs, a single CPU thread is capable of forwarding 250k IOPS per port. If the performance requirement is lower, multiple FC ports can share a single thread. Scale the number of ports and cores according to the actual performance requirements of the targeted environment.

What to Expect from iSCSI

A CPU thread, depending on clock speed, is capable of forwarding 60k-80k IOps per virtual iSCSI target portal. If the performance requirement is less, then multiple iSCSI target portals can share a single thread. It is possible to have multiple IP addresses per NIC to bind multiple iSCSI target portals to fast physical interfaces to maximize the utilization of the connections.
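The per-thread figures for Fibre Channel and iSCSI can be turned into a rough thread estimate for a required workload. This is a minimal sketch that treats the quoted numbers as upper bounds and rounds up; the constant and function names are hypothetical, and real results depend on CPU clock speed and I/O profile.

```python
import math

# Per-thread forwarding figures quoted in this section (upper bounds, not guarantees)
FC_IOPS_PER_THREAD = 250_000        # 16 Gbit or faster Fibre Channel port
ISCSI_IOPS_PER_THREAD_LOW = 60_000  # iSCSI target portal, slower CPU clock
ISCSI_IOPS_PER_THREAD_HIGH = 80_000 # iSCSI target portal, faster CPU clock


def threads_needed(required_iops: int, iops_per_thread: int) -> int:
    """Minimum number of CPU threads to forward the required IOPS (at least 1)."""
    return max(1, math.ceil(required_iops / iops_per_thread))


required = 400_000
print(threads_needed(required, FC_IOPS_PER_THREAD))         # 2 threads over FC
print(threads_needed(required, ISCSI_IOPS_PER_THREAD_LOW))  # 7 threads over iSCSI (conservative)
print(threads_needed(required, ISCSI_IOPS_PER_THREAD_HIGH)) # 5 threads over iSCSI (optimistic)
```

Using the lower iSCSI figure for planning leaves headroom if the CPU clock speed turns out to be at the slower end.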

Port Scaling Considerations

When it comes to port scaling, each functional port role should always have a redundant layout on every SANsymphony server. Ports are assigned Front-End, Mirror, and Back-End roles. Having at least 2 ports for each role gives a good level of resiliency within the server and prevents mirrored Virtual Disks from failing or failing over to a remote SANsymphony server.

While it is possible to assign multiple roles to one port, it is not recommended. The only roles that may be shared are Mirror and Back-End on the same port, because Back-End performance requirements are usually reduced by cache optimization, so they can coexist with the requirements of mirror traffic.

Front-End roles should always be exclusive per port to guarantee non-blocking access to the cache.

When Front-End is scaled up with additional ports, then Mirroring should be scaled as well.

As a rule of thumb, try not to have more than 50 initiators logging into a single target port. SANsymphony technically supports many more, but all initiators compete for the bandwidth, and under high-load conditions some hosts may lose out and suffer unexpectedly high latency.

In Fibre-Channel environments try not to have more than 2 initiator line speeds connecting to a target to prevent suffering from “slow drain” effects during high load conditions in the entire fabric.
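The port scaling guidance above (at least 2 ports per role, no more than about 50 initiators per target port, and Mirror scaled with Front-End) can be sketched as a per-server port plan. This is an illustration under assumptions: the function names are hypothetical, initiators are assumed to be spread evenly across Front-End ports, and Mirror ports are assumed to scale 1:1 with Front-End ports, which the text above does not specify.

```python
import math

MAX_INITIATORS_PER_TARGET_PORT = 50  # rule of thumb from this section
MIN_PORTS_PER_ROLE = 2               # at least 2 ports per role for redundancy


def front_end_ports(initiators: int) -> int:
    """Front-End target ports so that no port serves more than 50 initiators."""
    needed = math.ceil(initiators / MAX_INITIATORS_PER_TARGET_PORT)
    return max(MIN_PORTS_PER_ROLE, needed)


def port_plan(initiators: int) -> dict[str, int]:
    """Per-server port plan; assumes initiators spread evenly across Front-End ports."""
    fe = front_end_ports(initiators)
    return {
        "front_end": fe,
        "mirror": fe,                    # assumption: Mirror scaled 1:1 with Front-End
        "back_end": MIN_PORTS_PER_ROLE,  # minimum; size to the storage actually attached
    }


print(port_plan(120))  # {'front_end': 3, 'mirror': 3, 'back_end': 2}
```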

Scale Up vs. Scale Out

Scale-up means adding more resources (such as ports, CPUs, memory, or storage) to a single SANsymphony Server in a Server Group.

Scale-out means adding more SANsymphony Servers to a Server Group up to 64 Servers in total.

Scale-out may also mean having multiple SANsymphony Server Groups in a single datacenter, to be able to run different code revisions and to apply application-specific Service Level Agreements (SLAs) in a more differentiated way than is possible within a single instance of federated Servers.

While it may make sense to give a single Server as much responsibility as possible, it may be wise to spread the risk across more than just 2 Servers for high availability and distribution of work. SANsymphony Server Groups operate as a federated grid of loosely coupled Servers, meaning that each Server contributes to a parallel effort with all other Servers to achieve the desired performance and availability. With just 2 Servers, 100% of the presented Virtual Disk mirrors lose redundancy as soon as one of the Servers is compromised. With 4 Servers this risk is already down to 50%, and it is possible to use 3-way mirrors, which tolerate the loss of a mirror member and stay highly available with 2 of 3 data copies in the event of a Server outage. The more Servers exist in a Server Group, the lower the risk. It is always a question of “how many eggs to carry in a single basket”!
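The 100% and 50% figures above can be reproduced with a simple model. This sketch assumes, hypothetically, that 2-way mirrors are spread evenly so that exactly one mirror exists for every pair of Servers; under that assumption, the share of mirrors losing redundancy when one Server fails works out to 2 divided by the number of Servers. The function name is illustrative only.

```python
from fractions import Fraction
from itertools import combinations


def share_losing_redundancy(num_servers: int, failed_server: int = 0) -> Fraction:
    """Fraction of 2-way Virtual Disk mirrors that lose redundancy when one Server fails.

    Hypothetical model: exactly one mirror exists for every unordered pair of
    Servers, i.e. mirrors are spread evenly across all possible Server pairs.
    """
    if num_servers < 2:
        raise ValueError("2-way mirrors need at least 2 Servers")
    pairs = list(combinations(range(num_servers), 2))
    affected = [pair for pair in pairs if failed_server in pair]
    return Fraction(len(affected), len(pairs))


for servers in (2, 4, 8):
    print(servers, share_losing_redundancy(servers))  # 2 1, 4 1/2, 8 1/4
```

Real layouts are rarely perfectly even, so treat the result as a best case for a given Server count.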

A similar situation exists from the storage pool perspective. While it is possible to scale a single pool up to 8 PB in capacity, it may not be wise to do so: as soon as a single back-end device of a pool fails, the pool goes offline, causing the Virtual Disk mirrors it serves to lose redundancy.

Furthermore, a single pool has limited IO performance because it is a single performance instance, while multiple pools scale performance out in parallel.

RAID Controllers and Storage Arrays

The DataCore Server's Boot Disk

Configure the DataCore Server’s own Boot Disk for redundancy. It is recommended to use RAID 1, as this is usually simpler (less complex to configure and has less overhead than RAID 5). It is not recommended to use the same Storage Array controller for the Boot Disk and Pool Disks.

Disks Used for DataCore Disk Pools

RAID and Storage Array controllers used to manage physical disks in Disk Pools must be able to handle I/O from multiple Hosts connected to the DataCore Server, so use high-performance, low-latency hardware with independent buses or backplanes designed for ‘heavy’ workloads (‘workstation’ hardware is not usually designed for such workloads).

A low-end RAID controller will deliver low-end performance. The integrated (onboard) RAID controller often supplied with a DataCore Server may only be sufficient for the I/O expected on the boot drive. Controllers with their own dedicated CPU and cache can manage much higher I/O workloads and many more physical disks. Consult your storage vendor about the appropriate controller to meet your expected demands.

Intel’s VROC technology (Virtual RAID on CPU) has been tested and qualified as an appropriate high-performance solution for NVMe RAID architectures and can be used with SANsymphony’s storage pools. Availability depends on support from the chosen server vendor, supported NVMe devices, and processor requirements for VROC.

If both 'fast' and 'slow' disk types share the same disk controller in the storage array (e.g., an SSD sharing the same disk controller as a SAS RAID5 set), the slower disks on that controller can hold up I/O to the faster disks. DataCore recommends a separate disk controller for each disk speed type. If there is no choice but to mix disk speed types on the same controller (for example, SSD with SAS), make sure the SAS disks have 'no RAID' (or RAID0) configured and use SANsymphony's Disk Pool mirroring feature instead, as this should be faster than hardware RAID mirroring.

Also, refer to the following documentation for more information:

- Mirroring Pool Disks

- The DataCore and Host Servers - Qualified Hardware

- Known Issues - Third-Party Hardware and Software

A Summary of Recommendations for RAID Controllers and Storage Arrays

RAID Controllers and Storage Arrays

- Use high-performance/low-latency hardware with independent buses or backplanes designed for ‘heavy’ workloads (‘workstation’ hardware is not usually designed for such workloads).

- Avoid using ‘onboard’ disk controllers for anything other than the DataCore Servers’ Boot Disks.

- Intel VROC is qualified for SANsymphony storage pools.

- Configure the DataCore Server’s Boot Disk for RAID1.

- Use a separate controller for physical disks used in either Disk Pools or for the Replication buffer.

- If mixing fast and slow disks on the same controller, then use Disk Pool mirroring instead of hardware RAID on the slower disks.

Storage Hardware Guidelines to Use with DataCore Servers

Overview

Any Storage Array capable of presenting SCSI-based disk devices to Microsoft Windows (on which SANsymphony runs), formatted with a 512-byte block size (any version) or a 4 KiB block size (10.0 PSP5 and later), can be used in DataCore Disk Pools.

Storage that uses Disk Devices that are marked as "Removable" within Windows' Disk Management cannot be used.

Which specific Storage Arrays are 'qualified' for use with SANsymphony?

DataCore does not qualify specific Storage Arrays; as long as the criteria in the previous section are met, all Storage Arrays are considered 'qualified'. However, not all Storage Arrays can be used with the SANsymphony Shared Multi-Port Array (SMPA) function; see the appropriate section on SMPA configurations below.

Some Storage Arrays do have specific configuration requirements to make them function correctly with SANsymphony. Refer to the Known Issues for Third Party Components FAQ to see if your specific Array is mentioned.

Also, some DataCore vendors have their own storage solutions that they have tested and qualified as 'DataCore-Ready'. See DataCore Ready Partners for those vendors.

Using the DataCore Fibre Channel Back-end Driver

A Storage Array that is connected to a DataCore Server using the DataCore Fibre Channel Back-end driver can also use DataCore's inbuilt back-end failover function as long as the LUNs from the Storage Array are connected to different back-end paths and/or different controllers on the Storage Array and the controllers are in an Active/Active configuration.

For a complete list of Fibre Channel Host Bus Adapters that can use the DataCore Fibre Channel Back-end driver, refer to the Qualified Hardware Components FAQ.

Storage Arrays Connected without using the DataCore Fibre Channel Back-end Driver

A Storage Array that is connected to a DataCore Server but without using the DataCore Fibre Channel back-end driver can still be used. This includes SAS or SATA-attached, all types of SSD, iSCSI connections and any Fibre Channel connection using the Vendor's own driver.

Any storage connected in this way appears to the SANsymphony software as an 'Internal' or 'direct-attached' Storage Array, and some SANsymphony functionality is unavailable; for example, SANsymphony's performance tools cannot report some of the performance counters related to storage attached to DataCore Servers. Some potentially useful logging information about connections between the DataCore Server and the Storage Array is also lost (i.e., SCSI connection alerts or errors cannot be monitored directly), which may hinder troubleshooting should any be needed. Since no DataCore driver communicates with the storage, refer to the vendor's documentation on configuring the storage with Windows Server (the operating system that DataCore SANsymphony runs on). If the Windows operating system can access the LUN, SANsymphony should be able to use it in a pool, provided it meets the disk requirements.

Storage Arrays Connected by iSCSI

SANsymphony does not have an iSCSI initiator driver. Refer to the Storage Arrays Connected without using the DataCore Fibre Channel Back-end Driver section above.

Storage Arrays that are 'ALUA-capable'

The DataCore Fibre Channel back-end driver is not ALUA-aware, so it ignores any ALUA-specific settings sent to it by the Storage Array. However, SANsymphony can still provide ALUA-capable Virtual Disks to Hosts regardless of whether the Storage Array attached to the DataCore Server is ALUA-capable.

Using a Third-Party Failover Product

Third-party failover products (for example, branded MPIO) can be used directly on the DataCore Server. Where Storage Arrays are attached by Fibre Channel connections, do not use the DataCore Fibre Channel back-end driver with any third-party failover product; use the third party's preferred Fibre Channel driver instead.

512-byte, 512e, and 4 KiB Sector Support

SANsymphony 10.0 PSP5 and later include native support for Advanced Format Disks (AFD) that use the 4 KiB sector format. Refer to 4 KiB Sector Support for more information.

Earlier versions of SANsymphony do not support 4 KiB sectors, but Advanced Format 512e disks (also known as '512 emulation') can be used instead. Refer to your disk manufacturer for more details.

NVMe Storage

NVMe storage is supported on SANsymphony 10.0 PSP 2 or later.

RAID Stripe and Striped-set Sizing

Normal Disk Pools

This setting is only an important consideration when using parity-based RAID storage (RAID 5 or RAID 6). For RAID 1 (or RAID 10), the stripe-set size has no impact because no parity calculations are required.

I/O requests to SANsymphony Disk Pool disks range from 4 KiB to 1 MiB. SANsymphony aggregates multiple smaller I/O requests for each Virtual Disk up to a maximum size of 1 MiB.

Although it is not possible to predict the exact size of an I/O request at any given moment, general recommendations for RAID stripe sizes can be derived using the following method:

- Determine the number of data disks: take the total number of disks in the RAID set and subtract the disks used for parity. For example, a 3-disk RAID 5 set uses 2 disks for data and 1 for parity, and a 4-disk RAID 6 set also uses 2 disks for data (with 2 for parity).

- Calculate the stripe size: divide the maximum I/O size (1 MiB) by the number of data disks. In both examples above, each RAID set has 2 data disks, so the recommended stripe size is 1 MiB ÷ 2 = 512 KiB per data disk.
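The method above is simple arithmetic. The short Python sketch below reproduces it and the two worked examples, assuming the 1 MiB maximum I/O size stated earlier; the function names are illustrative only and are not part of any DataCore tool.

```python
def data_disks(total_disks: int, parity_disks: int) -> int:
    """Number of disks in a RAID set that hold data rather than parity."""
    if parity_disks < 0 or total_disks <= parity_disks:
        raise ValueError("a RAID set needs at least one data disk")
    return total_disks - parity_disks


def recommended_stripe_kib(total_disks: int, parity_disks: int,
                           max_io_kib: int = 1024) -> float:
    """Per-data-disk stripe size so that one maximum-size (1 MiB) I/O fills one full stripe."""
    return max_io_kib / data_disks(total_disks, parity_disks)


# The two examples from the text: a 3-disk RAID 5 set and a 4-disk RAID 6 set
for label, total, parity in [("RAID 5", 3, 1), ("RAID 6", 4, 2)]:
    print(f"{total}-disk {label}: {recommended_stripe_kib(total, parity):g} KiB per data disk")
```

If the result is not a stripe size your RAID controller offers (for example, 1 MiB ÷ 3 data disks ≈ 341.3 KiB), a nearby supported size has to be chosen; the guidance above does not say which way to round.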

Capacity Optimized Disk Pools

Disks used in Capacity-Optimized Disk Pools (with deduplication and compression, powered by ILDC/ZFS) should use a stripe size of 128 KiB, which matches the maximum write size of the underlying ZFS filesystem.

Additionally:

- Disk devices used for L2ARC or Mirrored Special Devices should not be part of a RAID set, to avoid unnecessary performance overhead.

- Refer to the Best Practices – Dedupe and Capacity Optimization (ILDC) document on the DataCore FAQ page for more information.

Multiple LUNs Created from a Single RAID Set

DataCore recommends not creating multiple logical volumes (LUNs) from a single RAID set for use as Disk Pool disks.

SANsymphony distributes I/O requests across all disk devices in a Disk Pool, including I/O from different Virtual Disks (for example, Virtual Disks that share the same storage profile). If multiple logical disks originate from the same RAID set, this can cause disk thrashing, a condition in which a single physical disk must process multiple concurrent I/O requests from different Virtual Disk sources. This behavior can degrade overall performance.

Even with SSD-based RAID, disk thrashing can still occur, although the impact is significantly lower than with HDD-based RAID, which has higher seek times and therefore more noticeable performance degradation.

Storage Arrays and SANsymphony Shared Multi-Port Array Configurations

While the preferred Storage Array solution for SANsymphony is to have each LUN from each Storage Array presented to each DataCore Server individually (for maximum redundancy), it is possible to 'share' the same LUN from the same Storage Array with all DataCore Servers in the same Server Group at the same time. This can be useful when using the Maintenance Mode feature of the software.

This type of configuration is known as a Shared Multi-Port Array (SMPA) configuration. However, it is less redundant, because the Storage Array itself becomes a single point of failure. Dual Virtual Disks created in this configuration do not use SANsymphony's own write-back caching for write I/O (read I/O is still cached). Not every Storage Array is supported for use in this type of configuration. Otherwise, all of the storage guidelines listed above also apply to SMPA storage.

For a complete list of Storage Arrays that can be configured for use with the SANsymphony SMPA function, refer to the Shared Multi-Port Array Tested Configurations FAQ.

The Operating System

Microsoft Windows

On Server Vendor Versions of Windows

DataCore recommends using non-OEM versions of Microsoft Windows where possible to avoid the installation of unnecessary, third-party services that will require extra system resources and so potentially interfere with SANsymphony.

'R2' Editions of Windows

Only install R2 versions of Windows that are listed either in the 'Configuration notes' section of the release notes for the currently installed version of SANsymphony or in the SANsymphony Component Software Requirements section.

Windows System Settings

Synchronize all the DataCore Server System Clocks with Each Other and Connected Hosts

Although the system clock has no influence on I/O (from Hosts or between DataCore Servers), some operations are potentially time-sensitive:

- SANsymphony Console Tasks that use a Scheduled Time trigger. Refer to Automated Tasks for more information.

- Continuous Data Protection retention time settings. Refer to Continuous Data Protection (CDP) for more information.

- 'Temporary' license keys, i.e., license keys that contain a fixed expiration date (e.g., for trial, evaluation, or migration purposes).

- 'Significant' differences between DataCore Server system clocks can generate unnecessary 'Out-of-synch Configuration' warnings after a reboot of a DataCore Server in a Server Group. Refer to Out-of-synch Configurations for more information.

It is also recommended to synchronize all of the Hosts' system clocks, as well as any SAN or network switch hardware clocks (if applicable), with the DataCore Servers. This is especially helpful when using DataCore's VSS on a Host, and more generally for any troubleshooting where a Host's system logs need to be checked against those of a DataCore Server. Many SAN events occur over very short periods (e.g., Fibre Channel or iSCSI disconnection and reconnection issues between Hosts and DataCore Servers).

Power Options

Select the High-Performance power plan under Control Panel\Hardware\Power Options. If it is not already set, the SANsymphony installer will attempt to set it. Note that some power options are also controlled directly from the server BIOS. Refer to the BIOS section for more information.

Startup and Recovery/System Failure

The SANsymphony installer will by default enable DataCore-preferred settings automatically. No additional configuration is required.

Virtual Memory/Page File

SANsymphony does not use the page file for any of its critical operations. The default size of the page file created by Windows is determined by the amount of Physical Memory installed in the DataCore Server along with the type of memory dump that is configured. This can lead to excessively large page files filling up the boot drive.

The SANsymphony installer changes the memory dump type to Kernel Memory Dump so that, if crash analysis of the DataCore Server is ever required, the correct type of dump file is generated.

Uncheck the ‘Automatically manage paging file size for all drives’ option and manually enter a custom page file size that is as large as is practically possible for your boot disk. To calculate an appropriate size, determine the amount of memory not used by SANsymphony’s cache and set the page file to this value or a smaller one, so that it fits on the boot disk while leaving comfortable space to store a dump file.
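The sizing rule above takes the smaller of two limits: the memory not used by SANsymphony's cache, and the space on the boot disk that remains after reserving room for a dump. The Python sketch below expresses that rule; the function name and the `dump_reserve` amount are assumptions for illustration, as the text does not specify how much space to keep for a dump.

```python
GIB = 1024 ** 3


def suggest_page_file_bytes(installed_ram: int, cache_size: int,
                            boot_free: int, dump_reserve: int) -> int:
    """Upper bound for a custom page file size, following the guidance above.

    installed_ram: physical memory in the DataCore Server (bytes)
    cache_size:    memory used by SANsymphony's cache (bytes)
    boot_free:     space on the boot disk available for the page file (bytes)
    dump_reserve:  space to keep free on the boot disk for a dump (bytes);
                   an assumed value, not one specified by DataCore
    """
    if not 0 <= cache_size <= installed_ram:
        raise ValueError("cache size must be between 0 and the installed RAM")
    non_cache_memory = installed_ram - cache_size          # memory not used by the cache
    fits_on_boot_disk = max(0, boot_free - dump_reserve)   # leave room for a dump file
    return min(non_cache_memory, fits_on_boot_disk)


# Hypothetical server: 256 GiB RAM, 200 GiB cache, 120 GiB free, 40 GiB kept for a dump
size = suggest_page_file_bytes(256 * GIB, 200 * GIB, 120 * GIB, 40 * GIB)
print(f"{size // GIB} GiB")
```

The Windows Virtual Memory dialog expects the custom size in MB, so divide the result by 1024 × 1024 before entering it.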

Enable User-Mode Dumps

User-mode dumps are especially useful for analyzing problems with the SANsymphony Management Console or other Windows consoles (i.e., non-critical graphical user interfaces), should any occur.

- Open regedit and browse to "HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\Windows Error Reporting".

- Add a new 'Key' under 'Windows Error Reporting' and rename it as 'LocalDumps'.

- Add a new REG_DWORD with a Name of 'DumpType' and a Data value of '2'.

- Close 'regedit' to save the changes.

Refer to the Collecting User-Mode Dumps documentation for more information.
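The same registry change can also be captured as a .reg file and imported, which is convenient when configuring several DataCore Servers. The Python sketch below only generates the file text (it can run on any machine, so nothing needs to be installed on the DataCore Server); the function name is illustrative. It writes the key and `DumpType` value from the steps above, where 2 selects a full dump, 1 a mini dump, and 0 a custom dump.

```python
REG_HEADER = "Windows Registry Editor Version 5.00"
LOCALDUMPS_KEY = (r"HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows"
                  r"\Windows Error Reporting\LocalDumps")


def build_localdumps_reg(dump_type: int = 2) -> str:
    """Return .reg file text that creates the LocalDumps key with a DumpType value."""
    if dump_type not in (0, 1, 2):  # 0 = custom, 1 = mini, 2 = full dump
        raise ValueError("DumpType must be 0, 1 or 2")
    return (
        f"{REG_HEADER}\r\n"
        "\r\n"
        f"[{LOCALDUMPS_KEY}]\r\n"
        # REG_DWORD data is written as "dword:" followed by 8 hexadecimal digits
        f'"DumpType"=dword:{dump_type:08x}\r\n'
    )


print(build_localdumps_reg())
```

To use it, save the text with `open("localdumps.reg", "w", encoding="utf-16", newline="")`, copy the file to the DataCore Server, and import it from an elevated prompt with `reg import localdumps.reg`. The result is the same key and value that the regedit steps create.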

DataCore Software and Microsoft Software Updates

Automatic or Manual Updates?

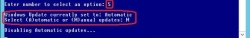

Never configure a SANsymphony node to apply updates automatically. Automatic updates can reboot the DataCore Server in an 'unmanaged' way, which could cause unexpected full recoveries or loss of access to Virtual Disks.

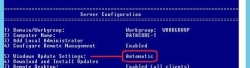

How to configure manual Windows updates:

- Open an administrative cmd or PowerShell window and run the command 'sconfig'.

- Locate the current 'Windows Update Settings'.

- If the current setting is 'Automatic', enter option '5'; when prompted, enter 'M' (for manual) and press Enter.

- Wait for the setting to be applied and when prompted, click OK.

- Verify that the Windows Update Setting value is now 'Manual'.

- Enter '15' to exit the 'sconfig' utility and close the window.

Windows Security Updates

Refer to the SANsymphony software release notes for any updates that are known to cause issues or that need additional consideration before installation. Generally, DataCore recommends that you always apply the latest security updates as they become available. Refer to Prerequisites for more information.

- DataCore recommends that you do not apply 'Preview' rollups unless specifically asked to do so by Technical Support.

- DataCore recommends that you do not apply third-party drivers distributed through Windows Update (for example, Fibre Channel drivers).

- Occasionally, Microsoft will make hotfixes available before they are distributed via normal Windows Update. If a hotfix is not listed in the 'SANsymphony Component Software Requirements' section of “DataCore™ SDS Prerequisites”, and it is not being distributed as part of a normal Windows software update, then do not apply it.

- For further advice, contact DataCore Technical Support.

Applying Windows Updates

Before installing Windows updates, DataCore recommends first stopping the SANsymphony server from the Management Console, then stopping the DataCore Executive Service and setting its startup type to "Manual".

Windows updates often involve updates to .NET, WMI, MPIO, and other key Windows features that SANsymphony relies on. Stopping the server and the service first ensures that the patch installation has no negative effect on the server being patched.

After patching, DataCore recommends rebooting the server (even if Windows Update does not prompt for it) and then checking for further updates. If no additional updates are required, start the DataCore Executive Service and change its startup type back to "Automatic" (or "Automatic (Delayed Start)"), as it was before the patch process.
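The service-related steps of this procedure map onto the built-in Windows `sc.exe` tool. The Python sketch below only builds and prints the command lines, so it can run on an admin workstation and the commands can be pasted into an elevated prompt on the DataCore Server, without installing anything there. The service name is a placeholder: read it from the 'Service name' field of the DataCore Executive Service in the Services console. Stopping the SANsymphony server from the Management Console, rebooting, and re-checking for updates remain manual steps.

```python
from __future__ import annotations


def pre_patch_commands(service_name: str) -> list[str]:
    """Stop the service and set its startup type to Manual before patching."""
    return [
        f'sc.exe stop "{service_name}"',
        # "demand" is sc.exe's name for the Manual startup type; the space after "start=" is required
        f'sc.exe config "{service_name}" start= demand',
    ]


def post_patch_commands(service_name: str, delayed: bool = False) -> list[str]:
    """Start the service again and restore Automatic (or Automatic (Delayed Start))."""
    start_type = "delayed-auto" if delayed else "auto"
    return [
        f'sc.exe start "{service_name}"',
        f'sc.exe config "{service_name}" start= {start_type}',
    ]


SERVICE = "<service name from the Services console>"  # placeholder, not a real name
print("\n".join(pre_patch_commands(SERVICE)))
```

Note that `sc.exe stop` returns as soon as the stop request is accepted, so confirm that the service shows as stopped before installing updates.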

Third-Party Software

DataCore recommends not installing third-party software on a DataCore Server. SANsymphony requires significant amounts of system memory and CPU processing, and it prevents certain system devices (e.g., disk devices) from being accessed by other software components installed on the DataCore Server, which may lead to unexpected errors in those components.

Keep the purpose of the DataCore Server in mind: do not run it as, for example, a Domain Controller or a mail server/relay alongside SANsymphony, as this will affect the overall performance and stability of the DataCore Server. DataCore recognizes that ‘certain types’ of third-party software are required to integrate the DataCore Server into the user’s network. These include:

- Virus scanning applications

- UPS software agents

- The server vendor’s own preferred hardware and software monitoring agents

In these few cases, as long as these applications or agents do not need exclusive access to components that SANsymphony needs to function correctly (i.e., disk, Fibre Channel, or iSCSI devices), it is possible to run them alongside SANsymphony.

Always consult the third-party software vendor about any additional memory their products require, and refer to the ‘Known Issues - Third-party Hardware and Software’ document for third-party software that has already been found to cause issues or to need additional configuration. DataCore Support may ask for third-party products to be removed to assist with troubleshooting.

Also, refer to the following documentation for more information:

- Changing Cache Size

- DataCore Server Memory Considerations

- Known Issues - Third-party Hardware and Software

- Qualified Software Components

Upgrading (or Reinstalling) Microsoft Windows

Versions of Windows that have passed qualification for a specific version of SANsymphony are listed in both the SANsymphony software release notes and the SANsymphony minimum requirements page. Never upgrade ‘in place’ to a newer version of the Windows operating system (for example, from Windows Server 2012 to Windows Server 2016, or from Windows Server 2012 to Windows Server 2012 R2). Even if the newer version is qualified by DataCore, the upgrade will stop the existing SANsymphony installation from running. Instead of an in-place upgrade, install the DataCore Server’s operating system ‘as new’. R2 versions of a Windows operating system also need to be qualified for use on a DataCore Server, and any that have passed qualification for a specific version of SANsymphony are listed in the same two places.

Also, refer to the following documentation for more information:

- How to reinstall or upgrade the DataCore Server's Windows Operating System

- Prerequisites

- Software Downloads and Documentation - SANsymphony release notes

A Summary of Recommendations for the Operating System

The Windows Operating System

- Use non-OEM versions of Microsoft Windows where possible.

- Only use 'R2' versions of Windows that are listed in the release notes or on the Prerequisites page of the DataCore website.

Windows System Settings

- Synchronize the system clocks between all DataCore Servers in the same Server Group, any associated Replication Groups, and all Hosts.

- Use the High-Performance power plan.

- Enable user-mode dumps for any errors that may occur when using the SANsymphony Management Console.

- Do not use the default page file settings; set the page file size to a value equal to or smaller than the memory NOT used by SANsymphony’s cache.

Windows Service Packs, Security Updates, Software Updates, and Rollups

- Do not configure the DataCore Server to apply updates automatically; always apply Windows updates manually.

- Always apply the latest security, software updates, and/or monthly rollup updates as they become available.

- Do not apply preview rollups.

- Do not apply hotfixes that are not included as part of a normal Windows Update Service and are not listed in the SANsymphony minimum requirements web page.

- Do not apply any Windows updates for (third-party) drivers without first contacting DataCore Technical Support.

- Do not install Windows updates without first stopping the SANsymphony node and the DataCore Executive Service.

Installing Third-Party Software on the DataCore Server

It is recommended not to install third-party software on a DataCore Server.

Upgrading (or Reinstalling) the Windows Operating System

- Only use versions of the Windows operating system that have been qualified.

- Never upgrade ‘in place’ to a newer version of the Windows operating system.

- Only use R2 versions of Windows that have been qualified.

- Never upgrade a version of Windows ‘in place’ to its R2 equivalent.

Upgrading the SANsymphony Software

All the instructions and considerations for updating an existing version of SANsymphony, or for upgrading to a newer major version, are documented in the SANsymphony Software release notes.

The SANsymphony Software release notes are available either as a separate download or come bundled with the SANsymphony software.

It is recommended that upgrades are performed by a SANsymphony DCIE.

Also, refer to the following documentation for more information:

TCP/IP Networking

SANsymphony’s Console, the VMware vCenter Integration component, Replication, and the Performance Recording function (when using a remote SQL Server) each use their own separate TCP/IP session.

To avoid unnecessary network congestion and delay, and to avoid losing more than one of these functions at once should problems occur with one or more network interfaces, we recommend using a separate network connection for each function.

The SANsymphony Server Group

The Controller Node

Where a Server Group has two or more DataCore Servers in it, one of them will be designated as the controller node for the whole group.

The controller node is responsible for managing what is displayed in the SANsymphony Console for all DataCore Servers in the Server Group – for example, receiving status updates for the different objects in the configuration for those other DataCore Servers (e.g. Disk Pools, Virtual Disks, and Ports, etc.), including the posting of any Event messages for those same objects within the SANsymphony console.

The controller node is also responsible for the management and propagation of any configuration changes made in the SANsymphony Console regardless of which DataCore Server’s configuration is being modified and makes sure that all other DataCore Servers in the Server Group always have the most recent and up-to-date changes.

The ‘election’ of which DataCore Server becomes the controller node is made automatically by the SANsymphony software and takes place whenever:

- A DataCore Server is removed from or added to the existing Server Group

- The existing controller node is shut down

- The existing controller node becomes ‘unreachable’ via the TCP/IP network to the rest of the Server Group (e.g., an IP Network outage, or a clock change sufficient to require a reconnection of the WCF connection between nodes).

The decision on which DataCore Server becomes the controller node is decided automatically between all the Servers in the Group and cannot be manually configured.

It is also important to understand that the controller node does not manage any Host, Mirror, or Back-end I/O (i.e., in-band connections) for other DataCore Servers in the Server Group. In-band I/O is handled by each DataCore Server independently of the other Servers in the Server Group, whether or not it is the elected controller. Nor does the controller node send or receive Replication data configured for another DataCore Server in the same Server Group, although it manages all Replication configuration changes and Replication status updates whether or not it is the Source Replication Server.

The Connection Interface Setting

Except for iSCSI I/O, all other TCP/IP traffic sent and received by a DataCore Server is managed by the SANsymphony Connection Interface setting.

This includes:

- When applying SANsymphony configuration updates to all servers in the same Server Group.

- Any UI updates while viewing the SANsymphony Console, including state changes and updates for all the different objects within the configuration (e.g., Disk Pools, Virtual Disks, Snapshots and Ports, etc.).

- Configuration updates and state information to and from remote Replication Groups.

- Configuration updates when using SANsymphony’s VMware vCenter Integration component.

- SQL updates when using a remote SQL server for Performance Recording.

The Connection Interface’s default setting (‘All’) means that SANsymphony will use any available network interface on the DataCore Server for its hostname resolution; which interface is used is determined by the Windows operating system and how it has been configured and connected to the existing network.

It is possible to change this setting and select an explicit network interface (i.e., IP Address) to use for hostname resolution instead, but this requires that the appropriate network connections and routing tables have been set up correctly and are in place. SANsymphony will not automatically retry other network connections if it cannot resolve to a hostname using an explicit interface.

We recommend leaving the setting to ‘All’ and using the appropriate ‘Hosts’ file or DNS settings to control hostname resolution.

If this is set to a specific IP address and that address disappears from the system, no configuration changes are possible (including changing the address setting) until the address reappears.

Running the SANsymphony Management Console

On the DataCore Server

Even if the SANsymphony Management Console is used to log into one of the other DataCore Servers in the group (i.e. an ‘unelected node’) that other server will still connect directly to the controller node to make configuration changes or to display the information in its own SANsymphony Console.

This means that all DataCore Servers in the same Server Group must have a routable TCP/IP connection to each other and working mutual name resolution, so that if the controller node ‘moves’ to a different server, the new controller node can also connect to all the remaining DataCore Servers in the group.
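One way to spot-check routable connections and name resolution is to resolve each peer's name and open a TCP connection to it. The Python sketch below uses only the standard `socket` module; the function name is illustrative, and the `port` argument is a placeholder, because the TCP ports SANsymphony actually needs are listed in the ‘Windows Security Settings Disclosure’ and are not reproduced here. It is best run from a management workstation; on the DataCore Servers themselves, the built-in PowerShell cmdlet `Test-NetConnection` with `-ComputerName` and `-Port` performs a similar check without installing anything.

```python
import socket


def check_peer(hostname: str, port: int, timeout: float = 3.0) -> dict:
    """Resolve `hostname` (IPv4) and try a TCP connection to `port` on the resolved address."""
    result = {"host": hostname, "address": None, "tcp_ok": False, "error": None}
    try:
        result["address"] = socket.gethostbyname(hostname)  # uses the Hosts file / DNS
    except OSError as exc:  # socket.gaierror is a subclass of OSError
        result["error"] = f"name resolution failed: {exc}"
        return result
    try:
        with socket.create_connection((result["address"], port), timeout=timeout):
            result["tcp_ok"] = True
    except OSError as exc:  # refused, unreachable, or timed out
        result["error"] = f"TCP connection to port {port} failed: {exc}"
    return result
```

A successful result only shows that the machine running the check can reach that peer. Mutual name resolution means repeating the check from every DataCore Server towards every other one, in both directions.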

On a Workstation

Workstations that only have the SANsymphony Console component installed cannot become ‘controller nodes’ and never directly send or receive configuration information for any Server Group they connect to. Just like an ‘unelected’ node, the workstation will connect to the controller node to make configuration changes or to display the information in its own SANsymphony Console.

This means that even if the workstation is on a separate network segment from the DataCore Servers (e.g., in a different VLAN), it must still be able to send and receive TCP/IP traffic to and from all the DataCore Servers in the Server Group, and mutual name resolution must be in place.

Other IP-based Protocols

While it is technically possible to share iSCSI I/O, Replication data, and the SANsymphony Console’s inter-node traffic over the same TCP/IP connection, we recommend using dedicated and separate network interfaces for each iSCSI port, both for performance and to avoid losing more than one of these functions at once.

NIC Teaming and Spanning Tree Protocols (STP/RSTP/MSTP)

The DataCore Server 'Management' Connection

For all TCP/IP traffic where Multipath I/O protocols are not being used (for example, non-iSCSI traffic), we recommend using NIC teaming to provide redundant network paths to other DataCore Servers.

We also recommend that each teamed NIC is on its own separate network and that ‘failover’ mode is used rather than ‘load balancing’. Inter-node TCP/IP communication has no specific performance requirement, and using ‘failover’ mode makes configuring and managing the network connections and switches simpler. It also makes troubleshooting any future connection problems easier.

iSCSI Connections

Refer to Fibre Channel and iSCSI Connections for more information.

Replication

Refer to Replication Settings for more information.

Windows Hosts File / DNS Settings

There is no preference between using the local ‘Hosts’ file or DNS for DataCore Server hostname resolution. Either method can be used; however, if DNS is used and becomes unavailable, this will affect the ability of the SANsymphony nodes to communicate with each other and of remote management consoles to connect.

However, DataCore does recommend using hostname resolution rather than plain IP addresses. This makes any IP address changes, planned or unexpected, easier to manage: you simply update the ‘Hosts’ file or DNS entries instead of ‘reconfiguring’ a Replication group or a remote SQL Server connection for Performance Recording (i.e., manually disconnecting and reconnecting), which is disruptive.

When using a ‘Hosts’ file, do not add any entries for the local DataCore Server, only for the ‘remote’ DataCore Servers. Do not add multiple, different entries for the same server (e.g., entries with a different IP address and/or server name for the same server), as this will cause problems when trying to (re)establish network connections. The server name entered into the ‘Hosts’ file should match the "Computer name" for the node in the DataCore Management Console.
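The two ‘Hosts’ file mistakes described above can be checked mechanically. The hypothetical Python helper below reads the text of a hosts file and reports entries for the local server and names that map to more than one address. It compares short names case-insensitively (so ‘SSV2’ and ‘ssv2.corp.local’ count as the same server) and skips ‘localhost’. Both choices are assumptions of this sketch. It does not check that names match the "Computer name" shown in the Management Console.

```python
from __future__ import annotations

from collections import defaultdict


def _short(name: str) -> str:
    """Case-insensitive short hostname, so 'SSV2' and 'ssv2.corp.local' match."""
    return name.lower().split(".", 1)[0]


def find_hosts_issues(hosts_text: str, local_name: str) -> list[str]:
    """Report local-server entries and names mapped to several IP addresses."""
    issues: list[str] = []
    addresses = defaultdict(set)  # short name -> set of IP addresses
    local = _short(local_name)
    for line_no, raw in enumerate(hosts_text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        address, *names = line.split()
        for name in names:
            short = _short(name)
            if short == "localhost":
                continue
            if short == local:
                issues.append(f"line {line_no}: entry for local server '{name}'")
            else:
                addresses[short].add(address)
    for name, ips in sorted(addresses.items()):
        if len(ips) > 1:
            issues.append(f"'{name}' maps to multiple addresses: {', '.join(sorted(ips))}")
    return issues
```

For example, on a DataCore Server named SSV1, a hosts file containing `10.0.0.10 SSV1`, `10.0.0.11 SSV2`, and `10.0.0.12 ssv2.corp.local` would produce one issue for the local entry and one for ssv2's two addresses.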

Firewalls and Network Security

The SANsymphony software installer will automatically reconfigure the DataCore Server’s Windows firewall settings to allow it to be able to communicate with other DataCore Servers in the same Server or Replication groups. No additional action should be required.

If using an ‘external’ firewall solution or another method to secure the IP networks between servers then refer to the ‘Windows Security Settings Disclosure’ for the full list of TCP Ports required by the DataCore Server and ensure that connections are allowed through.

Also, refer to Windows Security Settings Disclosure.

Summary of Recommendations for TCP/IP Networking

iSCSI Connections

Refer to iSCSI Connections for more information on iSCSI.

Other Required TCP/IP Connections

- Leave the DataCore Server’s Connection Interface setting as default (‘All’).

- Use either ‘Hosts’ or DNS settings to control all hostname resolutions for the DataCore Server.

- Use a managed ‘Hosts’ file (or DNS) instead of just using IP addresses.

- Any Windows updates and security fixes that are currently available from Microsoft’s Windows Update Service should be applied whenever possible.

- For firewall and other network security requirements, refer to ‘Windows Security Settings Disclosure’.

TCP/IP Network Topology

- Ensure DataCore Servers in the same Server Group always have routable TCP/IP connections to each other.

- Ensure Workstations that are only using the SANsymphony Console always have a routable TCP/IP connection to all DataCore Servers in the Server Group.

- Ensure mutual name resolution works for DataCore Servers and management Workstations.

- If using SANsymphony’s VMware vCenter Integration component, ensure the server running the vCenter always has a routable TCP/IP connection to all DataCore Servers in the Server Group.

- Do not route ‘non-iSCSI’ TCP/IP traffic over iSCSI connections.

- Use dedicated and separate TCP/IP connections for each of the following:

- The SANsymphony Console

- Replication transfer

- Performance Recording when connecting to a remote SQL server

- Use NIC teaming, in ‘failover’ mode, for inter-node connection redundancy for non-iSCSI TCP/IP traffic, with a separate network for each NIC.

- Use independent network switches for redundant TCP/IP networks used for inter-node communication between DataCore Servers.

Major SANsymphony Features

Disk Pools

Please note that the best practices for Disk Pools that are documented here may not always be practical (or possible) to apply to existing Disk Pools in your configuration but should be considered for all new Disk Pools.

These recommendations also target optimal performance rather than minimal capacity consumption: a larger storage allocation unit (SAU) means less additional work for the Disk Pool to keep its internal indexes up to date, which results in better overall performance within the Disk Pool, especially for very large configurations.

Although a larger SAU size often means that more capacity is initially allocated by the Disk Pool, newer Host writes are less likely to need new SAUs and are more likely to be written to one of the already allocated SAUs instead. The downsides of a larger SAU size are less granularity in data tiering, more overhead consumed by differential snapshots, and a lower chance of successfully trimming (reclaiming) allocations on fragmented file systems.

The following applies to all types of Disk Pools, including normal, shared, and SMPA Pools.

Also, refer to the About Disk Pools documentation for more information.

The Disk Pool Catalog

The Disk Pool Catalog is an index used to manage information about each Storage Allocation Unit's location, its allocation state, and its relationship to the storage source it is allocated for within the Disk Pool. The Catalog is stored on one or more of the physical disks in the Disk Pool (which are also used for SAU data), but in a location that only the Disk Pool driver can access. Each DataCore Server's Disk Pool has its own independent Catalog, regardless of whether the Disk Pool is shared. Information held in the Catalog includes:

- Whether an SAU is allocated to a Storage Source or not

- The Physical Disk within the Disk Pool where an allocated SAU can be located

- The Storage Source an allocated SAU 'belongs' to

- The Virtual Disk's Logical Block Address (LBA) that the allocated SAU represents when accessed by a Host.

Whenever an SAU is allocated, reclaimed, or moved to a new physical disk within the same Disk Pool, the Catalog is updated.

Catalog updates must happen as fast as possible so as not to interfere with other I/O within the Disk Pool. For example, if the Catalog is being updated for one SAU allocation and another Catalog update for a different SAU is required, the second update has to wait for a short time before its own index can be updated. This can be noticeable when many SAUs need to be allocated within a very short time. While the Disk Pool tries to be as efficient as possible when handling multiple updates for multiple SAUs, there is an additional overhead while the Catalog is updated for each new allocation before the I/O written to the SAU is considered complete. In extreme cases, this can result in unexpected I/O latency during periods of significant SAU allocation.

Therefore, we recommend that the Catalog be located on the fastest disk possible within the Disk Pool. As of SANsymphony 10.0 PSP9, the Catalog location is proactively maintained per Disk Pool so that it resides on the fastest storage.

DataCore therefore recommends that all Disk Pools have a minimum of 2 physical disks in tier 1, which are also used to store the primary and secondary Disk Pool Catalogs, and that these physical disks are as fast as possible.

Because the Catalog is located within the first 1 GB of the physical disk used to store it, and because every physical disk in a Disk Pool must have enough free space to allocate at least one SAU, no physical disk may be smaller than 2 GB: 1 GB for the Catalog itself and 1 GB for the largest SAU possible within the Disk Pool (see Storage Allocation Unit Size below).

Where is the Catalog Located?

The Catalog is always stored within the first 1 GB of a physical disk's LBA space; which disk holds it depends on the SANsymphony release.

In all releases, there is only ever a maximum of two copies of the Catalog in a Disk Pool at any time.

SANsymphony 10.0 PSP9 and later

When a new Disk Pool is created, the Catalog is always located on the first physical disk added. If a second physical disk is added to the Disk Pool, a backup copy of the Catalog is stored on that second physical disk, and the Catalog on the first physical disk is then considered the primary copy. If further disks are added to the pool, the Catalog copies may be moved so that they are located on the smallest disks in the lowest-numbered tier of the pool.
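The PSP9-and-later preference can be pictured as a sort: lowest-numbered tier first, then smallest disk. The Python sketch below is an illustrative model of that stated rule, not SANsymphony's actual implementation; the class and function names are hypothetical, and which of the two chosen disks holds the primary copy is not modeled, because the text does not specify it.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PoolDisk:
    name: str
    tier: int       # lower number = higher tier; tier 1 is the fastest
    size_gib: int


def preferred_catalog_disks(disks: list[PoolDisk]) -> list[PoolDisk]:
    """Return up to two disks preferred for the Catalog copies.

    Models the rule described above: lowest-numbered tier first, then smallest
    disk. Ties keep the order in which disks were listed (sorted() is stable).
    """
    ranked = sorted(disks, key=lambda d: (d.tier, d.size_gib))
    return ranked[:2]


pool = [PoolDisk("disk1", 2, 4000), PoolDisk("disk2", 1, 1900),
        PoolDisk("disk3", 1, 960), PoolDisk("disk4", 1, 3800)]
print([d.name for d in preferred_catalog_disks(pool)])  # ['disk3', 'disk2']
```

Because the text says the copies "may be moved", treat this as the direction the software works towards, not a guarantee of where the Catalog is at any moment.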

Before 10.0 PSP9

When a new Disk Pool is created, the Catalog is always located on the first physical disk added. If a second physical disk is added to the Disk Pool, a backup copy of the Catalog is stored on that second physical disk, and the Catalog on the first physical disk is then considered the primary copy. The tier assignment of a physical disk does not influence where the Catalog is stored. Any further physical disks added to the Disk Pool are not used to store further copies.

Catalog Location and Pool Disk Mirrors

If the physical disk that holds the primary Catalog is mirrored within the Disk Pool, the physical disk used as the mirror will then hold the backup Catalog. If the backup Catalog was on another physical disk in the Disk Pool before the mirroring took place, that other (non-mirrored) physical disk no longer holds the backup Catalog. Also, refer to the Mirroring Pool Disks documentation for more information.

How the Catalog Location is Managed during Disk Decommissioning

If the physical disk that holds the primary copy of the Catalog is removed, the backup copy on the second physical disk is automatically promoted to primary and, if a disk is available, a new backup copy is written to the next available physical disk in the lowest-numbered tier of the Disk Pool. If the physical disk that holds the backup copy of the Catalog is removed, a new backup copy is written to the next available physical disk in the lowest-numbered tier of the Disk Pool; the location of the primary copy remains unchanged.

The system always tries to keep all copies of the Catalog in the lowest available tier number, usually tier 1. Users cannot move the Catalog to a physical disk of their choice within a Disk Pool. Also, refer to the Removing Physical Disks documentation for more information.

How the Catalog Location is Managed during Physical Disk I/O Failures

If an I/O error occurs while reading or updating the primary Catalog, the backup Catalog becomes the new primary Catalog and, if another physical disk is available, that disk becomes the new backup Catalog location according to the rules above.
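Disk removal and I/O failure follow the same promotion rule, which the following sketch models. It is a hypothetical illustration under stated assumptions: `handle_lost_disk`, `next_backup`, the `(tier, name)` tuples, and breaking ties within a tier by list order are inventions for this example, not SANsymphony APIs.

```python
from typing import Optional

Disk = tuple[int, str]  # (tier number, disk name); a hypothetical representation


def next_backup(candidates: list[Disk], exclude: set[str]) -> Optional[str]:
    """Pick the next available disk in the lowest-numbered tier; list order breaks ties."""
    free = [(tier, name) for tier, name in candidates if name not in exclude]
    if not free:
        return None
    return min(free, key=lambda disk: disk[0])[1]


def handle_lost_disk(primary: str, backup: Optional[str], lost: str,
                     candidates: list[Disk]) -> tuple[str, Optional[str]]:
    """Return the (primary, backup) Catalog locations after `lost` is removed or fails."""
    if lost == primary:
        if backup is None:
            raise RuntimeError("no surviving Catalog copy")
        primary = backup  # the backup copy is promoted to primary
        backup = next_backup(candidates, {primary, lost})
    elif lost == backup:
        # The primary copy stays where it is; only a new backup is written.
        backup = next_backup(candidates, {primary, lost})
    return primary, backup


disks = [(1, "ssd1"), (1, "ssd2"), (1, "ssd3"), (2, "hdd1")]
print(handle_lost_disk("ssd1", "ssd2", "ssd1", disks))  # ('ssd2', 'ssd3')
print(handle_lost_disk("ssd1", "ssd2", "ssd2", disks))  # ('ssd1', 'ssd3')
```

Losing a disk that holds neither copy leaves both locations unchanged, and a pool with only two disks ends up with no backup location until another disk is added.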

Storage Allocation Unit Size

The SAU size is chosen when the Disk Pool is created and cannot be changed afterwards, which is why some of these best practices are not always easy to apply to existing Disk Pools.

Each SAU represents a range of contiguous Logical Block Addresses (LBAs) equal in size to the SAU and, once allocated, is used for all further reads and writes to that LBA range of a Virtual Disk's storage source. Whenever the Host sends a write I/O to an LBA that does not fall within an SAU already allocated to that Virtual Disk, the Disk Pool allocates a new SAU.

Each allocated SAU takes the same amount of space in the Disk Pool's Catalog (see the previous section), regardless of the SAU size chosen when the Disk Pool was created. Because the Catalog has a maximum size, the larger the SAU size, the more physical disk capacity can be added to a Disk Pool.

The Disk Pool's Catalog is updated each time an SAU is allocated. The smaller the SAU size, the more likely it is that a new write I/O falls outside the LBA range of already allocated SAUs, and so the more often the Catalog needs to be updated. This is why the previous section, The Disk Pool Catalog, recommends placing the Catalog on the fastest disk possible, so that Catalog updates complete as quickly as possible. Beyond that, the right SAU size largely depends on the use case. Larger SAU sizes cause fewer Catalog updates but concentrate data on fewer physical disks and make space allocation and tiering less granular. The table below gives some guidance on SAU size selection.
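To make the relationship between SAU size and Catalog updates concrete, the sketch below maps LBAs to SAU indexes and counts how many new SAUs a write pattern would allocate. It is a simplified model, assuming 512-byte logical blocks and binary (MiB) units; `sau_index` and `catalog_updates` are hypothetical helpers, not part of SANsymphony.

```python
MIB = 1024 * 1024
BLOCK_SIZE = 512  # assumed logical block size in bytes


def sau_index(lba: int, sau_size: int) -> int:
    """Index of the SAU whose LBA range contains `lba` (sau_size in bytes)."""
    return (lba * BLOCK_SIZE) // sau_size


def catalog_updates(write_lbas: list[int], sau_size: int) -> int:
    """Count new SAU allocations, and hence Catalog updates, for a write pattern."""
    allocated: set[int] = set()
    for lba in write_lbas:
        # Writes landing in an already allocated SAU add nothing to the set;
        # a write outside every allocated SAU allocates a new one.
        allocated.add(sau_index(lba, sau_size))
    return len(allocated)


# Eight writes spaced 8 MiB apart across a 64 MiB range of one Virtual Disk.
writes = [i * 8 * MIB // BLOCK_SIZE for i in range(8)]
print(catalog_updates(writes, 4 * MIB))    # 8
print(catalog_updates(writes, 128 * MIB))  # 1
```

The same eight writes cost eight Catalog updates with 4 MB SAUs but only one with 128 MB SAUs, at the price of allocating far more space than was written.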

| SAU Size | Maximum Pool Size | Use Cases |
|---|---|---|
| 4 MB | 32 TB | Highly space-efficient; highest random I/O; legacy Linux/Unix filesystems; small snapshot pool |
| 8 MB | 64 TB | Database workloads; medium snapshot pool |
| 16 MB | 128 TB | High random I/O; large snapshot pool |
| 32 MB | 256 TB | VDI workloads; transactional file services |
| 64 MB | 512 TB | Medium random I/O |
| 128 MB | 1 PB | General purpose; generic VMware; generic Hyper-V |
| 256 MB | 2 PB | General file services |
| 512 MB | 4 PB | Low random I/O |
| 1 GB | 8 PB | Maximum capacity; high sequential I/O; archival storage; large file services |
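Every row of the SAU size table has the same ratio between maximum pool size and SAU size: 32 TB / 4 MB = 8,388,608 (2^23), which fits a Catalog with a fixed maximum number of SAU entries. The sketch below reproduces the table from that ratio and shows how many SAUs a pool of a given capacity has to track. The 2^23 constant is derived from the table under an assumption of binary units; it is not an officially documented limit, and both helpers are hypothetical.

```python
MAX_SAUS = 2 ** 23  # implied by the table: 32 TB / 4 MB = 8,388,608 SAUs
UNITS = ["MB", "GB", "TB", "PB"]


def max_pool_size(sau_mb: int) -> str:
    """Largest pool the Catalog can address for a given SAU size (binary units)."""
    size, unit = sau_mb * MAX_SAUS, 0
    while size >= 1024 and size % 1024 == 0 and unit < len(UNITS) - 1:
        size //= 1024
        unit += 1
    return f"{size} {UNITS[unit]}"


def sau_count(capacity_tb: int, sau_mb: int) -> int:
    """Number of SAUs needed to fill `capacity_tb` of pool capacity."""
    return capacity_tb * 1024 * 1024 // sau_mb


for sau_mb in (4, 128, 1024):
    print(f"{sau_mb} MB SAU -> {max_pool_size(sau_mb)} max")
print(sau_count(100, 4), sau_count(100, 1024))  # 26214400 102400
```

A fully allocated 100 TB pool tracks over 26 million SAUs at 4 MB but only about 100,000 at 1 GB, which is why larger SAUs make pool-wide analysis cheaper.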

In addition, the more SAUs a Disk Pool contains, the more work is needed to analyze them, whether for Migration Planning, for reclamation within the Disk Pool, or for GUI updates. Hence, the larger the SAU, the fewer resources these tasks require.

Also consider the impact of SAU size on reclamation when choosing it. Previously allocated SAUs are only reclaimed when they consist entirely of "zero" writes; any non-zero write keeps the SAU allocated. The larger the SAU, the more likely it is to contain at least one non-zero write and therefore to remain allocated.
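The effect of SAU size on reclamation can be shown with a short count of all-zero SAUs over the same data. This is an illustrative model, assuming data is tracked in 4 KiB blocks and that a single non-zero block is enough to keep its SAU allocated; `reclaimable_saus` is a hypothetical helper.

```python
def reclaimable_saus(nonzero_blocks: set[int], total_blocks: int, blocks_per_sau: int) -> int:
    """Count SAUs that hold only zeroes, given the indexes of non-zero blocks."""
    # Any SAU containing at least one non-zero block must stay allocated.
    retained = {block // blocks_per_sau for block in nonzero_blocks}
    total_saus = -(-total_blocks // blocks_per_sau)  # ceiling division
    return total_saus - len(retained)


# 128 MiB of 4 KiB blocks (32,768 blocks) with one non-zero block at the 5 MiB mark.
dirty = {5 * 256}
print(reclaimable_saus(dirty, 32768, 1024))   # 4 MiB SAUs: 31 of 32 reclaimable
print(reclaimable_saus(dirty, 32768, 32768))  # one 128 MiB SAU: 0 reclaimable
```

With 4 MB SAUs almost all of the zeroed space can be returned to the pool; with a single 128 MB SAU, one stray non-zero block keeps all of it allocated.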

It is recommended that mirrored Virtual Disks use Disk Pools with the same SAU size for each of their storage sources.

Physical Disks in a Disk Pool

The Number of Physical Disks

The more physical disks there are in a Disk Pool, the more I/O can be distributed across them at the same time, giving an overall performance gain. Performance increases almost linearly up to about 24 physical disks, after which the gain flattens out. However, price and capacity may be significant factors when deciding between many small, fast disks (optimal for performance) and fewer large, slower disks (optimal for price).

Size the number of disks in a Disk Pool to meet the expected performance requirements, with an allowance of 50% (or more) for future growth. It is not uncommon for a Disk Pool that was sized appropriately to eventually suffer as its load increases over time.
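The 50% growth allowance translates into a simple disk-count calculation. The sketch below is a rough planning aid, assuming the workload can be expressed as an IOPS target and that each disk delivers a known, steady IOPS figure; `disks_needed` and the example numbers are hypothetical.

```python
import math


def disks_needed(required_iops: float, iops_per_disk: float, growth: float = 0.5) -> int:
    """Disks needed for today's load plus a growth allowance (50% by default)."""
    return math.ceil(required_iops * (1 + growth) / iops_per_disk)


print(disks_needed(20_000, 1_500))  # 20
print(disks_needed(10_000, 180))    # 84
```

Because the gain flattens out beyond about 24 disks, a result well above that (as in the second example) suggests choosing faster disks rather than simply adding more of them.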

Backend Device Multipathing

SANsymphony uses a generic multipathing solution to access Fibre-Channel backends. It does not support ALUA for path management and operates in a simple failover-only mode, with the option to select a preferred path to return to after a path failure is corrected. With more than two paths per device, this can lead to extended failover times and, eventually, to pools failing temporarily. Do not expose more than two paths to a backend device, to ensure that failover completes within the timeout period.

If ALUA is required for Fibre-Channel-attached backend devices, the system can be configured to use non-DataCore Fibre-Channel drivers with MPIO and ALUA support. Path management and monitoring must then be performed with appropriate third-party tools.

For iSCSI-attached backends, SANsymphony uses the built-in iSCSI initiator of the Windows OS and relies on the Windows MPIO framework for path failover. This allows round-robin access and ALUA for non-SMPA pools.

SMPA pools require the multipathing to operate in Failover-Only mode, whether iSCSI or a 3rd party Fibre-Channel connection is in use.

Configuring RAID sets in a Disk Pool

Refer to RAID Controllers and Storage Arrays for more information.

Auto-Tiering Considerations

Whenever a write I/O from a Host causes the Disk Pool to allocate a new SAU, the SAU is always taken from the highest-performance (lowest-numbered) available tier listed in the Virtual Disk's tier affinity; if that tier has no free space, the allocation falls to the next tier, and so on. An allocated SAU only moves to a lower tier if its data temperature drops sufficiently. As a result, the higher tiers in a Disk Pool can end up permanently full, unnecessarily forcing new allocations onto lower tiers.
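The allocation order can be expressed as a short lookup, including the out-of-affinity fallback the guide describes for when no in-affinity tier has space. This is a hypothetical model: `allocation_tier`, the list of affinity tiers, and the `free_saus` dictionary of free SAUs per tier are illustrative assumptions, and the real allocator also considers factors not shown here.

```python
from typing import Optional


def allocation_tier(affinity: list[int], free_saus: dict[int, int]) -> tuple[Optional[int], bool]:
    """Return (tier, in_affinity) for a new SAU allocation (hypothetical model)."""
    # Highest-performance (lowest-numbered) tier in the Virtual Disk's affinity first.
    for tier in sorted(affinity):
        if free_saus.get(tier, 0) > 0:
            return tier, True
    # No space in any in-affinity tier: fall back to the remaining tiers.
    for tier in sorted(free_saus):
        if tier not in affinity and free_saus[tier] > 0:
            return tier, False
    return None, False  # no free SAU anywhere in the pool


free = {1: 0, 2: 10, 3: 500}
print(allocation_tier([1, 2], free))  # (2, True)
print(allocation_tier([1], free))     # (2, False)
```

The second call shows how a full tier 1 pushes a tier-1-only Virtual Disk into an out-of-affinity allocation, which is the situation that reserving free space in the upper tiers is meant to avoid.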

When no tier that matches the Virtual Disk's Storage Profile has free space, new allocations go to out-of-affinity tiers instead. SAUs allocated outside a Virtual Disk's configured tiers only migrate when they can move back to a tier within affinity. Before PSP15, no temperature (heat) migrations or in-tier rebalancing would occur until that had happened. PSP15 introduced fair migration queuing so that the migration plan reacts faster and better to user- and access-driven changes. The system now has several concurrent migration buckets, which are continuously serviced in a fair manner. The buckets are for:

- Affinity Migrations

- Encryption Migrations

- Config Settings Migrations

- Decryption Migrations

- Temperature Migrations

- Rebalancing Migrations
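The effect of fair queuing can be illustrated by interleaving pending work from each bucket. This sketch assumes that "fair" means a simple round-robin over the buckets listed above; the actual PSP15 scheduling policy is not described here, and `fair_order` and the migration names are hypothetical.

```python
from collections import deque

BUCKETS = ["Affinity", "Encryption", "Config Settings",
           "Decryption", "Temperature", "Rebalancing"]


def fair_order(queues: dict[str, list[str]]) -> list[str]:
    """Interleave pending migrations so that no bucket starves the others."""
    pending = {bucket: deque(queues.get(bucket, [])) for bucket in BUCKETS}
    order: list[str] = []
    while any(pending.values()):
        for bucket in BUCKETS:  # visit every bucket once per round
            if pending[bucket]:
                order.append(pending[bucket].popleft())
    return order


queues = {"Affinity": ["a1", "a2", "a3"], "Temperature": ["t1"], "Rebalancing": ["r1", "r2"]}
print(fair_order(queues))  # ['a1', 't1', 'r1', 'a2', 'r2', 'a3']
```

Under the pre-PSP15 behaviour, the temperature and rebalancing migrations would have waited until every affinity migration had completed; here they proceed alongside them.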

Use the Disk Pool's 'Preserve space for new allocations' setting to ensure that a Disk Pool always tries to move previously allocated, low-temperature SAUs down to the lower tiers without relying on temperature migrations alone. DataCore recommends initially setting this value to 20% and then adjusting it according to your I/O patterns.

All tiers in a Disk Pool should be populated; no tier should be left without physical disks assigned. Also, refer to Preserving Tier Space for New Allocations.

Summary of Recommendations for Disk Pools

The Disk Pool Catalog

- DataCore recommends that all new Disk Pools have two physical disks in tier 1 for storing the primary and secondary Disk Pool Catalogs, and that these physical disks be as fast as possible.

Storage Allocation Unit Size

- Use an SAU size according to your intended use case and the target size of the pool. Pools for snapshots and legacy Unix/Linux filesystems may benefit in efficiency from smaller SAU sizes.

The Number of Physical Disks in a Disk Pool

- The more physical disks there are in a Disk Pool, the more I/O can be distributed across them at the same time; the overall performance gain is almost linear up to about 24 disks.

Configuring RAID Sets for Use in a Disk Pool

- The information on how to configure RAID sets for use in a Disk Pool is covered in the chapter 'RAID Controllers and Storage Arrays'.

Auto-Tiering Considerations

- Use the Disk Pool's 'Preserve space for new allocations' setting to ensure that a Disk Pool always tries to move previously allocated, low-temperature SAUs down to the lower tiers without relying on temperature migrations alone. DataCore recommends initially setting this value to 20% and, after a period of observation, adjusting it according to your I/O patterns.

Replication Settings

Data Compression

When Data Compression is enabled, data is not compressed while it is in the buffer but within the TCP/IP stream as it is sent to the remote DataCore Server. This may increase throughput to the remote DataCore Server where the link between the source and destination servers is limited or is a bottleneck. It is difficult to know in advance whether the extra time needed to compress the data (and decompress it on the remote DataCore Server) will result in faster replication transfers than using no compression at all. For replication links of 10 Gbit/s or faster, compressing and decompressing usually takes longer than transmitting the raw data.
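A back-of-the-envelope model shows why compression helps on slow links but can hurt on fast ones. The sketch assumes compression and decompression run at the same throughput and do not overlap with the transfer (a pessimistic simplification); the function names, the 2:1 ratio, and the 1 GB/s codec speed are hypothetical inputs, so the comparison test described in the guide remains the real check.

```python
def raw_seconds(size_gb: float, link_gbit: float) -> float:
    """Time to send uncompressed data over the link."""
    return size_gb * 8 / link_gbit


def compressed_seconds(size_gb: float, link_gbit: float,
                       ratio: float, codec_gb_per_s: float) -> float:
    """Compress at the source, send, then decompress at the target (no overlap assumed)."""
    codec = 2 * size_gb / codec_gb_per_s
    return codec + raw_seconds(size_gb / ratio, link_gbit)


for link in (1, 10):
    print(link, raw_seconds(100, link), compressed_seconds(100, link, 2.0, 1.0))
# 1 800.0 600.0
# 10 80.0 240.0
```

With these inputs, compressing 100 GB saves time on a 1 Gbit/s link (600 s versus 800 s) but triples the transfer time on a 10 Gbit/s link (240 s versus 80 s).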

A simple comparison test should be made after a reasonable period by disabling compression temporarily and observing what (if any) differences there are in transfer rates or replication time lags. Refer to Data Compression for more information.

Third-party, network-based compression tools can be used to replace or supplement compression on the links that carry replication data between the local and remote DataCore Servers; again, comparative testing is advised. Also, refer to Replication for more information.

Transfer Priorities

Use the Replication Transfer Priorities setting, configured as part of a Virtual Disk's storage profile, to ensure that Replication data for the most important Virtual Disks is sent more quickly than that of others within the same Server Group. Refer to 'Replication Transfer Priority' for more information.

The Replication Buffer

The location of the Replication buffer determines the speed at which the replication process can perform its three basic operations:

- Creating the Replication data (write).

- Sending the Replication data to the remote DataCore Server (read).

- Deleting the Replication data (write) from the buffer once it has been processed successfully by the remote DataCore Server.

Therefore, the disk device that holds the Replication buffer should be able to handle at least 2x the combined write throughput of all replicated Virtual Disks. If the disk device used for the Replication buffer is too slow, it may not empty fast enough to accommodate new Replication data. This results in a full buffer and an overall increase in replication time lag (latency) on the Replication Source DataCore Server.
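The 2x sizing rule reduces to a simple check against the combined write rate of the replicated Virtual Disks. This is a minimal sketch: the per-Virtual-Disk write rates and the candidate device throughput are hypothetical inputs, and both helpers are illustrative names rather than SANsymphony functions.

```python
def required_buffer_write_mbps(vdisk_write_mbps: list[float]) -> float:
    """Write throughput the Replication buffer device should sustain: 2x the combined writes."""
    return 2 * sum(vdisk_write_mbps)


def buffer_keeps_up(device_write_mbps: float, vdisk_write_mbps: list[float]) -> bool:
    """True if the device meets the 2x guideline for these Virtual Disks."""
    return device_write_mbps >= required_buffer_write_mbps(vdisk_write_mbps)


writes = [120.0, 80.0, 50.0]  # MB/s per replicated Virtual Disk (hypothetical)
print(required_buffer_write_mbps(writes))  # 500.0
print(buffer_keeps_up(400.0, writes))      # False
```

Here, a device that sustains 400 MB/s of writes would gradually fall behind, and the replication time lag would grow.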