VMware ESXi Configuration Guide (Formerly Known as FAQ 1556)

This topic includes:

VMware ESXi Compatibility Lists

VMware Path Selection Policies (PSP)

Known Issues in VMware ESXi Configuration Guide

Overview

This guide provides configuration settings and considerations for Hosts running VMware ESXi with SANsymphony.

Basic VMware storage administration skills are assumed, including how to connect to iSCSI and Fibre Channel target ports and how to discover, mount, and format disk devices.

Earlier releases of ESXi may have used different settings from the ones listed here. If, when upgrading an ESXi host, there are previously configured DataCore-specific settings that are no longer listed, leave each of those settings at its original value.

Refer to DataCore Software and VMware's Hardware Compatibility List (HCL) for more information.

Change Summary

Refer to DataCore FAQ 838 for the latest version of the FAQ, and see the Previous Changes section, which lists the earlier changes made to this FAQ.

Changes since June 2023

| Section(s) | Content Changes |
|---|---|
| Known Issues - DataCore Servers running in Virtual Machines (HCI) | Added. Affects ESXi 8.x and 7.x. DataCore Servers running in ESXi Virtual Machines may experience unexpected mirror IO failure causing loss of access for some or all paths to a DataCore Virtual Disk. This problem has been reported as resolved in the following VMware Knowledge base article: “3rd party Hyper Converged Infrastructure setups experience a soft lock up and goes unresponsive indefinitely”. Refer to the Knowledge Base article for more information. However, DataCore, working with VMware, discovered that the problem still existed in the version listed in the article (i.e. ESXi 7.0.3i) and that it was not fixed until ESXi 7.0.3o. DataCore recommends a minimum ESXi version of 7.0.3o (build number 22348816) or later, or 8.0.2 (build number 22380479) or later. This problem does not apply to DataCore Servers running in ‘physical’ Windows servers or when running in non-VMware Virtual Machines (e.g. Hyper-V). |
| Known Issues - Fibre Channel Adaptors | Updated. Hosts with Fibre Channel HBAs that are FC-NVME capable may report their storage adapters twice in ESXi and be unable to discover SANsymphony Virtual Disks served to them. FC-NVME is not supported for SANsymphony Virtual Disk mappings. Turn off the FC-NVME feature on the ESXi host’s HBA driver by using one of the following commands. QLogic HBAs: esxcfg-module -s 'ql2xnvmesupport=0' qlnativefc. Emulex HBAs: esxcli system module parameters set -m lpfc -p lpfc_enable_fc4_type=1. A reboot of the ESXi host is required in both cases. |

VMware ESXi Compatibility Lists

ESXi Software Versions

| ESXi | ALUA Only |
|---|---|
| 6.7 | A minimum requirement of SANsymphony 10.0 PSP 10 Update 1 |
| 7.x | A minimum requirement of SANsymphony 10.0 PSP 10 Update 1 |
| 8.0 | A minimum requirement of SANsymphony 10.0 PSP 15 Update 3 |

- Fibre Channel and iSCSI front end port connection types are both qualified.

- iSER (iSCSI Extensions for RDMA) is 'not supported'.

- ESXi Hosts configured to not use ALUA are 'not qualified'.

ESXi Path Selection Policies (PSP)

| ESXi | MRU | Fixed | Round Robin |
|---|---|---|---|
| 6.7 | Not Qualified | Qualified | Qualified |
| 7.x | Not Qualified | Not Qualified | Qualified |
| 8.0 | Not Qualified | Qualified | Qualified |

VMware vSphere APIs: Array Integration (VAAI)

| ESXi | |
|---|---|
| 6.7 | Qualified |
| 7.x | Qualified |
| 8.0 | Qualified |

Only the following VAAI-specific commands are supported:

- Atomic Test & Set (ATS)

- XCOPY (Extended Copy)

- Write Same (Zero)

- UNMAP (TRIM)

vSphere Metro Storage Clusters (vMSC)

| ESXi | ALUA Only |
|---|---|
| 6.7 | Qualified |
| 7.x | Qualified |
| 8.0 | Qualified |

Virtual disks used for vMSC:

- Virtual disks that have been upgraded to VMFS5 or later from previous VMFS versions are not supported.

- ESXi Hosts configured to not use ALUA are 'not qualified'.

VMware VASA (VVOL)

| VASA | |
|---|---|
| 2.x | A minimum requirement of SANsymphony 10.0 PSP 10 Update 1 |
| 3.0 | A minimum requirement of SANsymphony 10.0 PSP 15 Update 3 |

Refer to 'Getting Started with the DataCore VASA Provider'.

VMware Site Recovery Manager (SRM)

| SRM Version | DataCore SRA Version |
|---|---|
| 8.1 – 8.3 (Windows) | 2.0 |
| 8.2 – 8.7 (Photon) | 3.0 |

Requires the 'DataCore SANsymphony Storage Replication Adapter (SRA)'. Refer to the release notes available from https://datacore.custhelp.com/app/downloads/downloads.

Storage IO Control

| ESXi | |
|---|---|
| 6.7 | Qualified |
| 7.x | Qualified |
| 8.0 | Qualified |

Clustered VMDK

| ESXi | ALUA Only |
|---|---|
| 8.0 | Qualified |

- SMPA - Shared pool from SANsymphony is not supported for clustered VMDK.

- Only the Fibre Channel front-end port connection type is qualified, due to VMware’s limitations on clustered VMDK.

- VMware's requirements and limitations for clustered VMDK are the same in ESXi versions 7 and 8.

Notes on 'Qualified', 'Not Qualified' and 'Not Supported'

Qualified

This combination has been tested by DataCore, with all the host-specific settings listed in this document applied, using non-mirrored, mirrored, and dual virtual disks.

Not Qualified

This combination has not yet been tested by DataCore using Mirrored or Dual virtual disk types. DataCore cannot guarantee 'high availability' (failover/failback, continued access etc.) even if the host-specific settings listed in this document are applied. Self-qualification may be possible; see Technical Support FAQ #1506.

Mirrored or Dual virtual disk types are configured at the user's own risk; however, any problems that are encountered while using VMware versions that are 'Not Qualified' will still get root-cause analysis.

Non-mirrored virtual disks are always considered 'Qualified' - even for 'Not Qualified' combinations of VMware/SANsymphony.

Not Supported

This combination has either failed 'high availability' testing by DataCore using Mirrored or Dual virtual disk types, or the operating system's own requirements/limitations (e.g., age, specific hardware requirements) make it impractical to test. DataCore will not guarantee 'high availability' (failover/failback, continued access etc.) even if the host-specific settings listed in this document are applied. Self-qualification is not possible.

Mirrored or Dual virtual disk types are configured at the user's own risk; however, any problems that are encountered while using VMware versions that are 'Not Supported' will get best-effort Technical Support (e.g., to get access to virtual disks) but no root-cause analysis will be done.

Non-mirrored virtual disks are always considered 'Qualified' – even for 'Not Supported' combinations of VMware/SANsymphony.

ESXi Versions that are End of Support Life (EOSL), End of Availability (EOA) or End of Distribution (EOD)

Self-qualification may be possible for versions that are considered ‘Not Qualified’ by DataCore but only if there is an agreed ‘support contract’ with VMware. Please contact DataCore Technical Support before attempting any self-qualification of ESXi versions that are End of Support Life.

For any problems that are encountered while using VMware versions that are EOSL, EOA or EOD with DataCore Software, only best-effort Technical Support will be performed (e.g., to get access to virtual disks). Root-cause analysis will not be done.

Non-mirrored virtual disks are always considered 'Qualified'.

The DataCore Server Settings

DataCore Servers Running in Virtual Machines

For ESXi 7.x or ESXi 8.x, refer to the Known Issues section.

Refer to the Hyperconverged and Virtual SAN Best Practices Guide for more information.

Operating System Type

When registering the host, choose the 'VMware ESXi' menu option.

Port Roles

Ports that are used to serve virtual disks to hosts should only have the front-end role checked. While it is technically possible to check additional roles on a front-end port (i.e., Mirror and Backend), this may cause unexpected results after stopping the SANsymphony software.

When SANsymphony has been stopped, Front-end and default Mirror Ports will also be 'stopped'. Ports with the back-end role or Mirror Ports that are explicitly configured to use only the Initiator SCSI Mode will remain ‘running’.

Multipathing

The Multipathing Support option should be enabled so that Mirrored virtual disks or Dual virtual disks can be served to hosts from all available DataCore FE ports. Refer to Multipathing Support for more information.

Non-mirrored Virtual Disks and Multipathing

Non-mirrored virtual disks can still be served to multiple hosts and/or multiple host ports from one or more DataCore Server FE ports if required. In this case the host can use its own multipathing software to manage the multiple host paths to the Single virtual disk as if it were a Mirrored or Dual virtual disk.

ALUA Support

The Asymmetric Logical Unit Access (ALUA) support option should be enabled if required and if Multipathing Support has also been enabled (see above). Refer to the Operating system compatibility table to see which combinations of VMware ESXi and SANsymphony support ALUA. More information on Preferred Servers and Preferred Paths used by the ALUA function can be found in Appendix A.

Serving Virtual Disks

For the first time

DataCore recommends that, before serving any virtual disk to a host for the first time, all DataCore front-end ports on all DataCore Servers are correctly discovered by the host.

Then, from within the SANsymphony Console, check that the virtual disk is marked Online and up to date, and that the storage sources have a host access status of Read/Write.

To more than one host port

DataCore virtual disks always have their own unique Network Address Authority (NAA) identifier that a host can use to manage the same virtual disk being served to multiple ports on the same host server and the same virtual disk being served to multiple hosts.

While DataCore cannot guarantee that a disk device's NAA is used by a host's operating system to identify a disk device served to it over different paths, generally we have found that it is. And while there is sometimes a convention that all paths to the same disk device should always use the same LUN 'number' to guarantee consistency for device identification, this may not be technically true. Always refer to the host Operating System vendor’s own documentation for advice on this.

DataCore's software does, however, always try to create mappings between the host's ports and the DataCore Server's Front-end (FE) ports for a virtual disk using the same LUN number where it can. The software will first find the next available (lowest) LUN 'number' for the host-DataCore FE mapping combination being applied and will then try to apply that same LUN number for all other mappings that are being attempted when the virtual disk is being served. If any host-DataCore FE port combination being requested at that moment is already using that same LUN number (e.g., if a host has other virtual disks that were served to it previously) then the software will find the next available LUN number and apply that to those specific host-DataCore FE mappings only.
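The LUN selection described above can be pictured with a small shell sketch. It only models the behaviour as this section describes it and is not DataCore's implementation. The function names lowest_free_lun and assign_luns, and the space-separated lists of LUN numbers already in use on each mapping, are hypothetical.

```shell
#!/bin/sh
# Illustrative model only; function names and the input format are hypothetical.

# Print the lowest LUN number that is not in the space-separated list $1.
lowest_free_lun() {
    _lfl_used=" $1 "
    _lfl_n=0
    while :; do
        case "$_lfl_used" in
            *" $_lfl_n "*) _lfl_n=$((_lfl_n + 1)) ;;
            *) echo "$_lfl_n"; return 0 ;;
        esac
    done
}

# Each argument lists the LUNs already used on one host-port/FE-port mapping.
# The first mapping decides the preferred LUN; a mapping where that LUN is
# already taken falls back to its own lowest free LUN.
assign_luns() {
    _al_pref=$(lowest_free_lun "$1")
    for _al_m in "$@"; do
        case " $_al_m " in
            *" $_al_pref "*) lowest_free_lun "$_al_m" ;;
            *) echo "$_al_pref" ;;
        esac
    done
}

# Three mappings: LUNs 0 and 1 in use, none in use, LUN 2 in use.
assign_luns "0 1" "" "2"
```

In this example the first two mappings receive LUN 2 and the third, where LUN 2 is already taken, receives LUN 0, which is the kind of mismatch the paragraph above describes.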

Path Selection Policies (PSP) and SATP rules

Refer to VMware Path Selection Policies for PSP and SATP-specific settings.

The VMware ESXi Host Settings

Disk.DiskMaxIOSize

For ESXi 7.0 Updates 2 and 2a or ESXi 6.7 Update 3, refer to the Known Issues section before changing the DiskMaxIOSize value.

DataCore strongly recommends that this be changed from ESXi’s default 32MB to a smaller value of 512KB.

Large I/O requests from an ESXi host will be converted into multiple, smaller I/O requests by SANsymphony. The extra time needed to convert the large I/O into smaller requests and then send/ack them all to back-end and mirror paths before finally sending the completion up to the ESXi Host may end up increasing overall latency. The more random the large I/O pattern is, the worse this latency can be. Therefore, making sure that the ESXi host always sends smaller I/O requests will help prevent this additional latency.

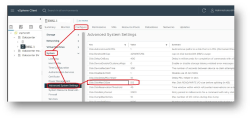

Using vSphere, select the ESXi host and click the Configure tab. From the System options, choose Advanced System Settings and change the Disk.DiskMaxIOSize setting to 512.
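The same setting can also be changed from the ESXi host's console. The following is a minimal sketch, assuming the advanced option path /Disk/DiskMaxIOSize (value in KB). The ESXCLI variable and the two function names are hypothetical helpers introduced here so the commands can be dry-run on a machine without esxcli.

```shell
#!/bin/sh
# Sketch only: ESXCLI can be overridden (e.g. ESXCLI=echo) for a dry run.
ESXCLI="${ESXCLI:-esxcli}"

# Set Disk.DiskMaxIOSize; $1 is the new value in KB (512 is recommended above).
set_disk_max_io_size() {
    case "$1" in
        ''|*[!0-9]*)
            echo "value must be a whole number of KB" >&2
            return 2
            ;;
    esac
    "$ESXCLI" system settings advanced set -o /Disk/DiskMaxIOSize -i "$1"
}

# Show the current and default values of Disk.DiskMaxIOSize.
show_disk_max_io_size() {
    "$ESXCLI" system settings advanced list -o /Disk/DiskMaxIOSize
}
```

On an ESXi host, calling set_disk_max_io_size 512 followed by show_disk_max_io_size applies and then displays the value. Check the Known Issues section first if the host runs one of the affected ESXi releases.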

See also Performance Best Practices for VMware vSphere.

VM Component Protection (VMCP)

DataCore recommends that this setting be enabled.

Enabling VMCP on your ESXi HA cluster allows the cluster to react to “all paths down” and “permanent device loss” conditions by restarting VMs which may speed up the overall VMware recovery process should there be any APD/PDL storage events.

See also VMware Docs for related information.

iSCSI Settings

TCP Ports

By default, port 3260 is used by SANsymphony for iSCSI connections to a DataCore Server.

Software iSCSI Port Binding

DataCore recommends that port binding is not used for any ESXi host connections to DataCore Servers. While host connections will be allowed to log in to DataCore front-end ports, iSCSI traffic cannot be automatically re-routed over different subnets in the case of a failure.

Each ESXi interface should be connected to a separate Front-end port on each DataCore Server.

Here is a simple example of an ESXi host with 4 iSCSI Initiators configured to connect to two different DataCore Servers, each with 2 iSCSI Targets.

| ESXi host 1 (iSCSI Initiator) IP | Initiator IQN | Network | DataCore Server (iSCSI Target) | Target IP | Target IQN |
|---|---|---|---|---|---|
| 192.168.1.1 | iqn.esx1 | ← iSCSI Network 1 → | DataCore Server 1 | 192.168.1.101 | iqn.dcs1-1 |
| 192.168.2.1 | iqn.esx1 | ← iSCSI Network 2 → | DataCore Server 1 | 192.168.2.101 | iqn.dcs1-2 |
| 192.168.1.2 | iqn.esx1 | ← iSCSI Network 1 → | DataCore Server 2 | 192.168.1.102 | iqn.dcs2-1 |
| 192.168.2.2 | iqn.esx1 | ← iSCSI Network 2 → | DataCore Server 2 | 192.168.2.102 | iqn.dcs2-2 |

See also Considerations for using software iSCSI port binding in ESX/ESXi.

Path Selection Policies and SATP Rules

Refer to VMware Path Selection Policies for PSP and SATP-specific settings.

VMware Path Selection Policies (PSP)

Overview

Which PSP does DataCore Software recommend?

DataCore does not recommend any specific PSP. Choose the PSP that is appropriate for your configuration (refer to the compatibility lists). The PSP that is chosen affects which ‘Preferred Server’ setting should be used.

When to Modify an Existing PSP?

If the PSP can use the same SATP as the original setting, then nothing more needs to be done. If the PSP does not use the same SATP as before then DataCore recommends that all virtual disks are unserved from the ESXi host before changing the PSP.

How to Modify an Existing SATP Rule?

To change (or modify) the SATP for an existing PSP, DataCore recommends that the virtual disks are first unserved from the ESXi host. The original SATP must then be removed and the new SATP must be applied before re-serving the virtual disks back to the host.

An example showing how to delete and recreate an existing SATP rule

- List all SATP ALUA rules and identify those with DataCore Virtual Disk entries:

esxcli storage nmp satp rule list -s VMW_SATP_ALUA

- Remove the existing/old rule:

esxcli storage nmp satp rule remove -V DataCore -M "Virtual Disk" -s VMW_SATP_ALUA -P VMW_PSP_RR -O iops=10 -c tpgs_on

- Add the new rule:

esxcli storage nmp satp rule add --vendor="DataCore" --model="Virtual Disk" --satp="VMW_SATP_ALUA" --psp="VMW_PSP_RR" --psp-option="iops=10" --option="disable_action_OnRetryErrors" --claim-option="tpgs_on" --description="DataCore SANsymphony ALUA RR"

- Verify the change:

esxcli storage nmp satp rule list -s VMW_SATP_ALUA

Round Robin (RR) PSP

Creating the SATP Rule

Add the following SATP rule using the ESXi host’s console as one command:

esxcli storage nmp satp rule add --vendor="DataCore" --model="Virtual Disk" --satp="VMW_SATP_ALUA" --psp="VMW_PSP_RR" --psp-option="iops=10" --option="disable_action_OnRetryErrors" --claim-option="tpgs_on" --description="DataCore SANsymphony ALUA RR"

Configuration Notes

- Hosts must have the ALUA setting enabled in the SANsymphony console. See Changing multipath or ALUA support settings for hosts.

- The ‘iops=10’ setting is a DataCore recommended value obtained by extensive QA testing that showed performance improvements when compared to the default value (1000). Refer to Knowledge base article to ensure that this setting persists over a reboot.

- The ‘disable_action_OnRetryErrors’ option must be explicitly set. Refer to Knowledge base article to ensure that this setting persists over reboots.

- For Round Robin PSP, DataCore recommends configuring hosts with either an explicit DataCore Server name or the 'Auto select' setting (see ‘Notes on choosing a preferred DataCore Server’ below).

- If you are trying to modify an existing SATP rule, please follow the instructions on VMware Path Selection Policies.

Choosing a Preferred DataCore Server when using Round Robin PSP

When a host's Preferred Server setting is ‘Auto Select’, the paths to the DataCore Server listed first in the virtual disk’s details tab will be set as ‘Active Optimized’. In the case of a named DataCore Server, that server’s paths will be set as ‘Active Optimized’, regardless of the listing order. All paths to the other DataCore Server(s) will be set as 'Active Non-optimized'.

On using the ‘All’ setting

This will set all paths on all DataCore Servers to be ‘Active Optimized’ and while this may seem, at first, ideal it may end up causing worse performance than expected. When there is a significant distance between the DataCore Servers and the hosts (e.g., across inter-site links between remote data centers) then sending I/O Round Robin to ‘remote’ DataCore Servers may cause noticeable delays and/or latency.

Therefore, testing is advised before using the ‘All’ preferred setting in production to make sure that the I/O speeds between servers are adequate. See Appendix A – Notes on Preferred Server and Preferred Path settings for more details on using the ‘All’ setting.

Fixed PSP

Creating the SATP Rule

Add the following SATP rule using the ESXi host’s console:

esxcli storage nmp satp rule add -V DataCore -M 'Virtual Disk' -s VMW_SATP_ALUA -c tpgs_on -P VMW_PSP_FIXED

Configuration Notes

- Hosts must have the ALUA setting enabled in the SANsymphony console. See Changing multipath or ALUA support settings for hosts.

- For Fixed PSP ESXi hosts must use the ‘All’ Preferred DataCore Server setting (see ‘Notes on choosing a preferred DataCore Server …’ below).

- If you are trying to modify an existing SATP rule, refer to VMware Path Selection Policies for instructions.

The ‘All’ Preferred DataCore Server setting when using Fixed PSP

On using the ‘All’ setting

This will set all paths on all DataCore Servers to be ‘Active Optimized’ and, while this may seem ideal at first, it may end up causing worse performance than expected. When there is a significant distance between the DataCore Servers and the hosts (e.g., across inter-site links between remote data centers), sending I/O to ‘remote’ DataCore Servers may cause noticeable delays and/or latency.

Therefore, as the Fixed PSP requires the ‘All’ setting, testing is strongly advised before using Fixed PSP in production to make sure that the I/O speeds between servers are adequate. Refer to Appendix A – Notes on Preferred Server and Preferred Path settings for more details on using the ‘All’ setting.

Most Recently Used (MRU) PSP

Creating the SATP Rule

Add the following SATP rule using the ESXi host’s console:

esxcli storage nmp satp rule add -V DataCore -M 'Virtual Disk' -s VMW_SATP_DEFAULT_AA -P VMW_PSP_MRU

Configuration Notes

- Hosts must not have the ALUA setting enabled in the SANsymphony console. Refer to Changing multipath or ALUA support settings for hosts.

- When using MRU, the ‘Preferred DataCore Server’ setting is ignored. Refer to VMware's own documentation on how to configure 'active' paths when using MRU PSP.

- If you are trying to modify an existing SATP rule, refer to VMware Path Selection Policies for instructions.

See also Appendix C: Moving from Most Recently Used to Another PSP

This section contains a step-by-step guide on how to migrate from MRU to one of the other PSP types.

Known Issues in VMware ESXi Configuration Guide

The following is intended to make DataCore Software users aware of any issues that affect performance, access or may give unexpected results under specific conditions when SANsymphony is used in configurations with VMware ESXi hosts.

Some of these Known Issues have been found during DataCore’s own testing, but others have been reported by users. Often, the solutions identified for these issues were not related to DataCore's own products.

DataCore cannot be held responsible for incorrect information regarding another vendor’s products and no assumptions should be made that DataCore has any communication with these other vendors regarding the issues listed here.

We always recommend that the vendors should be contacted directly for more information on anything listed in this section.

For ‘Known Issues’ that apply specifically to DataCore Software’s own products, refer to the relevant DataCore Software Component’s release notes.

ESXi Host Settings

Affects ESXi 7.0 Update 2 or 2a and ESXi 6.7 Update 3

Using DataCore’s recommended DiskMaxIOSize of 512KB causes unexpected IO timeouts and latency.

As reported in ESXi 7.0 PR 2751564 and ESXi 6.7 PR 2752542

If you lower the value of the DiskMaxIOSize advanced config option, ESXi hosts I/O operations might fail. If you change the DiskMaxIOSize advanced config option to a lower value, I/Os with large block sizes might get incorrectly split and queue at the PSA path. As a result, ESXi hosts I/O operations might time out and fail.

This can result in unexpected IO timeouts on the host, leading to increased latency.

Workaround:

Users that are on ESXi 7.0 Updates 2 or 2a and ESXi 6.7 Update 3 should increase the DiskMaxIOSize setting from the DataCore recommended 512KB to 1024KB as a workaround. Refer to VMware ESXi Host Settings on how to change the ESXi DiskMaxIOSize setting.

Permanent Fix:

ESXi 7.0 Update 2 and 2a

Apply either ESXi 7.0 Update 2c or Update 3 and later.

A description of the fix can be found in the Release Notes.

ESXi 6.7 Update 3

A fix is available in Patch Release ESXi670-202111001 or later.

A description of the fix can be found in the Release Notes.

High Availability

Affects ESXi 6.x and 7.x

Hosts may see premature APD events during failover when a DataCore Server is stopped or shut down if the SATP ‘action_OnRetryErrors’ default setting is used.

The default ‘action_OnRetryErrors’ setting switched between disabled and enabled and back to disabled again for subsequent releases of ESXi 6.x. Therefore, to guarantee expected failover behavior when a DataCore Server is stopped or shut down on any ESXi 6.x host, DataCore requires that the SATP ‘action_OnRetryErrors’ setting is always explicitly set to ‘disabled’ (i.e., off). Also, see VMware Path Selection Policies – Round Robin PSP for more information.

Affects all ESXi Versions

Sharing the same ‘inter-site’ connection for both Front-end (FE) and Mirror (MR) ports may result in loss of access to virtual disks for ESXi hosts if a failure occurs on that shared connection.

Sharing the same physical connection for both FE and MR ports will work as expected while everything is healthy but any kind of failure-event over the ‘single’ link may cause both MR and FE I/O to fail at the same time. This will cause virtual disks to be unexpectedly inaccessible to hosts even though there is technically still an available I/O path to one of the DataCore Servers.

This is not a DataCore issue per se, as the correct SCSI notification (LUN_NOT_AVAILABLE) is sent back to the ESXi hosts to inform them that a path to the virtual disk is no longer available. However, the ESXi host will ignore this SCSI response and continue to try to access the virtual disk on a path reported as either 'Permanent Device Loss' (PDL) or 'All-Paths-Down' (APD). The host will not attempt any 'failover' (HA) or ‘move’ (Fault Tolerance) and so will lose access to the virtual disk.

Because of this ESXi behaviour, DataCore cannot guarantee failover when hosts are being served virtual disks (FE I/O) over the same physical link(s) that are also used for MR I/O, and recommends that at least two physically separate links are used: one for MR I/O and the other for FE I/O.

Affects ESXi 6.7

Failover/Failback may take significantly longer than expected.

Users have reported that, before Patch Release ESXi-6.7.0-20180804001 (or later) was applied to ESXi 6.7, failover could take more than 5 minutes.

DataCore recommends applying the most up-to-date patches to your Hosts.

Fibre Channel Adaptors

Affects all ESXi versions

Hosts with Fibre Channel HBAs that are FC-NVME capable may report their storage adapters twice in ESXi and be unable to discover SANsymphony Virtual Disks served to them

FC-NVME is not supported for SANsymphony Virtual Disk mappings. Turn off the FC-NVME feature on the ESXi host’s HBA driver by using one of the following commands:

QLogic HBAs:

esxcfg-module -s 'ql2xnvmesupport=0' qlnativefc

Emulex HBAs:

esxcli system module parameters set -m lpfc -p lpfc_enable_fc4_type=1

A reboot of the ESXi host is required in both cases.
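Before and after the reboot, the parameters currently set on the HBA driver module can be listed to confirm the change. This is a minimal sketch: the ESXCLI variable and the function name show_fc_module_params are hypothetical helpers, added so the command can be dry-run off-host.

```shell
#!/bin/sh
# Sketch only: ESXCLI can be overridden (e.g. ESXCLI=echo) for a dry run.
ESXCLI="${ESXCLI:-esxcli}"

# List the parameters of an HBA driver module.
# $1 = module name: qlnativefc for QLogic HBAs, lpfc for Emulex HBAs.
show_fc_module_params() {
    "$ESXCLI" system module parameters list -m "$1"
}
```

On a host with QLogic HBAs, show_fc_module_params qlnativefc lists the module's parameters, where ql2xnvmesupport can be checked. On a host with Emulex HBAs, show_fc_module_params lpfc does the same for lpfc_enable_fc4_type.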

Affects ESXi 7.x and 6.7

Hosts with QLogic HBAs connected to DataCore front-end (FE) ports remain ‘logged out’

Any event that causes a Fibre Channel port to log out (e.g. stopping and starting a DataCore Server) may result in the ESXi host logs reporting many ‘ADISC failure’ messages, preventing the port from logging in. Manually re-initializing the DataCore FE port may work around the problem.

For ESXi 7.x, this affects all ESXi qlnativefc driver versions between 4.1.34.0 and 4.1.35.0. For ESXi 6.7, the affected version is 3.1.36.0.

DataCore recommends a minimum qlnativefc driver version of 4.1.35.0 for ESXi 7.x or 3.1.65.0 for ESXi 6.7.
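A driver version can be compared against these minimums with a short shell sketch. The function names version_ge and min_qlnativefc_version are hypothetical. The comparison assumes purely numeric dotted versions, so any build suffix that a tool such as esxcli software vib list prints after the dotted number would need to be stripped first.

```shell
#!/bin/sh
# Illustrative helpers only; the function names are hypothetical.

# Return 0 when dotted numeric version $1 is greater than or equal to $2.
version_ge() {
    _va=$1
    _vb=$2
    while [ -n "$_va" ] || [ -n "$_vb" ]; do
        # Take the leading field of each version, then drop it.
        _x=${_va%%.*}
        _y=${_vb%%.*}
        if [ "$_va" = "$_x" ]; then _va=""; else _va=${_va#*.}; fi
        if [ "$_vb" = "$_y" ]; then _vb=""; else _vb=${_vb#*.}; fi
        # A missing field counts as 0, so 4.1 equals 4.1.0.
        _x=${_x:-0}
        _y=${_y:-0}
        if [ "$_x" -gt "$_y" ]; then return 0; fi
        if [ "$_x" -lt "$_y" ]; then return 1; fi
    done
    return 0
}

# Print the minimum qlnativefc version recommended above for an ESXi version.
min_qlnativefc_version() {
    case "$1" in
        7.*) echo "4.1.35.0" ;;
        6.7*) echo "3.1.65.0" ;;
        *) return 1 ;;
    esac
}

# Example: a host on ESXi 7.0 with driver 4.1.34.0 is below the minimum.
if ! version_ge "4.1.34.0" "$(min_qlnativefc_version 7.0)"; then
    echo "qlnativefc driver is older than the recommended minimum"
fi
```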

Affects ESXi 7.0 Update 2

Increased read latency of Hosts with QLogic 16Gb Fibre Channel adapters using the qlnativefc driver under certain conditions

Refer to the Networking Issues section for more information.

Affects ESXi 6.7

When using QLogic's Dual-Port, 10Gbps Ethernet-to-PCIe Converged Network Adaptor

When used in Cisco UCS solutions, disable both the adaptor's ‘BIOS’ settings and the 'Select a LUN to Boot from' option to avoid misleading and unexpected ‘disconnection-type’ messages reported by the DataCore Server Front End ports during a reboot of hosts sharing these adaptors.

iSCSI Connections

Affects all ESXi Versions

ESXi hosts may experience degraded IO performance when Delayed ACK is 'enabled' on ESXi’s iSCSI initiator

For more specific information, including how to disable the 'Delayed ACK' feature on ESXi hosts, refer to the section ‘Configuring Delayed ACK in ESXi’ in VMware’s own knowledge base article KB1002598. Note that a reboot of the ESXi host will be required.

Native Drive Sector Size Considerations

Affects all ESXi Versions

Support statement for 512e and 4K Native drives for VMware vSphere and vSAN

Refer to the Knowledge base article KB2091600 for more information.

Also see the section ‘Device Sector Formats’ in VMware’s own vSphere Storage guide for ESXi 8.0 for more limitations of 4K enabled storage devices. Note that it is possible to create 4KB virtual disks from a 512B configured Disk Pool.

Refer to the 4 KB Sector Support documentation for more information.

(Un)Serving Virtual Disks

Affects all ESXi Versions

ESXi hosts need to perform a ‘manual’ rescan whenever virtual disks are unserved

Without this rescan, ESXi hosts will continue to send SCSI requests to the DataCore Servers for these, now unserved, virtual disks. The DataCore Servers will respond appropriately – i.e. with an ILLEGAL_REQUEST – but in extreme cases, e.g. when large numbers of virtual disks are suddenly unserved, or where one or more virtual disks have been unserved from large numbers of ESXi hosts, the amount of continual SCSI responses generated between the ESXi hosts and the DataCore Servers can significantly interfere with normal IO on the Front End Ports thereby impacting overall performance for any (other) host using the same front-end ports that the virtual disks were served from.

Refer to Knowledge base articles KB2004605 and KB1003988.
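The rescan can also be started from the ESXi host's console instead of the vSphere UI. A minimal sketch follows. The ESXCLI variable and the function name rescan_all_adapters are hypothetical helpers, added so the command can be dry-run off-host.

```shell
#!/bin/sh
# Sketch only: ESXCLI can be overridden (e.g. ESXCLI=echo) for a dry run.
ESXCLI="${ESXCLI:-esxcli}"

# Rescan every storage adapter on this host so that paths to unserved
# virtual disks are dropped.
rescan_all_adapters() {
    "$ESXCLI" storage core adapter rescan --all
}
```

Run rescan_all_adapters on each ESXi host that the virtual disks were unserved from.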

VAAI

Affects ESXi 8.x, 7.x, and 6.7

Under ‘heavy’ load the VMFS heartbeat may fail with a 'false' ATS miscompare message.

Previously, the ESXi VMFS 'heartbeat' used normal 'SCSI reads and writes' to perform its function. Starting from ESXi 6.0, the heartbeat method was changed to use ESXi's VAAI ATS commands directly to the storage array (i.e., the DataCore Server). DataCore Servers do not require (and thus do not support) these ATS commands. Therefore, it is recommended to disable the VAAI ATS heartbeat setting.

Refer to the Knowledge Base article KB2113956.

If ESXi hosts are connected to other storage arrays contact VMware to see if it is safe to disable this setting for these arrays.
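As a minimal sketch of what disabling the setting looks like on the ESXi console, the commands below assume the advanced option /VMFS3/UseATSForHBOnVMFS5 as it appears in KB2113956. Treat the KB article as the authoritative procedure. The ESXCLI variable and the function names are hypothetical helpers, added so the commands can be dry-run off-host.

```shell
#!/bin/sh
# Sketch only: ESXCLI can be overridden (e.g. ESXCLI=echo) for a dry run.
ESXCLI="${ESXCLI:-esxcli}"

# Disable the VAAI ATS heartbeat (0 = fall back to plain SCSI reads/writes).
disable_ats_heartbeat() {
    "$ESXCLI" system settings advanced set -i 0 -o /VMFS3/UseATSForHBOnVMFS5
}

# Show the current value of the ATS heartbeat option.
show_ats_heartbeat() {
    "$ESXCLI" system settings advanced list -o /VMFS3/UseATSForHBOnVMFS5
}
```

The setting is host-wide, which is why the note above about other storage arrays connected to the same host matters.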

vMotion

Affects ESXi 6.7

VMs get corrupted on vVOL datastores after vMotion

Corruption of data, backups or replicas and/or performance degradation may be experienced after a VM residing on a vVOL datastore is migrated to another host using vMotion, either by DRS or manually, if the VM has one or more of the following features enabled:

- CBT

- VFRC

- IOFilter

- VM Encryption

Refer to the Knowledge base article KB55800 for more information.

vSphere

Affects ESXi 7.x and 6.7

Cannot extend datastore using VMware’s vCenter

If a SANsymphony virtual disk served to more than one ESXi host is not using the same LUN on all front-end paths for all hosts and then has its logical size extended, vSphere may not be able to display the LUN in its UI to then expand the VMware datastore. Knowledge base article KB1011754 provides steps to work around the issue.

While SANsymphony will always attempt to match a LUN on all hosts for the same virtual disk, it is not always possible to do so, e.g. if the LUN is already being used by another mapped virtual disk. This has no functional impact on SANsymphony. Also see 'To more than one host port' in the Serving Virtual Disks section.

Microsoft Clusters

Affects ESXi 7.x and 6.7

The SCSI-3 Persistent Reserve tests fail for Windows 2012 Microsoft Clusters running in VMware ESXi Virtual Machines.

This is expected. Refer to the Knowledge base article KB1037959 for more information.

Specifically read the 'additional notes' (under the section 'VMware vSphere support for running Microsoft clustered configurations').

Affects all ESXi versions

ESXi/ESX hosts with visibility to RDM LUNs being used by MSCS nodes with RDMs may take a long time to start or during LUN rescan.

Refer to the Knowledge base article KB1016106 for more information.

DataCore Servers Running in Virtual Machines (HCI)

Affects ESXi 8.x and 7.x

DataCore Servers running in ESXi Virtual Machines may experience unexpected mirror IO failure causing loss of access for some or all paths to a DataCore Virtual Disk

This problem has been reported as resolved in the following VMware Knowledge base article:

“3rd party Hyper Converged Infrastructure setups experience a soft lock up and goes unresponsive indefinitely”

Refer to the Knowledge Base article KB88856 for more information.

However, DataCore, working with VMware, discovered that the problem still existed in the version listed in the article (i.e. ESXi 7.0.3i) and that it was not fixed until ESXi 7.0.3o.

DataCore recommends a minimum ESXi version of:

- ESXi 7.0.3o (build number 22348816) or later, or

- ESXi 8.0.2 (build number 22380479) or later

This problem does not apply to DataCore Servers running in ‘physical’ Windows servers or when running in non-VMware Virtual Machines (e.g. Hyper-V).
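The minimum-version recommendation above can be expressed as a simple build-number comparison. The following is a minimal sketch only: the function name and the idea of comparing build numbers within a major release are illustrative assumptions, and the two build numbers are the ones quoted above. How the host's build number is obtained is left to the reader.

```python
# Minimum recommended builds, taken from the list above.
# Keys are ESXi major versions; values are the quoted build numbers.
MIN_BUILD_BY_MAJOR = {7: 22348816, 8: 22380479}


def meets_minimum_build(major: int, build: int) -> bool:
    """Return True if the build is at or above the recommended minimum.

    Assumption: within one major release line, a later patch always has a
    higher build number. Major versions not listed above are rejected
    rather than guessed at.
    """
    if major not in MIN_BUILD_BY_MAJOR:
        raise ValueError(f"No recommendation recorded for ESXi {major}.x")
    return build >= MIN_BUILD_BY_MAJOR[major]


if __name__ == "__main__":
    # Hypothetical host values, for illustration only.
    print(meets_minimum_build(7, 22348816))
    print(meets_minimum_build(8, 22000000))
```

A check like this is only a convenience for auditing several hosts; the build numbers listed above remain the reference.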

Appendices

A: Preferred Server and Preferred Path Settings

Without ALUA Enabled

If hosts are registered without ALUA support, the Preferred Server and Preferred Path settings will serve no function. All DataCore Servers and their respective Front End (FE) paths are considered ‘equal’.

It is up to the host’s own Operating System or Failover Software to determine which DataCore Server is its preferred server.

With ALUA Enabled

Setting the Preferred Server to ‘Auto’ (or an explicit DataCore Server) determines the DataCore Server that is designated ‘Active Optimized’ for host IO. The other DataCore Server is designated ‘Active Non-Optimized’.

If for any reason the Storage Source on the preferred DataCore Server becomes unavailable, and the Host Access for the virtual disk is set to Offline or Disabled, then the other DataCore Server will be designated the ‘Active Optimized’ side. The host will be notified by both DataCore Servers that there has been an ALUA state change, forcing the host to re-check the ALUA state of both DataCore Servers and act accordingly.

If the Storage Source on the preferred DataCore Server becomes unavailable but the Host Access for the virtual disk remains Read/Write (for example, if only the storage behind the DataCore Server is unavailable but the FE and MR paths are all connected), or if the host becomes physically disconnected from the preferred DataCore Server (e.g., Fibre Channel or iSCSI cable failure), then the ALUA state will not change for the remaining ‘Active Non-Optimized’ side. In this case, the DataCore Server will not prevent access to the host, nor will it change the way READ or WRITE IO is handled compared to the ‘Active Optimized’ side. However, the host will still register this DataCore Server’s Paths as ‘Active Non-Optimized’, which may (or may not) affect how the host behaves generally.

Refer to Preferred Servers and Preferred Paths sections in Port Connections and Paths.

In the case where the Preferred Server is set to ‘All’, then both of the DataCore Servers are designated ‘Active Optimized’ for host IO.

All IO requests from a host will use all Paths to all DataCore Servers equally, regardless of the distance that the IO must travel to the DataCore Server. For this reason, the ‘All’ setting is not normally recommended. If a host has to send a WRITE IO to a ‘remote’ DataCore Server (where the IO Path is significantly longer than the path to the ‘local’ DataCore Server), the accrued wait time can be significant: the IO must be sent across the SAN to the remote DataCore Server, the remote DataCore Server must mirror it back to the local DataCore Server, the local DataCore Server must acknowledge the mirror write to the remote DataCore Server, and finally the acknowledgement must be sent back across the SAN to the host.

The benefits of being able to use all Paths to all DataCore Servers for all virtual disks are not always clear cut. Testing is advised.
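To make the cost of a ‘remote’ WRITE concrete, the four legs described above can be added up. This is a rough sketch under stated assumptions: it counts only one-way link delays, ignores processing time on the DataCore Servers, and uses made-up delay values (0.5 ms for a local path, 2.0 ms for the inter-site links). The function name is hypothetical.

```python
def write_ack_time_ms(host_to_server_ms: float, mirror_link_ms: float) -> float:
    """Link delay until the host receives the WRITE acknowledgement.

    Legs counted, in order:
      1. host -> receiving DataCore Server
      2. receiving server -> mirror partner (mirror write)
      3. mirror partner -> receiving server (mirror acknowledgement)
      4. receiving server -> host (acknowledgement)
    """
    return host_to_server_ms + mirror_link_ms + mirror_link_ms + host_to_server_ms


# Illustrative values only; real delays depend on the SAN design.
local_write = write_ack_time_ms(host_to_server_ms=0.5, mirror_link_ms=2.0)
remote_write = write_ack_time_ms(host_to_server_ms=2.0, mirror_link_ms=2.0)

if __name__ == "__main__":
    print(f"WRITE via local server:  {local_write} ms")
    print(f"WRITE via remote server: {remote_write} ms")
```

In this simplified model the mirror legs are paid either way, so the difference comes entirely from the host having to cross the long path twice. Measured behaviour may differ, which is why testing is advised.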

For Preferred Path settings it is stated in the SANsymphony Help:

A preferred front-end path setting can also be set manually for a particular virtual disk. In this case, the manual setting for a virtual disk overrides the preferred path created by the preferred server setting for the host.

So for example, if the Preferred Server is designated as DataCore Server A and the Preferred Paths are designated as DataCore Server B, then DataCore Server B will be the ‘Active Optimized’ Side not DataCore Server A.
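The precedence between the two settings can be summarised as a small decision function. This is a simplified model of the rules described in this appendix, not SANsymphony's implementation: the function and parameter names are hypothetical, an ‘Auto’ Preferred Server is assumed to have been resolved to a server name by the caller, and failure-driven ALUA state changes are not modelled.

```python
from typing import Iterable, Optional, Set


def active_optimized_servers(
    servers: Iterable[str],
    preferred_server: str,
    preferred_path_server: Optional[str] = None,
) -> Set[str]:
    """Return the DataCore Servers a host would see as 'Active Optimized'.

    - A manually set Preferred Path overrides the Preferred Server setting.
    - Preferred Server 'All' makes every server 'Active Optimized'.
    - Otherwise only the named Preferred Server is 'Active Optimized'.
    """
    all_servers = set(servers)
    if preferred_path_server is not None:
        chosen = {preferred_path_server}
    elif preferred_server == "All":
        chosen = set(all_servers)
    else:
        chosen = {preferred_server}

    unknown = chosen - all_servers
    if unknown:
        raise ValueError(f"Not a server in this group: {sorted(unknown)}")
    return chosen


if __name__ == "__main__":
    group = ["A", "B"]
    print(active_optimized_servers(group, "A"))
    print(active_optimized_servers(group, "All"))
    print(active_optimized_servers(group, "A", preferred_path_server="B"))
```

Any server in the group that is not in the returned set corresponds to the ‘Active Non-Optimized’ side.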

In a two-node Server group there is usually nothing to be gained by making the Preferred Path setting different to the Preferred Server setting and it may also cause confusion when trying to diagnose path problems, or when redesigning your DataCore SAN regarding host IO Paths.

For Server Groups that have three or more DataCore Servers, and where one (or more) of these DataCore Servers shares Mirror Paths with other DataCore Servers, setting the Preferred Path makes more sense.

For example, if DataCore Server A has two mirrored virtual disks, one with DataCore Server B and one with DataCore Server C, and DataCore Server B also has a mirrored virtual disk with DataCore Server C, then using just the Preferred Server setting to designate the ‘Active Optimized’ side for the host’s virtual disks becomes more complicated. In this case, the Preferred Path setting can be used to override the Preferred Server setting for a much more granular level of control.

B: Reclaiming Storage from Disk Pools

How Much Storage will be Reclaimed?

This is impossible to predict. SANsymphony can only reclaim Storage Allocation Units that have no block-level data on them. If a host writes its data ‘all over’ its own filesystem, rather than contiguously, the amount of storage that can be reclaimed may be significantly less than expected.
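The effect of scattered writes can be illustrated with a toy model. The sketch below is an assumption-laden simplification: the virtual disk is treated as a flat range of blocks, each SAU covers a fixed number of consecutive blocks, and the sizes used are tiny, made-up values chosen only to keep the arithmetic visible.

```python
from typing import Iterable


def reclaimable_saus(total_blocks: int, blocks_per_sau: int,
                     written_blocks: Iterable[int]) -> int:
    """Count SAUs that hold no written block at all (only these can be reclaimed)."""
    total_saus = total_blocks // blocks_per_sau
    saus_with_data = {block // blocks_per_sau for block in written_blocks}
    return total_saus - len(saus_with_data)


# Eight blocks of data on a 32-block disk with 4 blocks per SAU (8 SAUs).
contiguous = reclaimable_saus(32, 4, range(0, 8))      # data packed into 2 SAUs
scattered = reclaimable_saus(32, 4, range(0, 32, 4))   # one block in every SAU

if __name__ == "__main__":
    print("contiguous layout, reclaimable SAUs:", contiguous)
    print("scattered layout, reclaimable SAUs:", scattered)
```

The same amount of data leaves six of eight SAUs reclaimable when written contiguously and none when spread across the disk, which is why the result cannot be predicted from the amount of free space alone.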

Defragmenting data on served virtual disks

A VMFS volume cannot be defragmented. Refer to the Knowledge base article KB1006810.

Notes on SANsymphony's Reclamation Feature

Automatic Reclamation

SANsymphony checks for any ‘zero’ write I/O as it is received by the Disk Pool and keeps track of which block addresses they were sent to. When all the blocks of an allocated SAU have received ‘zero’ write I/O, the storage used by the SAU is then reclaimed. Mirrored and replicated virtual disks will mirror/replicate the ‘zero’ write I/O so that storage can be reclaimed on the mirror/replication destination DataCore Server in the same way.
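The bookkeeping described above can be sketched as follows. This is an illustrative model only and not DataCore's implementation: the class name, the block-to-SAU mapping, and in particular the rule that a later non-zero write clears a block's ‘zeroed’ mark are assumptions made for the example.

```python
from typing import Dict, Set


class SauZeroTracker:
    """Track which blocks of each SAU have received 'zero' write I/O."""

    def __init__(self, blocks_per_sau: int) -> None:
        self.blocks_per_sau = blocks_per_sau
        self.zeroed: Dict[int, Set[int]] = {}  # SAU index -> zeroed block offsets

    def write(self, block: int, is_zero: bool) -> bool:
        """Record a write; return True if its SAU can now be reclaimed."""
        sau, offset = divmod(block, self.blocks_per_sau)
        offsets = self.zeroed.setdefault(sau, set())
        if not is_zero:
            # Assumption: real data invalidates an earlier 'zero' mark.
            offsets.discard(offset)
            return False
        offsets.add(offset)
        if len(offsets) == self.blocks_per_sau:
            del self.zeroed[sau]  # every block zeroed: SAU is reclaimed
            return True
        return False


if __name__ == "__main__":
    tracker = SauZeroTracker(blocks_per_sau=4)
    for block in range(4):
        print(block, tracker.write(block, is_zero=True))
```

Only the write that zeroes the final remaining block of an SAU triggers reclamation; partially zeroed SAUs stay allocated.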

Manual Reclamation

SANsymphony checks for ‘zero’ block data by sending read I/O to the storage. When all the blocks of an allocated SAU are detected as having ‘zero’ data on them, the storage used by the SAU is then reclaimed.

Mirrored virtual disks will receive the manual reclamation ‘request’ on all DataCore Servers involved in the mirror configuration at the same time and each DataCore Server will read from its own storage. The manual reclamation ‘request’ is not sent to replication destination DataCore Servers from the source. Replication destinations will need to be manually reclaimed separately.

Reclaiming Storage on the Host using VAAI

When used in conjunction with either VMware’s vmkfstools or esxcli commands, the ‘Block Delete/SCSI UNMAP’ VAAI primitive will allow ESXi hosts (and their VMs) to trigger SANsymphony's ‘Automatic Reclamation’ function.

Thin Provisioning Block Space Reclamation (VAAI UNMAP) does not work if the volume is not native VMFS-5 (i.e., it is converted from VMFS-3) or the partition table of the LUN was created manually.

Refer to the Knowledge base article KB2048466.

Space reclamation priority setting

DataCore recommends using the 'Low' space reclamation priority setting. Any other setting could result in excessive I/O loads being generated on the DataCore Server (with large numbers of SCSI UNMAP commands), which may then cause unnecessary increases in I/O latency.

Space reclamation granularity setting

DataCore recommends using 1MB.

Reclaiming Storage on the Host Manually

Create a new VMDK using ‘Thick Provisioning Eager Zero’

One approach is to create an appropriately sized virtual disk device (VMDK) where the storage needs to be reclaimed and ‘zero-fill’ it by formatting it as a ‘Thick Provisioning Eager Zero’ Hard Disk.

- Using vSphere

Add a new ‘Hard Disk’ to the ESXi Datastore of a size less than or equal to the free space reported by ESXi and choose ‘Disk Provisioning: Thick Provisioned Eager Zero’. Once the creation of the VMDK has completed (and storage has been reclaimed from the Disk Pool), this VMDK can be deleted.

- Using the command line

An example:

vmkfstools -c [size] -d eagerzeroedthick /vmfs/volumes/[mydummydir]/[mydummy.vmdk]

Where ‘[size]’ is less than or equal to the free space reported by ESXi.

Once the creation of the VMDK has completed (and storage has been reclaimed from the Disk Pool), this VMDK can be deleted.
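When this is scripted, the size check and the command line from the example above can be assembled in one place. The helper below is a hypothetical sketch: it only builds the argument list and does not run anything, the function and parameter names are invented for illustration, and it assumes the size is given in mebibytes using vmkfstools' ‘m’ size suffix.

```python
from typing import List, Optional


def eagerzero_vmdk_command(free_space_mib: int, datastore_dir: str,
                           vmdk_name: str,
                           size_mib: Optional[int] = None) -> List[str]:
    """Build the vmkfstools argument list for a zero-filled dummy VMDK.

    The requested size defaults to the free space reported by ESXi and is
    rejected if it exceeds that free space.
    """
    size = free_space_mib if size_mib is None else size_mib
    if size <= 0 or size > free_space_mib:
        raise ValueError("size must be positive and no larger than the free space")
    path = f"/vmfs/volumes/{datastore_dir}/{vmdk_name}"
    return ["vmkfstools", "-c", f"{size}m", "-d", "eagerzeroedthick", path]


if __name__ == "__main__":
    # Placeholder names, matching the bracketed placeholders in the example above.
    print(" ".join(eagerzero_vmdk_command(10240, "mydummydir", "mydummy.vmdk")))
```

Returning a list rather than a single string makes it easier to pass the command to whatever remote execution mechanism is in use without extra quoting.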

For Raw Device Mapped virtual disks

Virtual Machines that access SANsymphony virtual disks as RDM devices may be able to generate ‘all-zero’ write I/O patterns using the VM operating system’s own tools. Examples include ‘sdelete’ for Microsoft Windows VMs or ‘dd’ for UNIX/Linux VMs.

C: Moving from Most Recently Used to Another PSP

- On the DataCore Server: Un-serve all virtual disks from the Host.

- On the Host: Rescan all paths/disk devices so that the virtual disks are cleanly removed.

- On the Host: Remove the SATP Rule.

- On the DataCore Server: Check the ALUA box for the host.

- On the DataCore Server: Re-serve all virtual disks back to the host.

- On the Host: Configure the host for either Fixed or Round Robin Path Selection Policy as appropriate:

Round Robin (RR)

Fixed

- On the Host: Rescan all paths/disk devices so that the virtual disks are cleanly rediscovered.

Previous Changes

| Section(s) | Content Changes | Date |
|---|---|---|
| VMware ESXi compatibility lists - All | Added: ESXi 8.x has been added. | June 2023 |
| VMware ESXi compatibility lists – All | Removed: All references to ESXi 5.x and ESXi 6.x up to and including ESXi 6.5 have been removed as some of these are now considered 'End of General Support' and are also soon to be 'End of Technical Guidance' from VMware. | |
| VMware ESXi compatibility lists - All | Updated: Most of the tables have been updated to indicate a minimum SANsymphony requirement where applicable – please check the document for more information. | |
| VMware Path Selection Policies (PSP) | Updated: The example showing how to modify and remove an existing SATP rule has been updated. | |
| Known Issues – Fibre Channel adaptors | Added: FC-NVME is not currently supported for SANsymphony Virtual Disk mappings. To turn off the FC-NVME feature on the ESXi host’s HBA driver use one of the following commands: For QLogic HBAs: esxcfg-module -s 'ql2xnvmesupport=0' qlnativefc For Emulex HBAs: esxcli system module parameters set -m lpfc -p lpfc_enable_fc4_type=1 A reboot of the ESXi host is required in either case. | August 2022 |
| VMware Path Selection Policies – Round Robin | Updated: The command syntax for setting the Round Robin PSP has been amended. Previously, it was: esxcli storage nmp satp rule add -V DataCore -M 'Virtual Disk' -s VMW_SATP_ALUA -P VMW_PSP_RR -O iops=10 -o disable_action_OnRetryErrors -c tpgs_on It had been reported that using the command as stated above was not applying the ‘IOPs’ value consistently, resulting in the default (1000) being used instead. The command switches have now been amended to be more explicit which will resolve this problem. The new command is now: esxcli storage nmp satp rule add --vendor="DataCore" --model="Virtual Disk" | February 2022 |
| Known Issues – ESXi host settings | Updated: Affects ESXi 7.0 Updates 2 & 2a and ESXi 6.7 Update 3. Using DataCore’s recommended DiskMaxIOSize of 512KB causes unexpected IO timeouts and latency. ESXi 7.0 - PR 2751564 and ESXi 6.7 - PR 2752542: If you lower the value of the DiskMaxIOSize advanced config option, ESXi hosts I/O operations might fail. If you change the DiskMaxIOSize advanced config option to a lower value, I/Os with large block sizes might get incorrectly split and queue at the PSA path. As a result, ESXi hosts I/O operations might time out and fail. This can result in unexpected IO timeouts on the host, leading to increased latency. For ESXi 7.0 Update 2 and 2a: A fix is available in either ESXi 7.0 Update 2c or Update 3. A description of the fix can be found in the Release Notes. For ESXi 6.7 Update 3: A fix is available in Patch Release ESXi670-202111001. A description of the fix can be found in the Release Notes. Users that are on ESXi 7.0 Updates 2 & 2a and ESXi 6.7 Update 3 should increase the DiskMaxIOSize setting from the DataCore-recommended 512KB to 1024KB as a workaround. Refer to VMware ESXi Host Settings on how to change the ESXi DiskMaxIOSize setting. | December 2021 |
| Known Issues – ESXi host settings | Updated: Affects ESXi 7.0 Updates 2 & 2a and ESXi 6.7. Using DataCore’s recommended DiskMaxIOSize of 512KB causes unexpected IO timeouts and latency. The DataCore-recommended DiskMaxIOSize setting of 512KB is being ignored by ESXi which can result in unexpected IO timeouts on the host, leading to increased latency. For ESXi 7.0: The problem only occurs in ESXi 7.0 Update 2 and 2a. A fix is available in VMware ESXi 7.0 Update 2c and 3. A description of the fix can be found in the VMware ESXi 7.0 Update 2c Release Notes. PR 2751564: If you lower the value of the DiskMaxIOSize advanced config option, ESXi hosts I/O operations might fail. If you change the DiskMaxIOSize advanced config option to a lower value, I/Os with large block sizes might get incorrectly split and queue at the PSA path. As a result, ESXi hosts I/O operations might time out and fail. ESXi 7.0 Update 2 and 2a users should increase the DataCore-recommended DiskMaxIOSize setting from 512KB to 1024KB as a workaround. Refer to VMware ESXi Host Settings for an explanation on changing the DiskMaxIOSize setting. For ESXi 6.7: The problem occurs in ESXi 6.7 Update 3 (earlier versions of ESXi 6.7 appear to not have the issue). Currently, there is no fix available from VMware. Please contact VMware if more information is required. DataCore suggests mentioning the ESXi 7.0 PR number above as a reference. ESXi 6.7 users should increase the DataCore-recommended DiskMaxIOSize setting from 512KB to 1024KB as a workaround. Refer to VMware ESXi Host Settings for an explanation on changing the DiskMaxIOSize setting. | November 2021 |
| Known Issues – ESXi host settings | Updated: Affects ESXi 7.0 and 6.7. Using DataCore’s recommended DiskMaxIOSize of 512KB causes unexpected IO timeouts and latency. The DataCore-recommended DiskMaxIOSize of 512KB is being ignored by ESXi, which results in unexpected IO timeouts on the host, leading to increased latency. For ESXi 7.0: A fix is now available in VMware ESXi 7.0 Update 2c - ESXi_7.0.2-0.20.18426014. PR 2751564: If you lower the value of the DiskMaxIOSize advanced config option, ESXi hosts I/O operations might fail. If you change the DiskMaxIOSize advanced config option to a lower value, I/Os with large block sizes might get incorrectly split and queue at the PSA path. As a result, ESXi hosts I/O operations might time out and fail. See also VMware ESXi 7.0 Update 2c Release Notes. For ESXi 6.7: The problem occurs in ESXi 6.7 Update 3 (earlier versions of ESXi 6.7 appear to not have the issue). Currently, a fix is not available from VMware. In this case DataCore recommends that the DiskMaxIOSize setting be increased from 512KB to 1024KB until a fix is available. Refer to VMware ESXi Host Settings for more information on how to change the DiskMaxIOSize setting. | October 2021 |
| Known Issues – ESXi host settings | Updated: Affects ESXi 7.0 and 6.7. Using DataCore’s recommended DiskMaxIOSize of 512KB causes unexpected IO timeouts and latency. There is a problem for ESXi 7.0 Updates 2 and 2a as well as ESXi 6.7 Update 3 when using the DataCore-recommended DiskMaxIOSize of 512KB. DataCore had discovered that this setting was being ignored by ESXi, resulting in unexpected IO timeouts on the host, leading to increased latency. For ESXi 7.0 Update 2: A fix is now available – refer to VMware ESXi 7.0 Update 2c Release Notes. PR 2751564: If you lower the value of the DiskMaxIOSize advanced config option, ESXi hosts I/O operations might fail. If you change the DiskMaxIOSize advanced config option to a lower value, I/Os with large block sizes might get incorrectly split and queue at the PSA path. As a result, ESXi hosts I/O operations might time out and fail. For ESXi 6.7 Update 3: DataCore recommends that the DiskMaxIOSize setting be increased from 512KB to 1024KB until a fix is available. Refer to VMware ESXi Host Settings for more information on how to change the DiskMaxIOSize setting. | September 2021 |
| Known Issues – ESXi host settings | Added: Affects ESXi 7.0 and 6.7. Using DataCore’s recommended DiskMaxIOSize of 512KB causes unexpected IO timeouts and latency. DataCore have identified a problem with VMware for ESXi 7.0 Updates 2, 2a & 3 and ESXi 6.7 Update 3 when using DataCore’s recommended DiskMaxIOSize of 512KB. In some cases, this setting is being ignored which causes an IO timeout on the ESXi host which leads to increased latency. VMware are aware of the problem but until a fix is released (possibly in ESXi 7.0 P03 and ESXi 6.7 P06) DataCore recommends that this setting be increased from 512KB to 1024KB. Users who wish to discuss this with VMware directly should quote SR21234904207 as there is as yet no official VMware KB article that DataCore are aware of. | August 2021 |
| Known Issues - QLogic adaptors | Added: Affects ESXi 7.0 Update 2. Read latency of QLogic 16Gb Fibre Channel adapters supported by the qlnativefc driver increases in certain conditions. Refer to the "Networking Issues" section in Known Issues for more information. Affects ESXi 7.x and 6.7. Host ports do not log back into DataCore front-end (FE) ports but remain shown as ‘logged out’. When using qlnativefc driver versions between 4.1.34.0 and 4.1.35.0 (ESXi 7.0) or 3.1.36.0 (ESXi 6.7) and an event occurs that causes the DataCore FE port to log out (e.g., stopping and starting a DataCore Server), the host port may not log back into the DataCore FE port once it is available and at the same time the ESXi host logs are continuously flooded with ‘ADISC failure’ messages. Manually re-initializing the DataCore FE port may work around the problem. DataCore recommends using a minimum qlnativefc driver version of 4.1.35.0 (ESXi 7) or 3.1.65.0 (ESXi 6.7). | |
| VMware Path Selection Policies – Round Robin | Updated: The command to add the new SATP rule was missing a ‘-o’ switch before the disable_action_OnRetryError setting. Before (incorrect): esxcli storage nmp satp rule add -V DataCore -M 'Virtual Disk' -s VMW_SATP_ALUA -P VMW_PSP_RR -O iops=10 disable_action_OnRetryErrors -c tpgs_on After (corrected): esxcli storage nmp satp rule add -V DataCore -M 'Virtual Disk' -s VMW_SATP_ALUA -P VMW_PSP_RR -O iops=10 -o disable_action_OnRetryErrors -c tpgs_on | January 2021 |
| General | Updated: This document has been reviewed for SANsymphony 10.0 PSP 11. No additional settings or configurations are required. | |
| VMware ESXi Compatibility lists - ESXi operating system versions | Added: ESXi 7.0 is now qualified when using ALUA enabled connections to a DataCore Server. | August 2020 |
| The VMware ESXi Host’s settings – ISCSI Settings - Software iSCSI Port Binding | Added: DataCore recommends that port binding is not used for any ESXi Host connections to DataCore Servers. Refer to ISCSI Settings for more details. | |
| VMware Path Selection Policies – Overview | Updated: Added more explicit instructions on how to change/edit an existing SATP type. | |
| VMware Path Selection Policies – Round Robin/Fixed/Most Recently Used | Updated: Minor edits have been made to the notes to simplify and consolidate repeated information over these three sections. There are no settings changes required or any new information. | |
| Known Issues | Updated: Failover/Failback: The information below has been simplified for clarity. No new changes have been added. Affects all ESXi versions. ESXi hosts may see premature APD events during fail-over when a DataCore Server is stopped or shutdown if the SATP ‘action_OnRetryErrors’ default setting is used. The default ‘action_OnRetryErrors’ setting switched between disabled and enabled and back to disabled again for subsequent releases of ESXi 6.x. Therefore, to guarantee expected failover behavior when a DataCore Server is stopped or shutdown on any ESXi 6.x Host, DataCore requires that the SATP ‘action_OnRetryErrors’ setting is always explicitly set to ‘disabled’ (i.e., off). Refer to the VMware Path Selection Policies – Round Robin section for more information. iSCSI connections: The information below has been simplified for clarity. No new changes have been added. Affects all ESXi versions connected to SANsymphony 10.0 PSP 6 Update 5 and earlier. Multiple iSCSI sessions from the same IQN to a DataCore Front End port are not supported. I.e. iSCSI Initiators that have different IP addresses, but which all share the same IQN when trying to connect to the same DataCore FE ports will not work as expected in SANsymphony versions 10.0 PSP 6 Update 5 and earlier. Only the first IP address to create an iSCSI session login will be accepted. All other iSCSI session login attempts from the same IQN regardless of the IP address will be rejected. | |
| Known Issues - Serving/un-serving SANsymphony virtual disks | Added: Affects ESXi 6.0 and 6.5. ESXi 5.5, 6.0 and 6.5 do not support 4K enabled virtual disks. Refer to the Knowledge base article KB2091600. Also see the section ‘Device Sector Formats’ in VMware’s own vSphere Storage guide for ESXi 6.7 for more limitations of 4K enabled storage devices. | April 2020 |
| Known Issues - QLogic network adaptors | Updated: Affects ESXi 6.x and 5.5. When using QLogic's Dual-Port, 10Gbps Ethernet-to-PCIe Converged Network Adaptor: When used in Cisco UCS solutions, disable both the adaptor's ‘BIOS’ settings and 'Select a LUN to Boot from' option to avoid misleading and unexpected ‘disconnection-type’ messages reported by the DataCore Server Front End ports during a reboot of Hosts sharing these adaptors. | |
| VMware Path Selection Policies - Round Robin (RR) PSP | Updated: The ‘notes’ section has been updated. | January 2020 |
| VMware Path Selection Policies - All (RR, Fixed and MRU PSPs) | Updated: Information about how to remove an already configured SATP rule (to update/change the existing SATP rule) had been inadvertently removed in previous editions of this document and has now been re-instated. | |
| Known Issues - Failover/Failback | Updated: Affects ESXi 6.x. ESXi hosts using ALUA may see premature APD events during fail-over scenarios when a DataCore Server is stopped/shutdown. A new feature called ‘action_OnRetryErrors’ was introduced in ESXi 6.0. In ESXi 6.0 and then in ESXi 6.5 the setting was by default always set to ‘disable’ (off). However in ESXi 6.7 VMware changed the default setting to ‘enable’ (on) and this caused unexpected failover/failback behaviour when a DataCore Server is stopped/shutdown. So a code change was made in SANsymphony 10.0 PSP 9 (to allow for this ‘enable’ setting to work as expected). Then in ESXi 6.7 Update 3 VMware reverted the default setting back to ‘disable’ once more. As DataCore can never guarantee which versions of ESXi are being used in any SANsymphony Server Group at any time, this ‘action_OnRetryErrors’ SATP setting needs to be explicitly set (on your ESXi Host) according to the SANsymphony version that is installed. For any ESXi 6.x Hosts the ‘action_OnRetryErrors’ SATP setting should be explicitly set to disabled (off). Refer to the VMware Path Selection Policies – Round Robin section about how to configure the SATP rule for this setting and see the Knowledge base article. | |
| VMware Path Selection Policies - Most Recently Used (MRU) PSP | Removed: The note about being able to use the ‘default’ SATP rule has been removed as this contradicts the statement that a custom SATP rule must be used for MRU. | |
| The VMware ESXi Host's settings - DiskMaxIOSize | Updated: Added an ‘Also see’ link. | December 2019 |
| Performance Best Practices for VMware vSphere 6.7 | Updated | |
| Known Issues – Failover/Failback | Added: Affects ESXi 6.x. ESXi hosts using ALUA may see premature APD events during fail-over scenarios when a DataCore Server is stopped/shutdown. A feature called ‘action_OnRetryErrors’ introduced in ESXi 6.0 was set to ‘disable’ (off) by default; this was also the default setting in ESXi 6.5. However, in ESXi 6.7 VMware changed this default value to ‘enable’ (on) which then required a SANsymphony code change (in 10.0 PSP 9). As DataCore cannot guarantee which versions of ESXi are being used in a SANsymphony Server Group, this SATP setting now needs to be explicitly set according to your SANsymphony version to guarantee expected behavior. Refer to the VMware Path Selection Policies – Round Robin section about how to configure the SATP rule for this setting and see the Knowledge Base article KB67006. | November 2019 |
| VMware ESXi Path Selection Policies | Updated: Round Robin: Information updated based on the Known Issue – Failover/failback section regarding “ESXi hosts using ALUA may see premature APD events during fail-over scenarios when a DataCore Server is stopped/shutdown.” (See added Known Issue above). | |
| Round Robin, Fixed and MRU | Updated: Previously it was stated that DataCore ‘recommends’ that users create custom SATP rules. This has been changed to ‘required’, following the settings listed in the appropriate sections. | |
| Known Issues – Serving and unserving virtual disks | Added: Affects ESXi 6.5. Virtual machine hangs and issues ESXi host based VSCSI reset: Virtual machines with disk size larger than 256GB in VMFS5 and larger than 2.5TB in VMFS6. See the Knowledge Base article KB2152008. | October 2019 |
| General | Updated: This document has been reviewed for SANsymphony 10.0 PSP 9. No additional settings or configurations are required. | |
| General | Removed: Any information that is specific to ESXi versions 5.0 and 5.1 has been removed as these are considered ‘End of General Support’. See: Knowledge Base article KB2145103. | |
| Known Issues - VAAI | Added: Affects ESXi 6.x and 5.x. Thin Provisioning Block Space Reclamation (VAAI UNMAP) does not work if the volume is not native VMFS-5 (i.e. it is converted from VMFS-3) or the partition table of the LUN was created manually. See the Knowledge Base article KB2048466. | July 2019 |
| The DataCore Server’s settings – Port Roles | Updated: The DataCore Server’s settings – Port Roles | |
| General | Removed: All information regarding SANsymphony-V 9.x as this version is end of life (EOL). Refer to End of life notifications for DataCore Software products for more information. | |
| Appendix B - Reclaiming Storage from Disk Pools | Updated: Defragmenting data on virtual disks: For ESXi a VMFS volume cannot be defragmented. Refer to VMware’s own Knowledgebase article: Does fragmentation affect VMFS datastores? | June 2019 |
| The VMware ESXi Host's settings | Updated: Advanced settings – DiskMaxIOSize: An explanation has been added on why DataCore recommends that the default value for an ESXi host is changed. | March 2019 |
| Appendix B - Reclaiming Storage from Disk Pools | Updated: Reclaiming storage on the Host manually: This section now has vSphere-specific references for the manual method of creating a new VMDK using ‘Thick Provisioning Eager Zero’. | |
| VMware Path Selection Policies – Round Robin PSP | Updated: Creating a custom SATP rule: A minor update to the explanation when changing the RR IOPs value. It is now clearer when a change to the rule would or would not be expected to be persistent over reboot of the ESXi Host – see the ‘Notes’ section under the example. | December 2018 |
| VMware ESXi compatibility lists - VMware Site Recovery Manager (SRM) | Updated: ESXi 6.5 is now supported using DataCore’s SANsymphony Storage Replication Adaptor 2.0. ESXi 6.7 is currently not qualified. Please see DataCore’s SANsymphony Storage Replication Adaptor 2.0 release notes from https://datacore.custhelp.com/app/downloads. | November 2018 |
| Known Issues - Failover | Added: Affects ESXi 6.7 only. Failover/Failback takes significantly longer than expected. Users have reported to DataCore that before applying ESXi 6.7, Patch Release ESXi-6.7.0-20180804001 (or later) failover could take in excess of 5 minutes. DataCore is recommending (as always) to apply the most up-to-date patches to your ESXi operating system. Refer to the Knowledge Base article KB56535. | October 2018 |
| VMware ESXi compatibility lists - ESXi operating system versions | Added: Added to the ‘Notes’ section: iSER (iSCSI Extensions for RDMA) is not supported. | |
| VMware ESXi compatibility lists | Updated: VMware VVOL VASA API 2.0: Previously ESXi 5.x was incorrectly listed as VVOL/VASA compatible with 10.0 PSP 4 and later. | |

See Also

- Clustered VMDK support for WSFC

- Recommendations for using Clustered VMDKs with WSFC

- Requirements for using Clustered VMDKs with WSFC

- Limitations of Clustered VMDK support for WSFC

- Video: Configuring ESXi Hosts in the DataCore Management Console

- Registering Hosts

- Changing Virtual Disk Settings - SCSI Standard Inquiry

- Changing a LUN to Use a Different Path Selection Policy (PSP)

- Changing Multipath or ALUA Support Settings for Hosts

- Moving from Most Recently Used (MRU) to a different PSP

- Space Reclamation Requests from Guest Operating Systems

- Auto-reclamation of unused SAUs

- Reclaiming Unused virtual disk Space in Disk Pools