Monitoring

Overview

Effective monitoring is critical for maintaining the health and performance of storage infrastructure. This document outlines the metrics exposed by various exporters within the Replicated PV Mayastor ecosystem. These metrics enable observability of resource usage, performance trends, and operational statuses, thereby facilitating data-driven troubleshooting and capacity planning.

Depending on the situation, it performs either a full rebuild (restores the entire replica) or a partial rebuild (restores only the changed data). This flexibility ensures fast recovery with minimal disruption to applications.

Enable Monitoring

The DataCore Puls8 monitoring stack is enabled by default when you install DataCore Puls8 using Helm.

To disable monitoring, use the following Helm flag:

This disables the installation of Prometheus, Grafana, and related monitoring components that are part of the DataCore Puls8 monitoring stack.

To collect metrics such as pool usage, volume statistics, and I/O performance, ensure that monitoring is not disabled.

Pool Metrics Exporter

The Pool Metrics Exporter runs as a sidecar container alongside each I/O Engine pod. It exposes Prometheus-compatible pool metrics via the metrics HTTP endpoint on port 9502. These metrics are refreshed every five minutes to reflect recent usage and state information.

Supported Pool Metrics

| Name | Type | Unit | Description |
|---|---|---|---|
| disk_pool_total_size_bytes | Gauge | Integer | Total size of the pool in bytes |
| disk_pool_used_size_bytes | Gauge | Integer | Used size of the pool in bytes |
| disk_pool_status | Gauge | Integer | Pool status: 0 = Unknown, 1 = Online, 2 = Degraded, 3 = Faulted |
| disk_pool_committed_size | Gauge | Integer | Committed size of the pool in bytes |
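The pool gauges can be combined into derived values such as utilization and over-commitment. The following is a minimal Python sketch, not part of the product. It parses Prometheus text exposition lines, ignores labels, and therefore assumes the input describes a single pool. The names `parse_samples` and `pool_summary` are hypothetical, and the code accepts the committed-size series with or without a `_bytes` suffix as an assumption.

```python
import re

# Matches lines such as: disk_pool_used_size_bytes{node="worker-0", name="pool"} 2147483648
SAMPLE_RE = re.compile(r'^([A-Za-z_:][A-Za-z0-9_:]*)(?:\{[^}]*\})?\s+(\S+)$')

# Status codes as listed in the Supported Pool Metrics table.
POOL_STATUS = {0: "Unknown", 1: "Online", 2: "Degraded", 3: "Faulted"}


def parse_samples(text):
    """Return {metric_name: value}, ignoring labels, comments, and blank lines."""
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = SAMPLE_RE.match(line)
        if match:
            samples[match.group(1)] = float(match.group(2))
    return samples


def pool_summary(samples):
    """Derive utilization figures for a single pool from its gauges."""
    total = samples["disk_pool_total_size_bytes"]
    used = samples["disk_pool_used_size_bytes"]
    # Assumption: the committed-size series may or may not carry a _bytes suffix.
    committed = samples.get(
        "disk_pool_committed_size_bytes",
        samples.get("disk_pool_committed_size", 0.0),
    )
    status_code = int(samples.get("disk_pool_status", 0))
    return {
        "status": POOL_STATUS.get(status_code, "Unknown"),
        "used_percent": 100.0 * used / total,
        "committed_ratio": committed / total,
        "overcommitted": committed > total,
    }
```

A committed ratio above 1 indicates that more capacity has been promised to volumes than the pool physically holds, which is worth tracking alongside the used percentage.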

Sample Pool Metrics Output

# HELP disk_pool_status Status of the disk pool (1 = healthy, 0 = unhealthy)

# TYPE disk_pool_status gauge

disk_pool_status{node="worker-0", name="mayastor-disk-pool"} 1

# HELP disk_pool_total_size_bytes Total size of the disk pool in bytes

# TYPE disk_pool_total_size_bytes gauge

disk_pool_total_size_bytes{node="worker-0", name="mayastor-disk-pool"} 5360320512

# HELP disk_pool_used_size_bytes Used size of the disk pool in bytes

# TYPE disk_pool_used_size_bytes gauge

disk_pool_used_size_bytes{node="worker-0", name="mayastor-disk-pool"} 2147483648

# HELP disk_pool_committed_size_bytes Committed size of the disk pool in bytes

# TYPE disk_pool_committed_size_bytes gauge

disk_pool_committed_size_bytes{node="worker-0", name="mayastor-disk-pool"} 9663676416

Stats Exporter Metrics

When eventing is enabled, statistics are collected by the obs-callhome-stats container within the callhome pod. These metrics are exposed on port 9090 at the /stats endpoint.
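As a rough illustration of consuming this endpoint, the Python sketch below fetches `/stats` and parses labeled counter lines of the form `volume{action="created"} 6`. It is an assumption-laden example rather than a supported client: `parse_stats` and `fetch_stats` are hypothetical names, the `host` argument stands for whatever address reaches the callhome pod in your cluster, and the parser only handles integer counters with a single `action` label.

```python
import re
from urllib.request import urlopen

# Matches labeled counters such as: volume{action="created"} 6
COUNTER_RE = re.compile(r'^(\w+)\{action="([^"]+)"\}\s+(\d+)$')


def parse_stats(text):
    """Return {(metric, action): count} from Prometheus text exposition lines."""
    stats = {}
    for line in text.splitlines():
        match = COUNTER_RE.match(line.strip())
        if match:  # HELP/TYPE comments and blank lines do not match
            metric, action, count = match.groups()
            stats[(metric, action)] = int(count)
    return stats


def fetch_stats(host, port=9090, timeout=5):
    """Fetch and parse /stats; `host` is an assumed, reachable pod address."""
    with urlopen(f"http://{host}:{port}/stats", timeout=timeout) as response:
        return parse_stats(response.read().decode("utf-8"))
```

Keying the result by `(metric, action)` keeps lookups such as `stats[("pool", "created")]` straightforward.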

Supported Statistics Metrics

| Name | Type | Unit | Description |
|---|---|---|---|
| pools_created | Gauge | Integer | Count of successfully created pools |
| pools_deleted | Gauge | Integer | Count of successfully deleted pools |
| volumes_created | Gauge | Integer | Count of successfully created volumes |
| volumes_deleted | Gauge | Integer | Count of successfully deleted volumes |

Sample Statistics Output

# HELP nexus Nexus stats

# TYPE nexus counter

nexus{action="created"} 21

nexus{action="deleted"} 18

nexus{action="rebuild_ended"} 0

nexus{action="rebuild_started"} 0

# HELP pool Pool stats

# TYPE pool counter

pool{action="created"} 3

pool{action="deleted"} 0

# HELP volume Volume stats

# TYPE volume counter

volume{action="created"} 6

volume{action="deleted"} 15

CSI Metrics Exporter

The CSI metrics exporter provides insights into volume-level statistics. These metrics are collected by kubelet and exported for Prometheus monitoring.

Supported Volume Metrics

| Name | Type | Unit | Description |
|---|---|---|---|
| kubelet_volume_stats_available_bytes | Gauge | Integer | Usable size of the volume in bytes |
| kubelet_volume_stats_capacity_bytes | Gauge | Integer | Total capacity of the volume in bytes |
| kubelet_volume_stats_used_bytes | Gauge | Integer | Amount of used space in bytes |
| kubelet_volume_stats_inodes | Gauge | Integer | Total number of inodes |
| kubelet_volume_stats_inodes_free | Gauge | Integer | Count of available inodes |
| kubelet_volume_stats_inodes_used | Gauge | Integer | Number of inodes used for metadata |
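The volume gauges are typically turned into percentages before alerting on them. The Python sketch below shows one way to do that. The function names and the 10% free-space threshold are illustrative assumptions, not product defaults.

```python
def volume_usage(capacity_bytes, used_bytes, inodes, inodes_used):
    """Return space and inode usage as percentages of their totals."""
    return {
        "space_used_percent": 100.0 * used_bytes / capacity_bytes,
        # Guard against a zero inode count to avoid dividing by zero.
        "inodes_used_percent": 100.0 * inodes_used / inodes if inodes else 0.0,
    }


def is_nearly_full(capacity_bytes, available_bytes, min_free_fraction=0.10):
    """True when the available fraction drops below `min_free_fraction`."""
    return available_bytes / capacity_bytes < min_free_fraction
```

The check uses the available-bytes gauge rather than capacity minus used, because filesystems may reserve space, so the two values do not necessarily agree.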

Performance Monitoring Stack

Initially, the metrics exporters served cached data, which could be stale by the time Prometheus polled them. The exporters now query the I/O Engine directly, in sync with the Prometheus polling cycle, so each poll returns current values.

It is recommended to set the Prometheus poll interval to at least 5 minutes.
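To check a configured interval against this recommendation, a small helper can convert Prometheus-style duration strings to seconds. The Python sketch below is an illustration only: `duration_seconds` and `meets_recommendation` are hypothetical names, and the parser is more lenient than Prometheus itself about the order of units.

```python
import re

# Seconds per unit for the duration units used in Prometheus configuration.
UNIT_SECONDS = {
    "ms": 0.001, "s": 1, "m": 60, "h": 3600,
    "d": 86400, "w": 604800, "y": 31536000,
}
TOKEN_RE = re.compile(r"(\d+)(ms|s|m|h|d|w|y)")


def duration_seconds(value):
    """Convert a duration such as '5m' or '1h30m' to seconds."""
    tokens = TOKEN_RE.findall(value)
    # Reject input with characters that are not part of any number/unit pair.
    if not tokens or "".join(number + unit for number, unit in tokens) != value:
        raise ValueError(f"not a valid duration: {value!r}")
    return sum(int(number) * UNIT_SECONDS[unit] for number, unit in tokens)


def meets_recommendation(poll_interval, minimum="5m"):
    """True when `poll_interval` is at least as long as `minimum`."""
    return duration_seconds(poll_interval) >= duration_seconds(minimum)
```

For example, `meets_recommendation("30s")` is false, while `meets_recommendation("10m")` is true.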

Accessing Grafana

Grafana provides a visual interface to monitor metrics collected by Prometheus. To access Grafana in your environment, follow these steps:

-

Verify the Grafana Pod is running.

-

Check the Grafana service IP and port.

-

Access Grafana via Port-Forwarding.

- Use port-forwarding to connect to Grafana locally if external access is not available:

- Once port-forwarding is established, Open a browser and visit

http://127.0.0.1:[grafana-forward-port](Example:http://127.0.0.1:8080). - Use the default login credentials:

Username: adminandPassword: admin. - After logging in, the Home page is displayed.

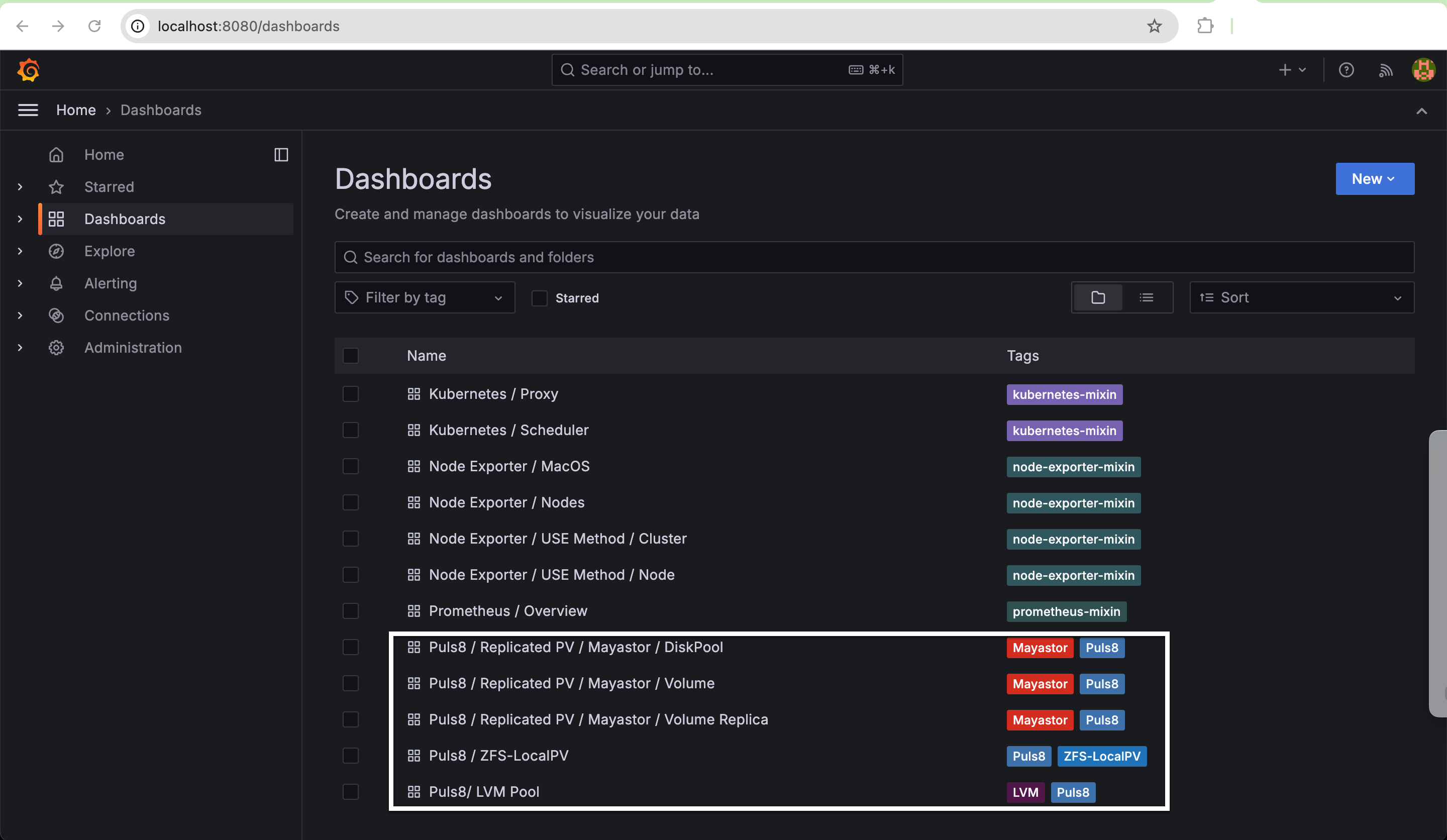

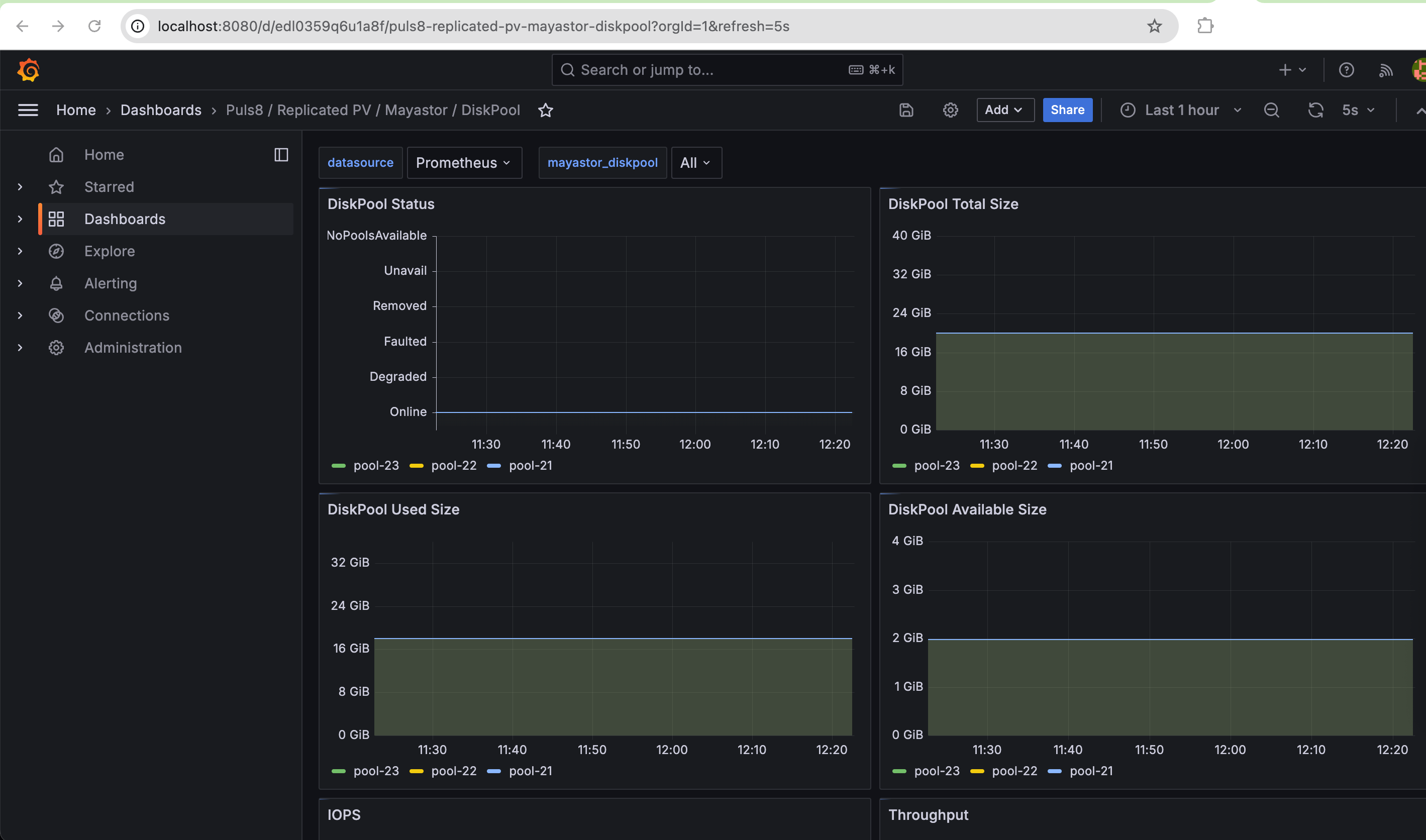

- To view the Puls8 dashboards, click Dashboards on the left-hand panel. For example, if you select

Puls8/Replicated PV/Mayastor/Diskpool, you can view:- Diskpool Status

- Diskpool Total Size

- Diskpool Used Size

- Diskpool Available Size

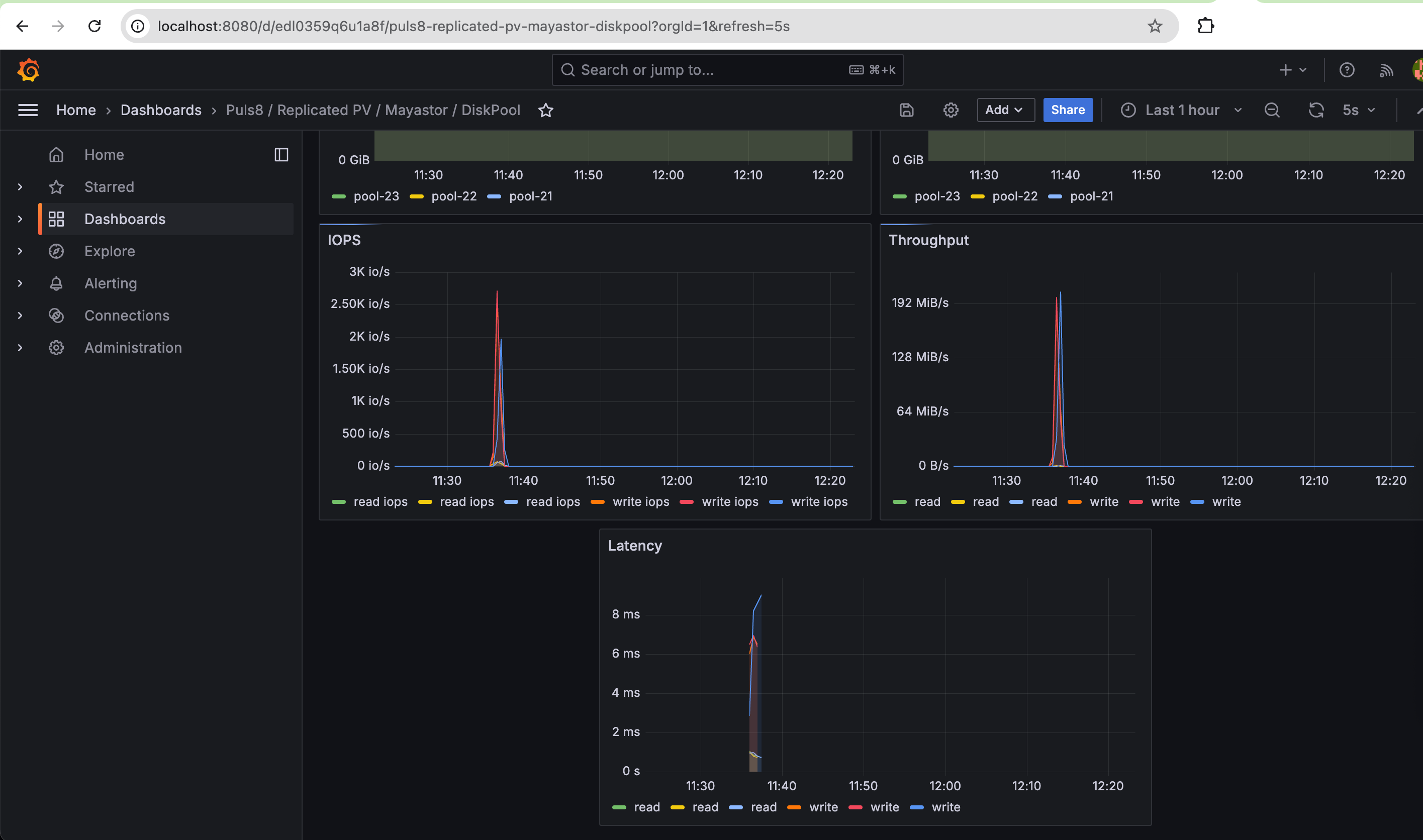

- IOPS

- Throughput

- Latency

CopyConnect to Grafana Locallykubectl port-forward --namespace [NAMESPACE] pods/[grafana-pod-name] [grafana-forward-port]:[grafana-cluster-port]CopyExample: Port Forward the Grafana Service from the Puls8 Namespace to Your Local Port 8080kubectl port-forward svc/puls8-grafana -n puls8 8080:80
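Once a port-forward is running, a quick scripted check can confirm that Grafana answers on the forwarded port before you open a browser. The Python sketch below is a hedged example: the helper names are hypothetical, and it relies on Grafana's `/api/health` endpoint returning a JSON body with a `database` field, which is an assumption about the Grafana version in your deployment.

```python
import json
from urllib.request import urlopen


def grafana_base_url(forward_port, host="127.0.0.1"):
    """Build the local URL exposed by `kubectl port-forward`."""
    return f"http://{host}:{forward_port}"


def grafana_is_healthy(base_url, timeout=3):
    """Return True if /api/health responds and reports a healthy database."""
    try:
        with urlopen(f"{base_url}/api/health", timeout=timeout) as response:
            payload = json.loads(response.read().decode("utf-8"))
    except (OSError, ValueError):
        # Connection refused, timeout, HTTP error, or a non-JSON body.
        return False
    return isinstance(payload, dict) and payload.get("database") == "ok"
```

With the example port-forward in place, `grafana_is_healthy(grafana_base_url(8080))` should return true once Grafana is ready to serve requests.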

I/O Performance Metrics

DiskPool I/O Statistics

| Name | Type | Labels | Unit | Description |
|---|---|---|---|---|
| diskpool_num_read_ops | Gauge | | Integer | Number of read operations on the pool |
| diskpool_bytes_read | Gauge | | Integer | Total bytes read |
| diskpool_num_write_ops | Gauge | | Integer | Number of write operations |
| diskpool_bytes_written | Gauge | | Integer | Total bytes written |
| diskpool_read_latency_us | Gauge | | Integer | Aggregate read latency in microseconds |
| diskpool_write_latency_us | Gauge | | Integer | Aggregate write latency in microseconds |

Replica I/O Statistics

| Name | Type | Labels | Unit | Description |
|---|---|---|---|---|
| replica_num_read_ops | Gauge | | Integer | Number of read operations on replica |
| replica_bytes_read | Gauge | | Integer | Total bytes read on the replica |
| replica_num_write_ops | Gauge | | Integer | Number of write operations |
| replica_bytes_written | Gauge | | Integer | Total bytes written |
| replica_read_latency_us | Gauge | | Integer | Read latency in microseconds |
| replica_write_latency_us | Gauge | | Integer | Write latency in microseconds |

Volume Target I/O Statistics

| Name | Type | Labels | Unit | Description |
|---|---|---|---|---|
| volume_num_read_ops | Gauge | | Integer | Number of read operations via volume |
| volume_bytes_read | Gauge | | Integer | Total bytes read via volume |
| volume_num_write_ops | Gauge | | Integer | Number of write operations via volume |
| volume_bytes_written | Gauge | | Integer | Total bytes written via volume |
| volume_read_latency_us | Gauge | | Integer | Read latency in microseconds |
| volume_write_latency_us | Gauge | | Integer | Write latency in microseconds |
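Dashboard figures such as IOPS, throughput, and latency can be derived from two successive samples of these metrics. The Python sketch below illustrates the arithmetic under a stated assumption: that the operation, byte, and latency values are cumulative totals, so the difference between two polls describes the activity in that interval. The function name `io_rates` is hypothetical, and counter resets (for example, after a restart) are not handled.

```python
def io_rates(previous, current, interval_seconds, prefix="volume"):
    """Derive IOPS, throughput, and average latency from two samples.

    `previous` and `current` map metric names to values taken
    `interval_seconds` apart. `prefix` selects the metric family:
    "diskpool", "replica", or "volume".
    """
    def delta(name):
        return current[f"{prefix}_{name}"] - previous[f"{prefix}_{name}"]

    rates = {}
    for kind, ops, nbytes, latency in (
        ("read", "num_read_ops", "bytes_read", "read_latency_us"),
        ("write", "num_write_ops", "bytes_written", "write_latency_us"),
    ):
        ops_delta = delta(ops)
        rates[f"{kind}_iops"] = ops_delta / interval_seconds
        rates[f"{kind}_bytes_per_second"] = delta(nbytes) / interval_seconds
        # Average latency per operation over the interval, in microseconds.
        rates[f"{kind}_avg_latency_us"] = (
            delta(latency) / ops_delta if ops_delta else 0.0
        )
    return rates
```

For instance, 3,000 additional reads and 600,000 additional microseconds of read latency over a 300-second interval work out to 10 read IOPS at an average of 200 microseconds per read.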

Benefits of Monitoring

- Enables real-time visibility into storage usage, performance, and system health.

- Assists in proactive detection and resolution of issues before they impact workloads.

- Provides historical data for capacity planning and trend analysis.

- Facilitates compliance with SLAs and performance benchmarks.
