Proxmox: Host Configuration Guide

Overview

This guide provides information on the configuration settings and considerations for hosts that are running Proxmox with DataCore SANsymphony.

The basic installation of the nodes must be carried out according to the Proxmox specifications. Refer to the Proxmox installation guide.

The Proxmox host is referred to as "PVE" throughout this guide, consistent with Proxmox terminology.

It is recommended to use the virtio-win-0.1.271 driver version for Windows virtual machines on Proxmox for optimal performance and compatibility. Newer virtio-win versions (such as 0.1.285) may cause performance issues.

Version

The guide applies to the following software versions:

- SANsymphony 10.0 PSP18

- Windows Server 2022 Standard

- Proxmox version 8.3.2

Change Summary

| Sections | Content Changes | Date |
|---|---|---|
| NVMeoF | Added new sections for NVMeoF, NVMe/TCP, NVMe/FC, and NVMe Multipath. | October 2025 |

Network Design

When designing the PVE server, make sure that all network functions have sufficient performance and redundancy.

This minimizes the impact of network failures or bottlenecks on the environment during production operation.

The following recommendations are based on a highly available, redundant design.

- Proxmox

  - Web UI = 2 x 1 Gbit as Active/Backup bond

  - Corosync cluster = 2 x 1 Gbit as two single ports, no bond

  - VM/Container = 2 x 10/25 Gbit in LACP or Active/Backup bond (depending on the existing switch infrastructure)

- SANsymphony

  - Frontend ports = 2 x 10 Gbit (iSCSI)

Configuring the Network

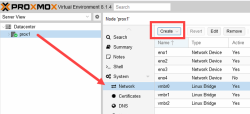

Network configuration is performed in the Proxmox (PVE) graphical user interface (GUI) under Host > Network.

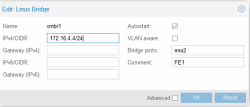

For each network interface card (NIC) used for SANsymphony iSCSI (for example, 2 x frontend (FE) ports with static IP addresses), create a Linux Bridge using the Create button:

- Name: Enter an applicable name, for example vmbrN, where 0 ≤ N ≤ 4094.

- IPv4/CIDR: Enter the IP address and subnet mask in CIDR notation.

- Autostart: Check the box to enable this option.

- Bridge ports: Enter the NIC to be used.

- Comment: Enter the function of the bridge. This makes the environment easier to administer.

- Advanced: Check this box to display additional options, and set an MTU size that supports jumbo frames.

- Click OK to apply the configuration settings.

- Click Reset to clear the values entered and start over.

The configuration changes only become active after you click the Apply Configuration button.

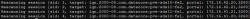

Activating changes via CLI is possible with the command:

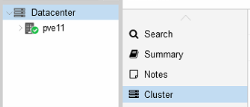
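As an alternative to the GUI, a Linux Bridge can be written directly to /etc/network/interfaces, which is where PVE stores its network configuration. The following is a minimal sketch that uses a hypothetical helper, `add_iscsi_bridge`. The bridge name, NIC name (ens1f0), address, MTU, and comment in the usage lines are assumptions. On Proxmox VE 8, which uses ifupdown2, `ifreload -a` should apply the change.

```shell
# Hypothetical helper: append a Linux Bridge stanza for one SANsymphony iSCSI frontend port.
# Arguments: <interfaces file> <bridge> <nic> <ipv4/cidr> <mtu> <comment>
add_iscsi_bridge() {
    local file="$1" bridge="$2" nic="$3" cidr="$4" mtu="$5" comment="$6"
    # The bridge carries the static IP address; the physical NIC is only a bridge port.
    cat >> "$file" <<EOF

auto ${bridge}
iface ${bridge} inet static
    address ${cidr}
    bridge-ports ${nic}
    bridge-stp off
    bridge-fd 0
    mtu ${mtu}
#${comment}
EOF
}

# Example (all values are assumptions; back up the file first):
#   cp /etc/network/interfaces /etc/network/interfaces.bak
#   add_iscsi_bridge /etc/network/interfaces vmbr1 ens1f0 172.16.41.11/24 9000 "iSCSI FE1"
#   ifreload -a   # apply the configuration (ifupdown2)
# For jumbo frames, the NIC's own "iface ens1f0 inet manual" stanza typically needs the same MTU.
```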

Creating a Cluster

A minimum of three fully functional PVE nodes is required, or alternatively, two nodes plus a quorum device (QDevice). The steps below for creating a cluster do not cover configuration of the quorum device or high availability (HA). For those details, refer to the Proxmox Cluster Manager section on the official Proxmox website.

- Under Datacenter > Cluster, click Create Cluster.

- Enter the cluster name.

- Select a network connection from the drop-down list to serve as the main cluster network (Link 0). The network connection defaults to the IP address resolved via the node’s hostname.

Adding a Node

- Log in to the GUI on an existing cluster node.

- Under Datacenter > Cluster, click the Join Information button displayed at the top.

- Click the Copy Information button. Alternatively, copy the string from the Information field.

- Next, log in to the web interface on the node you want to add.

- Under Datacenter > Cluster, click Join Cluster.

- Fill the Information field with the Join Information text copied earlier. Most settings required for joining the cluster will be filled out automatically.

- For security reasons, enter the cluster password manually.

- Click Join.

The node is added.
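Both procedures can also be run from the shell with the Proxmox cluster manager, `pvecm`. The sketch below wraps them in two hypothetical helpers. The optional second link reflects the two single Corosync ports recommended in Network Design. The cluster name and addresses you pass are your own values.

```shell
# Hypothetical wrappers around the Proxmox cluster manager CLI (pvecm).
# On the first node: <cluster name> <Link 0 address> [Link 1 address]
pve_cluster_create() {
    if [ -n "${3:-}" ]; then
        # Second Corosync link on the separate, non-bonded port
        pvecm create "$1" --link0 "$2" --link1 "$3"
    else
        pvecm create "$1" --link0 "$2"
    fi
}

# On each node to be added: <IP address of an existing cluster node>
pve_cluster_join() {
    pvecm add "$1"   # prompts for the root password of the existing node
    pvecm status     # confirm quorum and membership
}
```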

SCSI

This section details the configuration, setup, and management of SCSI devices, including iSCSI daemons, multipath configurations, Fibre Channel HBAs, and best practices for integrating SANsymphony virtual disks with Proxmox nodes. Proper SCSI configuration ensures optimal performance, reliability, and high availability of your storage infrastructure.

PVE SCSI/TCP (iSCSI)

The iSCSI configuration in this guide is based on the Proxmox wiki article, which reflects the vendor's current recommendations. The steps below were valid at the time of writing.

Before setting up iSCSI, it is recommended to read the Proxmox ISCSI installation - Proxmox VE wiki article.

Installing iSCSI Daemon

iSCSI is a widely used technology for connecting to storage servers. Almost all storage vendors support iSCSI, and open-source iSCSI target solutions are also available, for example based on Debian.

To use iSCSI on Debian (and therefore on PVE), install the Open-iSCSI (open-iscsi) package. This is a standard Debian package, but it is not installed by default to save resources.

Changing the iSCSI-Initiator Name on each PVE

The Initiator Name must be unique for each iSCSI initiator. Do NOT duplicate the iSCSI-Initiator Names.

- Edit the iSCSI initiator name in the /etc/iscsi/initiatorname.iscsi file and assign a unique name, so that the IQN identifies the server and its function. This makes administration and troubleshooting easier.

- Restart the iSCSI service for the change to take effect, using the following command:

Original: InitiatorName=iqn.1993-08.org.debian:01:bb88f6a25285

Modified: InitiatorName=iqn.1993-08.org.debian:01:<Servername + No.>
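The installation and renaming steps can be scripted roughly as follows. This is a sketch that assumes the Debian service names `iscsid` and `open-iscsi`. The helper name and the example IQN suffix are hypothetical. Only the `InitiatorName=` line is replaced, so the comments in the file are kept.

```shell
# Install the package once per PVE node (it is not installed by default):
#   apt-get install -y open-iscsi

# Hypothetical helper: replace the generated initiator name with a unique, descriptive IQN
# and restart the iSCSI services so that the new name is used for the next logins.
# Arguments: <new IQN> [initiator file, default /etc/iscsi/initiatorname.iscsi]
pve_set_initiator_name() {
    local iqn="$1" file="${2:-/etc/iscsi/initiatorname.iscsi}"
    cp "$file" "$file.bak"                                   # keep the original name
    sed -i "s|^InitiatorName=.*|InitiatorName=${iqn}|" "$file"
    systemctl restart iscsid open-iscsi
}

# Example (the suffix is an assumption; encode the server name and function):
#   pve_set_initiator_name iqn.1993-08.org.debian:01:pve01-iscsi
```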

Discovering the iSCSI Targets from the PVE

- Before logging in to the SANsymphony iSCSI targets, discover all target IQNs and portals using the following command:

- Log in to all SANsymphony iSCSI targets on each PVE using the following command:

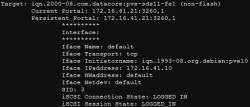

iscsiadm --mode node --targetname iqn.2000-08.com.datacore:pve-sds11-fe1 -p 172.16.41.21 --login

Displaying the Active Session

Detailed information about the iSCSI connections and the hardware can be displayed with this command:
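Discovery, login, and the per-target automatic startup setting can be combined in one loop. The following is a minimal sketch that uses a hypothetical helper. The portal addresses in the usage line are assumptions, apart from 172.16.41.21, which is taken from the example above. `iscsiadm --mode session --print=3` displays the detailed session and hardware information.

```shell
# Hypothetical helper: discover and log in to every SANsymphony FE portal passed as an argument,
# and mark each discovered node record for automatic login at boot.
# Arguments: <portal-ip> [<portal-ip> ...]
pve_iscsi_login_portals() {
    local portal target
    for portal in "$@"; do
        # SendTargets discovery prints lines of the form "<ip>:<port>,<tpgt> <target-iqn>"
        iscsiadm --mode discovery --type sendtargets --portal "$portal" |
        while read -r _ target; do
            iscsiadm --mode node --targetname "$target" --portal "$portal" \
                --op update --name node.startup --value automatic
            iscsiadm --mode node --targetname "$target" --portal "$portal" --login
        done
    done
}

# Example (the second portal address is an assumption):
#   pve_iscsi_login_portals 172.16.41.21 172.16.42.21
# Show the active sessions with full detail (print level 3):
#   iscsiadm --mode session --print=3
```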

iSCSI Settings

The iSCSI service does not start automatically by default when the PVE node boots. Refer to the iSCSI Multipath document for more information.

In the /etc/iscsi/iscsid.conf file, change the node.startup line so that the initiator starts automatically.

The default value of 'node.session.timeo.replacement_timeout' is 120 seconds. It is recommended to use a smaller value of 15 seconds instead.

After a port reinitialization, the port may be unable to log in on its own. In this case, increase the number of login attempts:

Restart the service using the following command:
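The iscsid.conf changes can be applied with a small editing helper. The following is a sketch that assumes the standard open-iscsi `key = value` syntax. The helper name is hypothetical. The login-retry parameter (`node.session.initial_login_retry_max`) is an assumption about which setting "attempts" refers to, and its value is left to you because this guide does not specify one.

```shell
# Hypothetical helper: set "key = value" in an open-iscsi config file. An existing,
# uncommented line is replaced; otherwise the setting is appended.
# Arguments: <file> <key> <value>
set_iscsid_option() {
    local file="$1" key="$2" value="$3" re
    # Escape the dots in the key so that grep/sed match them literally
    re="$(printf '%s' "$key" | sed 's/\./\\./g')"
    if grep -q "^[[:space:]]*${re}[[:space:]]*=" "$file"; then
        sed -i "s|^[[:space:]]*${re}[[:space:]]*=.*|${key} = ${value}|" "$file"
    else
        printf '%s = %s\n' "$key" "$value" >> "$file"
    fi
}

# Example with the values from this guide (<N> is not specified here):
#   CONF=/etc/iscsi/iscsid.conf
#   set_iscsid_option "$CONF" node.startup automatic
#   set_iscsid_option "$CONF" node.session.timeo.replacement_timeout 15
#   set_iscsid_option "$CONF" node.session.initial_login_retry_max <N>
#   systemctl restart iscsid open-iscsi
# Node records discovered earlier keep their old values; update them with
#   iscsiadm --mode node --op update --name <key> --value <value>
```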

Logging in to the iSCSI Targets on Reboot

For each connected iSCSI target, you need to modify the node.startup parameter in the target to automatic. The target is specified in the

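If the Connection Fails after Rebooting the PVE Server

Start the services with the command:

systemctl restart open-iscsi.service

or

systemctl restart iscsid

Re-connect all iSCSI targets:

iscsiadm -m node --login

If an error occurs, first log out of the iSCSI targets, then log in again:

iscsiadm -m node --logout

iscsiadm -m node --login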

iSCSI Multipath

To install multipath, we recommend following the official Proxmox documentation, which provides comprehensive, up-to-date installation steps and configuration details. Refer to ISCSI Multipath - Proxmox VE.

Installing Multipath Tools

The default installation does not include the 'multipath-tools' package. Use the following commands to install the package:

Creating a Multipath.conf File

After installing the package, create the following multipath configuration file: /etc/multipath.conf.

Refer to the DataCore Linux Host Configuration Guide for the relevant settings, and to the iSCSI Multipath document for the PVE-specific adjustments.

defaults {
    user_friendly_names yes
    polling_interval 60
    find_multipaths "smart"
}

blacklist {
    devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"
    devnode "^hd[a-z]"
}

devices {
    device {
        vendor "DataCore"
        product "Virtual Disk"
        path_checker tur
        prio alua
        failback 10
        no_path_retry fail
        dev_loss_tmo 60
        fast_io_fail_tmo 5
        rr_min_io_rq 100
        path_grouping_policy group_by_prio
    }
}

Restart Multipath Service to Reload Configuration

Restart the multipath service using the following command to reload the configuration:
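The package installation, the configuration reload, and a quick path check are sketched below. This assumes the Debian package `multipath-tools` and its `multipathd` service. The helper `count_ready_paths` is hypothetical. It counts the paths that `multipath -ll` reports as "active ready running" for one device, which is one way to confirm that every SANsymphony FE path is up.

```shell
# Install the package (once per PVE node):
#   apt-get install -y multipath-tools
# Reload /etc/multipath.conf after creating or changing it:
#   systemctl restart multipathd
# List every multipath device with its path groups and path states:
#   multipath -ll

# Hypothetical helper: count the paths of one multipath device that are "active ready running".
# Argument: <mpath name, e.g. mpatha>
count_ready_paths() {
    multipath -ll "$1" | grep -c 'active ready running'
}
```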

SCSI/FC

This section covers the configuration and management of Fibre Channel (FC) connectivity on Proxmox (PVE) hosts using SANsymphony storage. It provides guidance on installing required packages, verifying HBA hardware and drivers, retrieving detailed adapter information, and scanning the SCSI bus for new devices. Proper configuration of FC ensures reliable access to SANsymphony virtual disks, supports high availability, and enables integration with logical volume management (LVM) for Proxmox.

SCSI/FC (PVE Fibre Channel)

Installing Required Packages

Packages for FC-SAN support

apt-get update

apt-get install scsitools

apt-get install -y sysfsutils

apt-get install lvm2

These commands update the package lists and install the required tools. LVM2 is required to manage logical volumes.

Checking HBA Info and Drivers

Determine the Manufacturer and Model of the HBAs

Get the Vendor and Device IDs for the HBAs installed

Example:

1077 = vendor ID of QLogic

2031 = device ID

Check if the driver modules are installed

This can be done by searching the list of available modules. (Replace 6.8.12-4-pve with your kernel version in the command below).

Find Kernel version / System information

Check drivers for these HBAs are loaded in the kernel

Reload Module
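The checks above map to standard Linux tools. In the sketch below, the module names `qla2xxx` (QLogic) and `lpfc` (Emulex) are the usual in-kernel FC drivers, and `fc_driver_loaded` is a hypothetical helper. Reloading the module interrupts all FC I/O on that host.

```shell
# Commands for the steps above (run as root on the PVE node):
#   lspci | grep -i 'fibre channel'                   # manufacturer and model of the HBAs
#   lspci -nn | grep -i 'fibre channel'               # vendor:device IDs, e.g. [1077:2031]
#   find /lib/modules/"$(uname -r)" -name 'qla2xxx*'  # is the driver module available?
#   uname -a                                          # kernel version / system information
#   lsmod | grep -E 'qla2xxx|lpfc'                    # is the driver loaded?
#   modprobe -r qla2xxx && modprobe qla2xxx           # reload the module (disrupts FC I/O)

# Hypothetical helper: print which common FC HBA driver is loaded; fail if none is.
fc_driver_loaded() {
    lsmod | awk '$1 == "qla2xxx" || $1 == "lpfc" { print $1; found = 1 } END { exit !found }'
}
```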

Getting HBA Information

Simple

You can find more detailed information about the Fibre Channel adapters in the directory /sys/class/fc_host/.

The output returns the device path with PCI address and port number for each port (host).

Detailed

The listed directories contain specific information for each adapter, such as node name (WWN), port name (WWN), type, speed, status, etc.

The easiest way to get detailed information is to use the systool command with the option -v.

Rescan SCSI Bus

This identifies all SCSI devices, including new LUNs, and integrates them into your environment.
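The adapter listing, detailed query, and rescan can be run with the commands shown in the comments below. The hypothetical helper `list_fc_ports` reads the port WWPN and state of each FC host from sysfs (`port_name` and `port_state`). It accepts an optional root prefix so that it can be tried on a copy of the directory tree. The package that provides `rescan-scsi-bus.sh` may differ between Debian releases.

```shell
# Related commands from this section:
#   ls -l /sys/class/fc_host/     # device path with PCI address per port (host)
#   systool -c fc_host -v         # detailed adapter information (sysfsutils)
#   rescan-scsi-bus.sh            # rescan the SCSI bus for new LUNs

# Hypothetical helper: print "<host> <port WWPN> <port state>" for every FC port.
# Argument: [root prefix, empty on a real PVE node]
list_fc_ports() {
    local root="${1:-}" host
    for host in "$root"/sys/class/fc_host/host*; do
        [ -d "$host" ] || continue
        printf '%s %s %s\n' "${host##*/}" "$(cat "$host/port_name")" "$(cat "$host/port_state")"
    done
}
```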

NVMeoF

This section provides guidance for configuring SANsymphony NVMe over Fabrics (NVMeoF) on Proxmox (PVE). NVMeoF supports the TCP and Fibre Channel transports. NVMe/FC is supported only on Emulex HBA ports.

NVMe/TCP Settings

Installing Required Packages

Install the necessary package for NVMeoF over TCP.

Loading NVMe TCP Driver

Load the NVMe/TCP driver to enable NVMeoF connections over TCP.

Discovering NVMe Targets

Before connecting, discover all NVMeoF subsystems.

Connecting to NVMeoF Targets

Ensure that the NQN matches the NVMe target.
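The four NVMe/TCP steps are sketched below with `nvme-cli` and the `nvme-tcp` kernel module. The helper name is hypothetical. The target address and NQN are caller-supplied, and port 4420 is the NVMe/TCP default mentioned later in this section.

```shell
# Install the package first: apt-get install -y nvme-cli

# Hypothetical helper: load the NVMe/TCP driver, list the subsystems a SANsymphony
# NVMe/TCP portal offers, and connect to one of them.
# Arguments: <target IP> <subsystem NQN> [port, default 4420]
pve_nvme_tcp_connect() {
    local addr="$1" nqn="$2" port="${3:-4420}"
    modprobe nvme-tcp
    # The NQN passed to "connect" must be one of the subsystems listed here
    nvme discover --transport=tcp --traddr="$addr" --trsvcid="$port"
    nvme connect --transport=tcp --traddr="$addr" --trsvcid="$port" --nqn="$nqn"
}

# Load the driver automatically at boot (systemd modules-load.d):
#   echo nvme-tcp > /etc/modules-load.d/nvme-tcp.conf
```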

Configuring NVMe Initiator for Automatic Target Discovery and Connection

Before connecting to NVMeoF targets, configure the NVMe initiator using the /etc/nvme/discovery.conf file. This file lists the targets that the Proxmox host can automatically discover and connect to using NVMeoF commands. Each entry in the file uses the following syntax:

--transport=<trtype> --traddr=<IP-address> --trsvcid=<port> --subsysnqn=<subsystem-NQN>

- <trtype>: Transport type (for example, tcp)

- <IP-address>: IP address of the NVMeoF target

- <port>: Port number (default: 4420 for NVMe/TCP)

- <subsystem-NQN>: NVMe Qualified Name of the target subsystem

This configuration allows Proxmox to automatically discover and connect to SANsymphony NVMe targets on reboot.

For example:

--transport=tcp --traddr=192.168.1.50 --trsvcid=4420 --subsysnqn=nqn.2014-08.org.nvmexpress:uuid:1234abcd

To view the contents of the discovery file, run cat /etc/nvme/discovery.conf.
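Entries can be added idempotently with a small helper. This is a sketch: the helper name is hypothetical, and it writes only the transport, address, and port fields of the syntax above. The subsystem NQN field is left out deliberately. An entry of that shape points at the discovery controller, so `nvme connect-all` should connect to every subsystem the controller reports. Whether your nvme-cli package ships the `nvmf-autoconnect.service` unit is an assumption to verify.

```shell
# Hypothetical helper: add a discovery-controller entry unless it is already present.
# Arguments: <file> <transport> <traddr> <trsvcid>
add_discovery_entry() {
    local file="$1" line
    line="--transport=$2 --traddr=$3 --trsvcid=$4"
    mkdir -p "$(dirname "$file")"
    # -F fixed string, -x whole line, -- because the entry itself starts with dashes
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage on the PVE node (address and port from the example above):
#   add_discovery_entry /etc/nvme/discovery.conf tcp 192.168.1.50 4420
#   cat /etc/nvme/discovery.conf
#   nvme connect-all                              # no arguments: reads /etc/nvme/discovery.conf
#   systemctl enable nvmf-autoconnect.service     # reconnect at boot, if the unit is shipped
```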

NVMe/FC Settings

Installing Required Packages

Install the FC-related NVMe packages.

Loading NVMe/FC Driver

Load the NVMe over Fibre Channel driver modules.

Checking HBA Info and Drivers (Emulex only)

Determine the manufacturer and model.

Check whether the driver modules are loaded.

Getting HBA Information

Simple

Detailed

Checking NVMe/FC Initiator Information

Verify that the NVMe initiator is enabled on the host.

Example output:

NVME Initiator Enabled
XRI Dist lpfc0 Total 6144 IO 5894 ELS 250
NVME LPORT lpfc0 WWPN x100000109b1be796 WWNN x200000109b1be796 DID x000002 ONLINE
NVME RPORT WWPN x100000109bd48ea9 WWNN x200000109bd48ea9 DID x000001 TARGET DISCSRVC ONLINE
NVME Statistics
LS: Xmt 00000008c3 Cmpl 00000008c3 Abort 00000001
LS XMIT: Err 00000000 CMPL: xb 000003a1 Err 000003a1
Total FCP Cmpl 00000000189fa9f4 Issue 00000000189fa9f5 OutIO 0000000000000001
abort 000000aa noxri 00000000 nondlp 00000000 qdepth 00000000 wqerr 00000000 err 00000000
FCP CMPL: xb 000000b1 Err 00000333

The flags on the NVME RPORT line mean:

- TARGET: The remote port supports the NVMeoF target feature.

- DISCSRVC: The remote port supports the NVMeoF discovery feature.
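The Emulex checks can be scripted against the lpfc `nvme_info` file, which is assumed here to be at /sys/class/scsi_host/host*/nvme_info, where the lpfc driver typically exposes it. The three helpers are hypothetical. `fc_traddr` builds the `nn-<WWNN>:pn-<WWPN>` format used by the discover and connect commands below, for example from the 0x-prefixed values in /sys/class/fc_host/hostN/node_name and port_name.

```shell
# Related commands: lspci | grep -i emulex ; lsmod | grep -E 'lpfc|nvme_fc'
#   modprobe lpfc ; modprobe nvme-fc
#   cat /sys/class/scsi_host/host*/nvme_info

# Hypothetical helper: succeed if an lpfc nvme_info file reports the NVMe initiator as enabled.
lpfc_nvme_initiator_enabled() {
    grep -q '^NVME Initiator Enabled' "$1"
}

# Hypothetical helper: print the WWPN of every ONLINE remote port that offers the NVMe target feature.
lpfc_online_nvme_targets() {
    awk '/^NVME RPORT/ && / TARGET / && /ONLINE/ {
        for (i = 1; i < NF; i++) if ($i == "WWPN") print $(i + 1)
    }' "$1"
}

# Hypothetical helper: build an nn-<WWNN>:pn-<WWPN> address for "nvme ... --transport fc".
fc_traddr() {
    printf 'nn-%s:pn-%s\n' "$1" "$2"
}
```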

Discovering NVMeoF Subsystems

nvme discover --transport fc --host-traddr=nn-<host_node_WWN>:pn-<host_port_WWN> --traddr=nn-<target_node_WWN>:pn-<target_port_WWN>

For example:

nvme discover --transport fc --host-traddr=nn-0x200000109b1be797:pn-0x100000109b1be797 --traddr=nn-0x200000109BD48EA8:pn-0x100000109BD48EA8

Connecting / Disconnecting NVMeoF Devices

nvme connect --nqn=<subsystem_NQN> --transport fc --host-traddr=nn-<host_node_WWN>:pn-<host_port_WWN> --traddr=nn-<target_node_WWN>:pn-<target_port_WWN>

For example:

nvme connect --nqn=nqn.2014-08.org.nvmexpress:uuid:5f81659d-927b-4a30-b76f-a4adcb3961cb --transport fc --host-traddr=nn-0x200000109b1be797:pn-0x100000109b1be797 --traddr=nn-0x200000109BD48EA8:pn-0x100000109BD48EA8

NVMe Multipath

The Linux NVMe kernel subsystem implements multipathing natively, allowing multiple paths to NVMeoF targets for redundancy and performance.

Check if Multipathing is Enabled

The value "Y" indicates that multipath is enabled.

Check ANA (Asymmetric Namespace Access) State of a Namespace

Make sure <nvme device path> is replaced with the actual device path, e.g., /dev/nvme0n1. This will show ANA state and path information for multipathed namespaces.
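Both checks are sketched below. The multipath parameter is read from /sys/module/nvme_core/parameters/multipath. The helper name is hypothetical, and the optional file argument exists only so that the check can be tried on a copy of that file.

```shell
# Hypothetical helper: succeed if native NVMe multipathing is enabled in nvme_core.
# Argument: [parameter file, default /sys/module/nvme_core/parameters/multipath]
nvme_native_multipath_enabled() {
    local param="${1:-/sys/module/nvme_core/parameters/multipath}"
    [ "$(cat "$param" 2>/dev/null)" = "Y" ]
}

# Show subsystems, controllers (paths), and ANA states for one namespace:
#   nvme list-subsys /dev/nvme0n1
```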

SCSI Disk Timeout

Set the timeout to 80 seconds for all the SCSI devices created from the SANsymphony virtual disks.

For example:

There are two methods that can be used to change the SCSI disk timeout for a given device.

- Use the ‘echo’ command – this is temporary and will not survive the next reboot of the Linux host server.

- Create a custom ‘udev rule’ – this is permanent but will require a reboot for the setting to take effect.

Using the ‘echo’ Command (will not survive a reboot)

Set the SCSI Disk timeout value to 80 seconds using the following command:
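For more than one device, the echo method can be applied to every DataCore virtual disk by matching the vendor and model strings in sysfs. The following is a sketch with a hypothetical helper. Like the echo command itself, the setting is lost on reboot. The optional root prefix exists only for trying the helper on a copy of the sysfs tree.

```shell
# Single device (replace sdX):  echo 80 > /sys/block/sdX/device/timeout
# Check the current value:      cat /sys/block/sdX/device/timeout

# Hypothetical helper: set the timeout of every DataCore virtual disk (temporary, lost on reboot).
# Arguments: [timeout in seconds, default 80] [root prefix, empty on a real host]
set_datacore_timeouts() {
    local timeout="${1:-80}" root="${2:-}" dev vendor model
    for dev in "$root"/sys/block/sd*; do
        [ -r "$dev/device/vendor" ] || continue
        # sysfs pads vendor and model with trailing spaces; strip them before comparing
        vendor="$(sed 's/[[:space:]]*$//' "$dev/device/vendor")"
        model="$(sed 's/[[:space:]]*$//' "$dev/device/model")"
        if [ "$vendor" = "DataCore" ] && [ "$model" = "Virtual Disk" ]; then
            echo "$timeout" > "$dev/device/timeout"
            echo "${dev##*/}: timeout set to $timeout s"
        fi
    done
}
```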

Creating a Custom ‘udev’ Rule (permanent but requires a reboot)

Create a file called /etc/udev/rules.d/99-datacore.rules with the following settings:

SUBSYSTEM=="block", ACTION=="add", ATTRS{vendor}=="DataCore", ATTRS{model}=="Virtual Disk    ", RUN+="/bin/sh -c 'echo 80 > /sys/block/%k/device/timeout'"

Command:

- Ensure that the udev rule is exactly as written above. Otherwise, the Linux operating system may default back to 30 seconds.

- The ATTRS{model} string contains four space characters after "Virtual Disk", and these must be preserved. Otherwise, paths to SANsymphony virtual disks may not be discovered.

- Refer to the Linux Host Configuration Guide for more information.
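Because the four trailing spaces are easy to lose when copying, the rule can be written from a quoted heredoc. The following is a sketch with a hypothetical helper name. The padding comes from the 16-character SCSI product field: "Virtual Disk" is 12 characters, so four spaces follow. As stated above, the rule takes effect after a reboot.

```shell
# Hypothetical helper: write the DataCore udev rule with exactly four trailing spaces
# in the model string ("Virtual Disk" padded to the 16-character SCSI product field).
# Argument: [rule file, default /etc/udev/rules.d/99-datacore.rules]
write_datacore_udev_rule() {
    local file="${1:-/etc/udev/rules.d/99-datacore.rules}"
    # Quoted heredoc: nothing is expanded, so %k and the quotes are written verbatim
    cat > "$file" <<'EOF'
SUBSYSTEM=="block", ACTION=="add", ATTRS{vendor}=="DataCore", ATTRS{model}=="Virtual Disk    ", RUN+="/bin/sh -c 'echo 80 > /sys/block/%k/device/timeout'"
EOF
}

# After the reboot, verify: cat /sys/block/sd*/device/timeout
```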

Setting Preferred Server = ALL in SANsymphony and enabling Write Back cache for Proxmox virtual disks may cause data integrity issues on multipath NVMe-oF datastores due to unordered writes.

Serving a SANsymphony Virtual Disk to the Proxmox Node

Once the storage has been assigned to the PVE host in the SANsymphony GUI, the virtual disk, whether served over iSCSI, NVMe/TCP, or NVMe/FC, must be integrated into the host. This section covers scanning, multipath verification, creating LVMs, RAW device mapping, and reconnecting storage after a reboot.

To make the virtual disk visible on the system, the connection must be scanned again. Use the following commands depending on the protocol:

This rescan is required only for iSCSI.

Once the vDisk is connected, verify that it is correctly detected and check its paths. For both iSCSI and NVMe, use the following command to list block devices and multipath names:

Look for devices with a multipath name like mpathX if multipathing is enabled.

For NVMe (NVMe/TCP or NVMe/FC) only

Use the 'multipath' command to check whether all required paths from the SANsymphony servers are available for the virtual disk:

This applies only to iSCSI. It shows which paths are active and the associated mpathX devices.

Checking ANA (Asymmetric Namespace Access) for NVMe

You can also check the ANA (Asymmetric Namespace Access) state of NVMe subsystems. This is only for NVMe devices. It shows ANA states, namespaces, and paths, useful for multipath and failover verification.
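The scan-and-verify sequence for each protocol is sketched below. The helper name is hypothetical. For iSCSI, it uses `iscsiadm --mode session --rescan` as the rescan, which is an assumption about the command intended above. For NVMe, it lists namespaces and ANA states.

```shell
# Hypothetical helper: rescan (iSCSI only) and list SANsymphony virtual disks.
# Argument: iscsi | nvme
pve_show_vdisks() {
    case "$1" in
        iscsi)
            iscsiadm --mode session --rescan   # rescan all logged-in sessions (iSCSI only)
            lsblk                              # block devices and their mpathX names
            multipath -ll                      # active paths per mpathX device
            ;;
        nvme)
            lsblk
            nvme list                          # NVMe namespaces (NVMe/TCP and NVMe/FC)
            nvme list-subsys                   # subsystems, paths, and ANA states
            ;;
        *)
            echo "usage: pve_show_vdisks iscsi|nvme" >&2
            return 1
            ;;
    esac
}
```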

Creating a Proxmox File-System

Creating a Physical Volume for the Logical Volume

“pvcreate” initializes the specified physical volume for later use by the Logical Volume Manager (LVM).

Creating a Volume Group

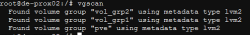

Displaying a Volume Group

Output:

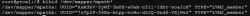

Finding out the UUID and the Partition Type

The blkid command queries information about the connected storage devices and their partitions. For NVMe devices, use /dev/nvmeXnY instead of /dev/mapper/mpath*.

Output:

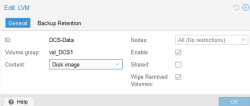

Adding LVM at the Datacenter level

To create new LVM storage, open the PVE GUI at the Datacenter level, select Storage, and click Add.

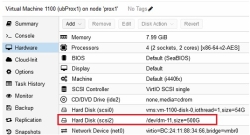
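The steps of this section can also be run from the shell, with `pvesm add lvm` as the CLI counterpart of the Datacenter > Storage > Add dialog. The following is a sketch with a hypothetical helper. The device, volume group, and storage ID in the example are assumptions. `--shared 1` reflects that every node sees the same SANsymphony LUN.

```shell
# Hypothetical helper: turn one SANsymphony virtual disk into shared LVM storage.
# Arguments: <block device, e.g. /dev/mapper/mpatha> <volume group> <PVE storage ID>
pve_create_shared_lvm() {
    local dev="$1" vg="$2" storage="$3"
    pvcreate "$dev"            # initialize the physical volume for LVM
    vgcreate "$vg" "$dev"      # create the volume group on it
    vgdisplay "$vg"            # display the volume group
    blkid "$dev"               # show UUID and type (an LVM PV reports TYPE="LVM2_member")
    # Register the volume group at datacenter level for VM disks and containers
    pvesm add lvm "$storage" --vgname "$vg" --shared 1 --content images,rootdir
}

# Example (all names are assumptions):
#   pve_create_shared_lvm /dev/mapper/mpatha vg_sds01 sds01-lvm
```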

RAW Device Mapping to Virtual Machine

Follow the steps below if an operating system or application requires a RAW device to be mapped into the virtual machine:

- After successfully serving the virtual disk (single or mirror) to the Proxmox (PVE) node, run a rescan to make the virtual disk visible on the Proxmox node using the following command:

This rescan is required only for iSCSI.

- Identify the virtual disk to use as a RAW device and its multipath name (mpathX) using the following command:

The "nvme list" applies only to NVMe devices.

- Navigate to the /dev/mapper directory and run the ls -la command to verify which dm-X device the required mpathX device is linked to.

- Hot-plug the physical device into the VM as a new virtual disk using the following command:

qm set VM-ID -scsi<No> /dev/dm-<No> # iSCSI/SCSI-FC

qm set VM-ID -scsi<No> /dev/nvme0n1 # NVMeoF (TCP/FC)

Restarting the Connection after Rebooting the Proxmox Server

After a reboot, iSCSI or NVMeoF connections may need to be restarted to make the SANsymphony virtual disks visible to the host.

Use the following commands to restart the iSCSI services on the Proxmox (PVE) server:

systemctl restart open-iscsi.service # iSCSI only

systemctl restart iscsid # iSCSI only

iscsiadm -m node --login # iSCSI only

If an error occurs during login, log out and log in again (these commands are iSCSI-specific):

iscsiadm -m node --logout # Log out of the iSCSI node first

iscsiadm -m node --login # Then log in again

NVMeoF Connections

NVMe/TCP

nvme connect --nqn=<subsystem_NQN> --transport=tcp --traddr=<IP-address> --trsvcid=4420

NVMe/FC

nvme connect --nqn=<subsystem_NQN> --transport=fc --host-traddr=nn-<host_node_WWN>:pn-<host_port_WWN> --traddr=nn-<target_node_WWN>:pn-<target_port_WWN>

NVMeoF connections may reconnect automatically if /etc/nvme/discovery.conf or the driver settings are configured correctly. A manual reconnect is needed only if the subsystem is not visible.
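The post-reboot recovery can be sketched as one hypothetical helper per protocol. For NVMe, it relies on `nvme connect-all`, which reads /etc/nvme/discovery.conf when given no arguments, so it only helps if that file is populated. The iSCSI logout/login fallback is left as a manual step, because logging out drops every session on the host.

```shell
# Hypothetical helper: restore SANsymphony storage connections after a PVE reboot.
# Argument: iscsi | nvme
pve_reconnect_storage() {
    case "$1" in
        iscsi)
            systemctl restart iscsid open-iscsi
            # If this login reports an error, log out first (iscsiadm -m node --logout)
            # and log in again, as described above.
            iscsiadm -m node --login
            ;;
        nvme)
            nvme connect-all      # no arguments: uses the entries in /etc/nvme/discovery.conf
            nvme list-subsys      # confirm that the subsystems and paths are visible
            ;;
        *)
            echo "usage: pve_reconnect_storage iscsi|nvme" >&2
            return 1
            ;;
    esac
}
```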